Abstract

This paper discusses CAVE6D, a tool for collaboratively visualizing multidimensional, time-varying environmental data in the CAVE and ImmersaDesk virtual reality environments. It describes how CAVE6D was built and how it supports effective collaboration and interaction among multiple users in a visualization session.

1. Introduction

In recent years there has been a rapid increase in the capability of environmental observation systems to provide high-resolution spatial and temporal oceanographic data from estuarine and coastal regions. Similar advances in computational performance, in the development of distributed applications, and in data sharing over national high-performance networks now enable scientists to attack previously intractable large-scale environmental problems through modeling and simulation. Research in these high-performance computing technologies for computation- and data-intensive problems is driven by the need for a better understanding of dynamical processes on fine scales, improved ecosystem monitoring capabilities, and better management of and response to environmental crises such as pollution containment, storm preparation, and biohazard remediation.

Notable among the many emerging applications for visualizing large scientific datasets are IRIS Explorer, AVS, and Khoros. However, all of these systems are designed on the assumption of a single user, an assumption that no longer holds. As Jason Wood et al. [9] put it, "modern research is rarely a one-person task; it is carried out by large teams, often multi-disciplinary, each member of the team bringing different skills to the table". Team members may be geographically dispersed and need some way to analyze and discuss issues and results together. Scientists, educators, students, and managers must be able to collaboratively view, analyze, and interact with the data in a way that is understandable, repeatable, and intuitive. We believe that the most efficient way to do this is through a tele-immersive environment (TIE), which allows raw data to become usable information and then cognitively useful knowledge.

We define tele-immersion as the integration of audio and video conferencing with persistent collaborative virtual reality (VR) in the context of data mining and significant computation. When participants are tele-immersed, they can see and interact with each other in a shared environment. This environment persists even after all the participants have left. The environment may control supercomputing computations, query databases autonomously, and gather the results for visualization when the participants return. Participants may even leave messages for their colleagues, who can then replay them as a full audio, video, and gestural stream. The near real-time feedback provided by TIEs has the potential to change the way data or model results are viewed and interpreted, the way the resulting information is disseminated, and the way the ensuing decisions or policies are enacted.

Toward this goal, we have merged CAVE5D, an application for visualizing environmental data, with the CAVERNSoft tele-immersive system [3,4,5].

2. CAVE5D

Cave5D, co-developed by Wheless and Lascara of Old Dominion University and Dr. Bill Hibbard of the University of Wisconsin, is a configurable virtual reality application framework.

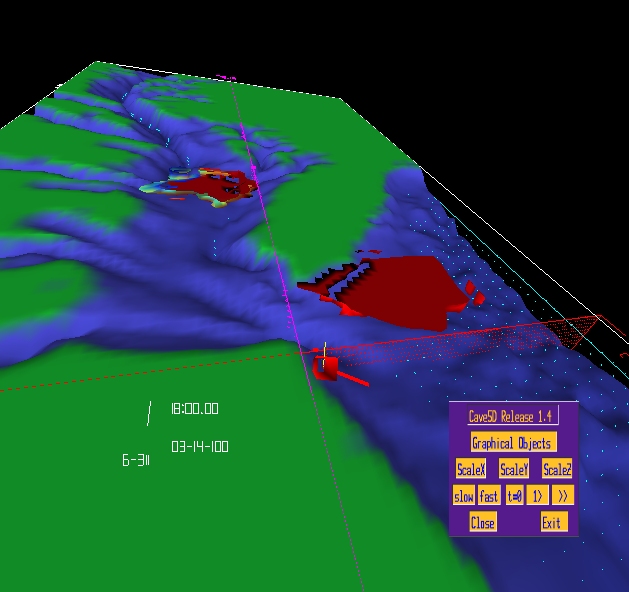

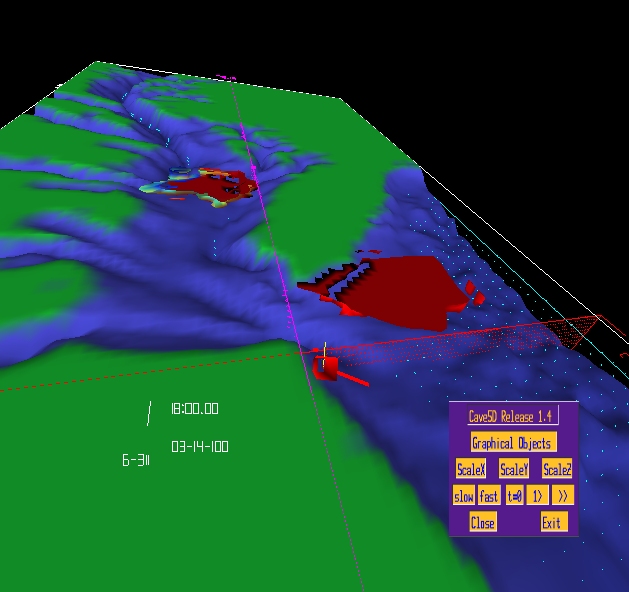

Cave5D is built on Vis5D [2], a graphics library for displaying multidimensional numerical data from atmospheric, oceanographic, and similar models using techniques that include isosurfaces, contour slices, volume visualization, wind/trajectory vectors, and various image projection formats. Figure 1 shows Cave5D being used to view results from a numerical model of circulation in the Chesapeake Bay. The Cave5D framework integrates the CAVE [1] VR software libraries (see http://www.vrco.com) with the Vis5D library to visualize numerical data in the Vis5D file format on the ImmersaDesk or CAVE, and lets the user interact with the data. The Vis5D file format holds data as a five-dimensional rectangle: three dimensions for the spatial grid, one for time, and one that enumerates multiple physical variables (such as temperature), each of which can be displayed with the techniques listed above (contour slices, isosurfaces, vectors, etc.). A set of interface panels lets the user activate the objects to be visualized, control the time clock, or change the x, y, and z scales of the world. CAVE5D as it existed thus provided visualization of, and interaction with, the data. However, it provided no synchronous or asynchronous means of interacting with other people.

Figure 1. CAVE5D being used to visualize results from numerical models of the Chesapeake Bay.
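To make the five-dimensional layout concrete, here is a minimal Python sketch of a grid indexed by time step, physical variable, vertical level, row, and column. The class name `Grid5D`, the index order, and the flat in-memory storage are illustrative assumptions; this is not the on-disk Vis5D format or the Vis5D API.

```python
from dataclasses import dataclass, field


@dataclass
class Grid5D:
    """Hypothetical in-memory 5D grid: time x variable x level x row x column.

    Illustrative layout only; not the actual Vis5D file format."""
    num_times: int
    var_names: list
    num_levels: int
    num_rows: int
    num_cols: int
    data: list = field(init=False)

    def __post_init__(self):
        size = (self.num_times * len(self.var_names) * self.num_levels
                * self.num_rows * self.num_cols)
        self.data = [0.0] * size  # flat, row-major storage

    def _index(self, t, var, lev, row, col):
        v = self.var_names.index(var)  # the 5th dimension enumerates variables
        return ((((t * len(self.var_names) + v) * self.num_levels + lev)
                 * self.num_rows + row) * self.num_cols + col)

    def get(self, t, var, lev, row, col):
        return self.data[self._index(t, var, lev, row, col)]

    def set(self, t, var, lev, row, col, value):
        self.data[self._index(t, var, lev, row, col)] = value

    def horizontal_slice(self, t, var, lev):
        """2D field of one variable at one level and time step, i.e. the
        data behind a horizontal contour slice."""
        return [[self.get(t, var, lev, r, c) for c in range(self.num_cols)]
                for r in range(self.num_rows)]
```

Keeping variables as one more grid dimension means that selecting "temperature at time step 1, level 2" is just a fixed set of indices, which is what a contour slice or isosurface then renders.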

3. CAVE6D - Collaborative extension of CAVE5D

Cave6D integrates CAVE5D with CAVERNSoft [3,4,5] to produce a TIE that allows multiple users of CAVE5D to share a common dataset and interact with each other simultaneously. In addition to the user-data interaction of CAVE5D, it provides user-user interaction among multiple participants, each running a separate instance of the visualization session.

The CAVERNSoft programming environment is a systematic solution to the challenges of building tele-immersive environments [3,4,5]. The functionality of CAVERNSoft is too extensive to describe here (see http://www.evl.uic.edu/cavern for details). Briefly, this framework employs an Information Request Broker (IRB) as the nucleus of all CAVERN-based client and server applications. An IRB is an autonomous repository of persistent data managed by a database and accessible through a variety of networking interfaces. This hybrid system combines the distributed shared memory model with active database technology and real-time networking technology under a unified interface, allowing the construction of arbitrary topologies of interconnected, tele-immersed participants.

3.1 Collaboration in CAVE6D

An instance of collaboration in CAVE6D has the following characteristics

Fig. 2. A typical collaborative session involving four users in the Chesapeake Bay environment.

Fig. 3.a. User 1 working independently.

Fig. 3.b. User 2 working independently.

Fig. 3.c. User 1 shares User 2's perspective for the Temperature Slice.

Fig. 3.d. User 2 presses the Global switch for the Temperature Slice.

Fig. 3. Using the global switch. Fig. 3.a shows the temperature slices over the Rockies, but when the second user makes the temperature slice global (Fig. 3.d), the slices are synchronized with the second user's setting and move to the east coast.

4. Implementation

As mentioned earlier, CAVE6D was produced by retrofitting CAVE5D, a non-collaborative application, with tele-immersive capabilities using CAVERNSoft. CAVERNSoft employs the Information Request Broker (IRB) (Figure 5), which provides a unified interface to all the networking and database needs of the collaborative environment and supports the distribution of data across the clients. Each client or server has its own 'personal' IRB, which caches data retrieved from the other IRBs. To connect to a remote IRB, the personal IRB creates a communication channel to it and declares the channel's properties. Once the channel is set up, a number of keys can be created across it and linked together by giving them a unique identifier. A key acts as a handle to a storage location in the IRB's database, and each key can be assigned a specific item of data to be transmitted across the network. Keys can be set to trigger a callback whenever they are filled with data; the callback can then transmit the data through the link to the remote key. In this way the personal IRB transparently manages data sharing with remote subscribers: any information received by a key is automatically propagated to all the other keys linked to it.
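The key/link mechanism can be modeled in a few lines. The sketch below is a hypothetical in-process model, not the CAVERNSoft API: the classes `IRB` and `Key`, the `link()` helper, and the path strings are invented for illustration, and channels, networking, and the database are reduced to a dictionary. It shows a key storing data locally, firing its callbacks, and propagating the value to its linked keys.

```python
class IRB:
    """Hypothetical stand-in for an Information Request Broker."""

    def __init__(self, name):
        self.name = name
        self.store = {}  # stands in for the IRB's persistent database
        self.keys = {}

    def key(self, path):
        """Return the key for `path`, creating it on first use."""
        if path not in self.keys:
            self.keys[path] = Key(self, path)
        return self.keys[path]


class Key:
    """Handle to one storage location in an IRB."""

    def __init__(self, irb, path):
        self.irb = irb
        self.path = path
        self.links = []      # keys in other IRBs linked to this one
        self.callbacks = []  # called as callback(path, value) on new data

    def put(self, value, _came_from=None):
        self.irb.store[self.path] = value
        for callback in self.callbacks:
            callback(self.path, value)
        # Propagate to every linked key except the one the data came from.
        # Assumes the links form a tree (e.g. a star); a cycle would recurse forever.
        for other in self.links:
            if other is not _came_from:
                other.put(value, _came_from=self)


def link(a, b):
    """Link two keys (normally in different IRBs) so data flows both ways."""
    a.links.append(b)
    b.links.append(a)
```

Giving both keys the same path plays the role of the unique identifier: once linked, a value put into either key appears in both IRBs' stores and triggers the callbacks registered on the receiving side.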

An IRB interface between an IRB and the application provides a tightly coupled mechanism for automatically transferring messages between the two. For CAVE5D, however, this mechanism could not be used because of a threading conflict between CAVE5D and CAVERNSoft: CAVERNSoft is based on pthreads (POSIX threads), which cannot be mixed with the 'sproc' threading model used by CAVE5D. The IRB interface therefore could not be embedded directly in CAVE5D. Instead, the two communicate through shared memory arenas (Figure 6). Two arenas are created at each location. The IRB writes the data it receives from the remote IRB into one of them, where the local CAVE6D application reads it; the application writes its own data into the other arena, from which the IRB reads it.
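The two-arena arrangement can be sketched with Python's `multiprocessing.shared_memory` module standing in for the shared memory arenas used by CAVE6D. The record layout (a sequence number plus three floats), the `Arena` class, and `make_site_arenas()` are assumptions for illustration. In practice the IRB and the application would be separate processes that attach to each arena by name.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical record: a sequence number followed by three floats
# (for example tracker x, y, z). Not CAVE6D's actual data layout.
RECORD = struct.Struct("<I3f")


class Arena:
    """One-way shared memory block: one process writes, the other reads."""

    def __init__(self, name=None):
        self.owner = name is None  # create a new block unless attaching by name
        self.shm = shared_memory.SharedMemory(
            name=name, create=self.owner, size=RECORD.size if self.owner else 0)
        self.seq = 0

    @property
    def name(self):
        return self.shm.name

    def write(self, x, y, z):
        # No locking here: a reader could observe a half-written record.
        # A real implementation would add a lock or double buffering.
        self.seq += 1
        RECORD.pack_into(self.shm.buf, 0, self.seq, x, y, z)

    def read(self):
        """Return (sequence number, x, y, z); a new sequence means new data."""
        return RECORD.unpack_from(self.shm.buf, 0)

    def close(self):
        self.shm.close()
        if self.owner:
            self.shm.unlink()


def make_site_arenas():
    """Create the two arenas for one site:
    irb_to_app is written by the IRB and read by the application;
    app_to_irb is written by the application and read by the IRB."""
    irb_to_app = Arena()
    app_to_irb = Arena()
    return irb_to_app, app_to_irb
```

Using two one-way blocks means each block has exactly one writer, so neither side ever overwrites data the other side is producing.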

The application writes data into shared memory at a rate that the CAVERNSoft IRB can keep pace with when reading (in the current implementation, once every five computations). The IRB stores this information in the corresponding keys and then updates the matching keys at the remote end. The rate at which the IRB sends information across the network depends on factors such as network conditions and local processor power; on slow networks or processors, the IRB should send information at a lower rate.
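Both rates described above can be expressed as simple throttles. In this hypothetical sketch, `EveryNth` models the application's once-every-five-computations write to shared memory, and `MinInterval` is an assumed way for the IRB side to lower its send rate on slow networks or processors; the paper does not specify CAVE6D's actual policy for choosing the IRB send rate.

```python
import time


class EveryNth:
    """Return True on every n-th call, e.g. write to shared memory once
    every 5 computations."""

    def __init__(self, n=5):
        self.n = n
        self.count = 0

    def tick(self):
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class MinInterval:
    """Hypothetical sender-side limiter: allow at most one send per
    `interval` seconds; a larger interval suits slow networks or processors."""

    def __init__(self, interval, clock=time.monotonic):
        self.interval = interval
        self.clock = clock
        self.last = None

    def ready(self):
        now = self.clock()
        if self.last is None or now - self.last >= self.interval:
            self.last = now
            return True
        return False
```

Injecting the clock keeps `MinInterval` testable and lets the interval be tuned per site without touching the send loop.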

Figure 7 shows the content of the information sent across the network. Each instance of the application maintains a list of all the active avatars present in the environment and stores their data. It sends a packet containing its own state information and receives such packets from all the active avatars, updating its list every time it receives a packet from an avatar. The data packet consists of:

Id - identifies the packet.

Tracker coordinates - used to draw the avatar.

Time stamp - used to synchronize the simulation at each time step. Each value received from a remote location is compared with the local clock, and the local clock is set to the higher of the two. This makes slower instances catch up with the others at every time stamp and prevents faster instances from going back in time to match slower ones.

Button states - indicate whether a particular global/local switch is turned on; if it is, the corresponding graphical parameter is synchronized.

This synchronization is done by taking the location of the parameter set by the participant who is moving it and making that location the same in every instance of the application across the network. Each application then reads the data corresponding to this grid location and displays it.
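A minimal sketch of how an instance might apply incoming packets, assuming each packet carries the fields listed above. The names `StatePacket` and `LocalSession`, the dictionary representation of button states and grid locations, and the rule that the receiver adopts the sender's location for every parameter the sender has switched to global are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class StatePacket:
    """Hypothetical per-avatar state packet."""
    avatar_id: int
    tracker: tuple         # (x, y, z) position used to draw the avatar
    timestamp: int         # sender's simulation time step
    global_buttons: dict   # parameter name -> True if its switch is global
    locations: dict        # parameter name -> grid location (e.g. slice level)


@dataclass
class LocalSession:
    my_id: int
    timestamp: int = 0
    locations: dict = field(default_factory=dict)
    avatars: dict = field(default_factory=dict)  # avatar id -> latest packet

    def receive(self, pkt):
        if pkt.avatar_id == self.my_id:
            return  # ignore our own packet if it is echoed back
        self.avatars[pkt.avatar_id] = pkt  # update the active-avatar list
        # Take the larger time stamp: slower instances catch up and
        # faster ones never step back in time.
        self.timestamp = max(self.timestamp, pkt.timestamp)
        # For each parameter the sender has made global, adopt the sender's
        # grid location so every instance displays the same data.
        for name, is_global in pkt.global_buttons.items():
            if is_global and name in pkt.locations:
                self.locations[name] = pkt.locations[name]
```

Taking the maximum time stamp makes the update order-independent: whichever packets arrive, every instance converges on the most advanced time step seen so far.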

Note that the current state values, rather than events, are passed to the remote instances of the application. For example, instead of sending a single move event when an object moves, the application continuously sends the object's current position coordinates, so that new participants can join the session at any time and still be in sync with the others.
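The advantage of sending state rather than events shows up when someone joins late. This hypothetical simulation tracks one slice position: a joiner that only applies the move events it receives ends up at the wrong position, while a joiner that receives the continuously sent absolute position is correct after its first packet. The function name and the integer positions are assumptions for illustration.

```python
def simulate(moves, join_at):
    """Compare two late joiners who only see traffic from frame `join_at` on.

    moves: per-frame displacement of a slice (0 means no move that frame).
    Returns (true_position, position_from_events, position_from_states)."""
    true_pos = 0
    from_events = 0     # joiner that applies the move deltas it receives
    from_states = None  # joiner that overwrites with the latest absolute position
    for frame, delta in enumerate(moves):
        true_pos += delta
        if frame >= join_at:
            if delta:
                from_events += delta  # event message: "moved by delta"
            from_states = true_pos    # state message, sent every frame
    return true_pos, from_events, from_states
```

For example, `simulate([2, 3, 0, 0], join_at=2)` returns `(5, 0, 5)`: the event-based joiner missed both moves, while the state-based joiner is in sync.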

Global parameters need an effective locking mechanism. In the global state, all the immersed participants can manipulate a parameter equally, but two people must not do so at the same time; if two participants tried to move a global parameter in opposite directions simultaneously, the results would be unpredictable. To prevent this, CAVE6D temporarily gives the lock to the user who started moving the parameter first and takes the lock back as soon as that move is over.
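The first-come locking rule can be sketched as follows. This is a hypothetical single-process model (`GlobalParamLock` and `GlobalParameter` are invented names); in a networked session, competing lock requests would also have to be ordered consistently, for example by the central server, which this sketch does not show.

```python
class GlobalParamLock:
    """First-come lock for one global parameter: the first user to start
    moving it holds the lock until that move ends."""

    def __init__(self):
        self.holder = None

    def begin_move(self, user):
        if self.holder is None:
            self.holder = user
        return self.holder == user  # True only for the lock holder

    def end_move(self, user):
        if self.holder == user:
            self.holder = None


class GlobalParameter:
    def __init__(self, value):
        self.value = value
        self.lock = GlobalParamLock()

    def drag(self, user, delta):
        # Moves from anyone other than the lock holder are ignored.
        if self.lock.holder == user:
            self.value += delta
            return True
        return False
```

Releasing the lock at the end of each move, rather than holding it for the whole session, lets any participant take control of a global parameter next.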

If the user does not specify an avatar color, it is chosen at run time. At start-up, the application first listens to find out who is already in the environment (receiving packets without sending any) and then assigns itself an unused avatar color.
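The listen-then-choose color assignment might look like the sketch below, assuming a hypothetical fixed palette and that the packets observed during the listen-only period are passed in as dictionaries with a `color` entry. Two clients that start at exactly the same moment could still pick the same color; the sketch does not resolve that race.

```python
# Hypothetical palette; CAVE6D's actual avatar colors are not specified here.
PALETTE = ("red", "green", "blue", "yellow", "cyan", "magenta")


def choose_avatar_color(observed_packets, requested=None, palette=PALETTE):
    """Pick an avatar color after a listen-only start-up period.

    observed_packets: packets received while listening (without sending),
    each a dict with a "color" entry. A user-specified color always wins."""
    if requested is not None:
        return requested
    in_use = {packet["color"] for packet in observed_packets}
    for color in palette:
        if color not in in_use:
            return color
    return palette[0]  # every color taken: fall back to reusing one
```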

CAVE6D uses a shared centralized network topology, in which all the clients connect to a server. Each client IRB links a key to a key on the central server IRB, which accepts links from all the clients. Each client IRB continuously transmits packets of its local state information to the server at small, constant time intervals. Each time the server receives such a packet, it forwards it to all the connected clients. The server also sends its own local state at constant time intervals, like the other clients. In this way, each client receives the state of every other connected client.
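The relay behavior of the central server can be modeled without any networking. In this hypothetical sketch, each connected client is represented by an inbox list, and `RelayServer` forwards every received packet to all inboxes and also broadcasts its own state; the class and method names are assumptions, not CAVERNSoft calls.

```python
class RelayServer:
    """In-process model of the shared centralized (star) topology."""

    def __init__(self, server_id):
        self.server_id = server_id
        self.inboxes = {}  # client id -> list of packets delivered to it

    def connect(self, client_id):
        inbox = []
        self.inboxes[client_id] = inbox
        return inbox

    def on_client_packet(self, packet):
        # Forward each incoming packet to every connected client; clients
        # ignore packets that carry their own id.
        for inbox in self.inboxes.values():
            inbox.append(packet)

    def send_own_state(self, state):
        # The server also broadcasts its own state, like any other participant.
        self.on_client_packet({"id": self.server_id, **state})
```

Each client needs only one link, to the server, at the cost of the server sending one copy of every packet to each connected client.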

Both TCP and UDP are used. A TCP channel carries data such as the time stamp and the button states, because these are critical and none of these packets may be lost: a lost time stamp value could make the simulation skip a step, and a missing button state value could lose a global/local switch event. The tracker values and the parameter state values, however, can be sent over the UDP channel to save overhead. Losing some of these values does little damage, because the following packet restores the state of the parameters and the avatars even if an earlier one was lost.
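A sketch of the channel split, assuming JSON-encoded packets and a hypothetical assignment of fields to channels that follows the text. For a self-contained test, `socket.socketpair()` can stand in for the TCP connection (on POSIX it is a Unix-domain stream socket, which likewise delivers bytes reliably and in order), and a UDP socket on the loopback interface carries the loss-tolerant fields.

```python
import json
import socket

# Hypothetical field split following the text: losing a time stamp or a
# global/local button event is harmful, so those go over the reliable stream;
# tracker and parameter positions are resent every update, so UDP loss is tolerable.
RELIABLE_FIELDS = ("id", "timestamp", "buttons")
UNRELIABLE_FIELDS = ("id", "tracker", "locations")


def split_packet(packet):
    reliable = {k: packet[k] for k in RELIABLE_FIELDS if k in packet}
    unreliable = {k: packet[k] for k in UNRELIABLE_FIELDS if k in packet}
    return reliable, unreliable


def send_packet(stream_sock, udp_sock, udp_addr, packet):
    reliable, unreliable = split_packet(packet)
    # A stream socket has no message boundaries, so end each message with a newline.
    stream_sock.sendall(json.dumps(reliable).encode() + b"\n")
    # Each UDP datagram is self-contained; if it is dropped, the next one
    # carries newer state anyway.
    udp_sock.sendto(json.dumps(unreliable).encode(), udp_addr)
```

The id travels on both channels so the receiver can attribute each half of the packet to the right avatar.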

5. Observations

CAVE6D has been demonstrated at a number of conferences (including NGI, Internet 2, Alliance'98, HPDC'98, and SuperComputing'98), where collaborative demos were carried out between the exhibit floor and a number of remote locations. Many other collaborative sessions have also been conducted with CAVE6D, yielding interesting observations about how people used the application and its features, which of the existing collaborative abilities they liked, and what more they expected from a collaborative visualization session. These observations provide valuable guidance for the design of collaborative visualization applications. Some of them are enumerated below. Generally there were two kinds of scenarios. In the first, one member of the session acts as a teacher or guide and the others are students or tourists. In the second, the participants have comparable knowledge of the environment and jointly explore the data, collaboratively correlating what they see in the environment.

6. Closing Remarks

Visualization in the modern world is a collaborative activity. Groups separated by large geographical distances no longer need to gather at the same location to do it: high-speed networks and TIE technologies let them come together remotely and be immersed together in the same data environment. Cave5D and Cave6D are currently distributed and supported by the Virtual Environments Lab at Old Dominion University (http://www.ccpo.odu.edu/~cave5d). The CAVE library, which allows Cave5D and Cave6D to run on CAVEs, ImmersaDesks, and non-VR desktop workstations, may be obtained from http://www.vrco.com. CAVERNSoft may be obtained from http://www.evl.uic.edu/cavern.

Future work will extend Cave6D to dynamically download large datasets from central or distributed servers. Work is also under way to integrate a number of other desirable features, such as recording of collaborative sessions, persistence, run-time configuration, improved interfaces, and the other features mentioned in this paper.

[10] Vijendra Jaiswal. CAVEvis: Distributed Real-Time Visualization of Time Varying Scalar and Vector Fields Using the CAVE Virtual Reality System. Proceedings of IEEE Visualization 1997.