Yu-Chung Chen, Dmitri Svistula, Ratko Jagodic

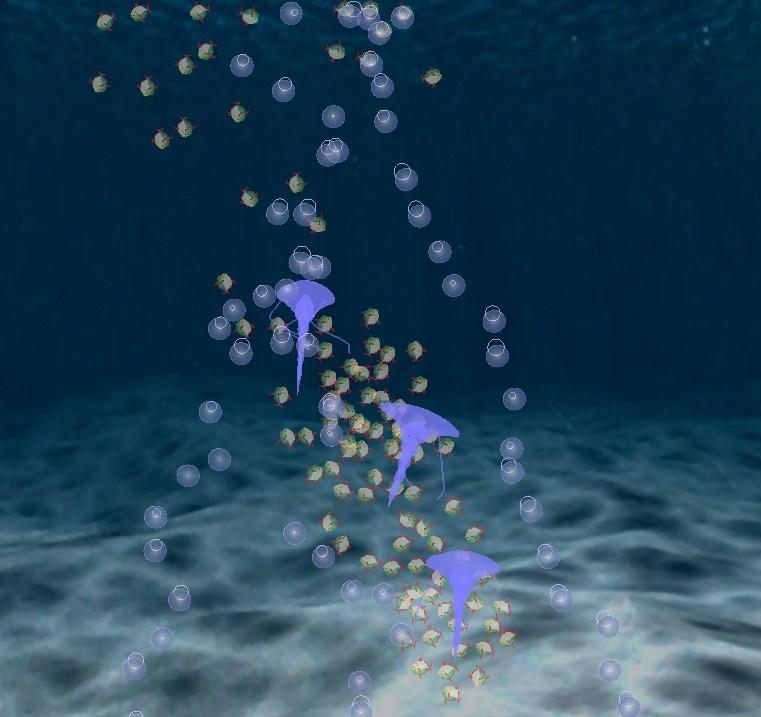

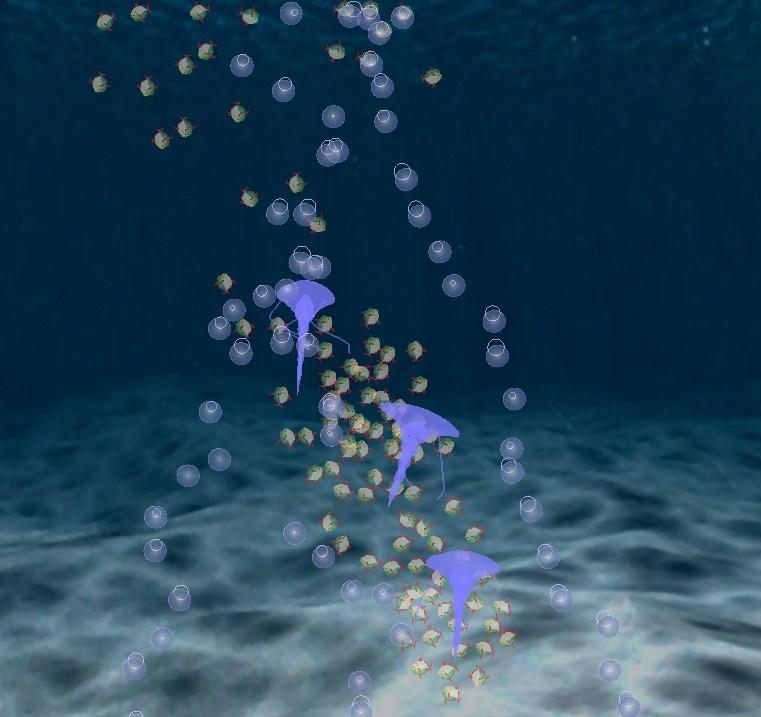

The Dancing Jellyfish Idea

The main theme is jellyfish dancing to music. The scene is set underwater, and to make things more interesting we added bubbles that move in a boid-like fashion. Our starting point was Dmitri's scenegraph framework and the boid code from project two. The motion capture data directly drives the motion of the jellyfish, and each jellyfish in turn loosely guides a group of bubbles that follow the three main flocking rules. The chosen music is "The Thieving Magpie (Abridged)" from the Clockwork Orange soundtrack.
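As a rough illustration of how bubbles can flock while being loosely guided by a jellyfish, the sketch below combines the three classic flocking rules (separation, alignment, cohesion) with a weak pull toward a leader position. It is not the project's actual code: `Vec3`, `Bubble`, `FlockWeights`, `steering`, and all weight values are hypothetical names and numbers chosen for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical minimal vector type; the real scenegraph framework has its own.
struct Vec3 { float x, y, z; };

static Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float length(Vec3 a) { return std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z); }

struct Bubble { Vec3 position; Vec3 velocity; };

// Illustrative weights (assumed values); the leader weight is kept small
// so that the jellyfish only loosely guides the bubbles.
struct FlockWeights {
    float separation = 1.5f;
    float alignment  = 1.0f;
    float cohesion   = 1.0f;
    float leader     = 0.3f;
};

// Steering vector for bubble `self`, given its flock and a leader position.
Vec3 steering(std::size_t self, const std::vector<Bubble>& flock, Vec3 leaderPos,
              float neighborRadius, const FlockWeights& w = FlockWeights())
{
    assert(self < flock.size());
    const Bubble& me = flock[self];
    Vec3 separation{0.0f, 0.0f, 0.0f};
    Vec3 velocitySum{0.0f, 0.0f, 0.0f};
    Vec3 positionSum{0.0f, 0.0f, 0.0f};
    int neighbors = 0;

    for (std::size_t i = 0; i < flock.size(); ++i) {
        if (i == self) continue;
        const Vec3 away = sub(me.position, flock[i].position);
        const float d = length(away);
        if (d == 0.0f || d >= neighborRadius) continue;
        // Rule 1, separation: push away from neighbors, more strongly when close.
        separation = add(separation, scale(away, 1.0f / (d * d)));
        velocitySum = add(velocitySum, flock[i].velocity);
        positionSum = add(positionSum, flock[i].position);
        ++neighbors;
    }

    // Weak attraction toward the leader (the jellyfish).
    Vec3 result = scale(sub(leaderPos, me.position), w.leader);
    if (neighbors > 0) {
        const float inv = 1.0f / static_cast<float>(neighbors);
        // Rule 2, alignment: match the average velocity of the neighbors.
        const Vec3 alignment = sub(scale(velocitySum, inv), me.velocity);
        // Rule 3, cohesion: move toward the neighbors' center.
        const Vec3 cohesion = sub(scale(positionSum, inv), me.position);
        result = add(result, scale(separation, w.separation));
        result = add(result, scale(alignment, w.alignment));
        result = add(result, scale(cohesion, w.cohesion));
    }
    return result;
}
```

In a simulation loop, the returned vector would typically be added to the bubble's velocity (scaled by the time step) before the position is advanced.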

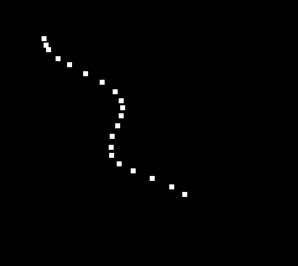

Motion Capture Process

We first tried the default motion capture program, which represents each tracker by its sensor ID. However, due to the nature of the motion in our animation, we found it difficult to picture the final motion of our characters. We therefore modified the motion capture program to display a trail behind each sensor, since this was more important to us than knowing the sensor ID. The trail also told us how fast we were moving the sensors: if a sensor was moving fast, the blocks composing its trail were further apart, and if it was moving slowly, they were closer together. The image below shows what sensor movement might look like:

Animation

y = A * cos ( B * x + C) or

y = A * sin ( B * x + C)

At absolute rest, the motion of the tentacles is defined by either a sin or a cos function, where A is the amplitude, B is the frequency, and C is the phase shift of the curve. Animation is achieved by continuously shifting the curve and looking at a portion of it. Because the tentacles should look fixed to a point on the body, the amplitude increases linearly along the tentacle, so the points where the tentacles are attached do not appear to wiggle. The frequency changes dynamically and is calculated from the current velocity vector, defined as the difference between the last and current positions. If the jellyfish is moving up, the frequency is based on the magnitude of the velocity vector. When it goes down, the frequency is a fixed number and the tentacles look more at rest, which makes the jellyfish look like it is sinking. Movement of the body also creates distortions in the tentacles. This is achieved by adding sin or cos curves to the positional curve.
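The tentacle curve described above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the project's code: `Vec3`, `tentacleFrequency`, `tentacleOffsets`, and the constants `restFrequency` and `speedGain` are hypothetical, and the linear mapping from speed to frequency is only one plausible choice.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical minimal vector type used only for this sketch.
struct Vec3 { float x, y, z; };

// Frequency B derived from the velocity (current position minus last position).
// Moving up: frequency grows with speed (assumed linear mapping).
// Moving down or resting: a fixed frequency, so the tentacles look calmer.
float tentacleFrequency(const Vec3& lastPos, const Vec3& currentPos,
                        float restFrequency = 1.0f, float speedGain = 4.0f)
{
    const Vec3 v{currentPos.x - lastPos.x,
                 currentPos.y - lastPos.y,
                 currentPos.z - lastPos.z};
    if (v.y > 0.0f) {
        const float speed = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
        return restFrequency + speedGain * speed;
    }
    return restFrequency;
}

// Sideways offsets y_i = A_i * sin(B * x_i + C) sampled along one tentacle.
// A_i rises linearly from 0 at the attachment point to maxAmplitude at the tip,
// so the attached end stays still while the tip swings the most.
std::vector<float> tentacleOffsets(int numPoints, float tentacleLength,
                                   float maxAmplitude, float frequency, float phase)
{
    assert(numPoints >= 2);
    std::vector<float> offsets(numPoints);
    for (int i = 0; i < numPoints; ++i) {
        const float t = static_cast<float>(i) / static_cast<float>(numPoints - 1);
        const float x = t * tentacleLength;      // distance along the tentacle
        const float amplitude = maxAmplitude * t; // linear growth toward the tip
        offsets[i] = amplitude * std::sin(frequency * x + phase);
    }
    return offsets;
}
```

Increasing `phase` by a small amount every frame shifts the curve and produces the wave-like animation; recomputing `frequency` each frame from the last and current positions makes the tentacles react to the jellyfish's motion.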

Each boid is texture-mapped with a round bubble image plus a white circle that is slightly displaced from the center point of the boid based on its velocity, to create the illusion of a bubble. The bubbles try to follow nearby jellyfish, but since they are not in pre-defined flocks they can switch from following one jellyfish to following another.
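The two ideas in this paragraph can be sketched roughly as below: a highlight offset derived from the bubble's velocity, and a per-frame choice of the nearest jellyfish so that a bubble can change leaders. The names `Vec2`, `highlightOffset`, and `nearestJellyfish`, the 2D simplification, and the clamping to `maxOffset` are assumptions made for this example; the direction of the displacement relative to the velocity is controlled by the sign of `scale`, since the original does not specify it.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical 2D vector; the sketch works in the plane of the bubble's quad.
struct Vec2 { float x, y; };

// Offset of the white highlight circle from the bubble center.
// It follows the velocity (times `scale`) and is clamped to `maxOffset`
// so the highlight never leaves the bubble image.
Vec2 highlightOffset(const Vec2& velocity, float scale, float maxOffset)
{
    assert(maxOffset >= 0.0f);
    Vec2 offset{velocity.x * scale, velocity.y * scale};
    const float len = std::sqrt(offset.x * offset.x + offset.y * offset.y);
    if (len > maxOffset && len > 0.0f) {
        const float k = maxOffset / len;
        offset.x *= k;
        offset.y *= k;
    }
    return offset;
}

// Index of the jellyfish closest to the bubble, or jellyfish.size() if the
// list is empty. Calling this every frame lets a bubble switch leaders
// whenever another jellyfish comes closer.
std::size_t nearestJellyfish(const Vec2& bubble, const std::vector<Vec2>& jellyfish)
{
    std::size_t best = jellyfish.size();
    float bestDistSq = std::numeric_limits<float>::max();
    for (std::size_t i = 0; i < jellyfish.size(); ++i) {
        const float dx = jellyfish[i].x - bubble.x;
        const float dy = jellyfish[i].y - bubble.y;
        const float distSq = dx * dx + dy * dy;
        if (distSq < bestDistSq) {
            bestDistSq = distSq;
            best = i;
        }
    }
    return best;
}
```

Comparing squared distances avoids a square root per jellyfish, which is a common choice when the comparison is repeated for every bubble on every frame.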

Code & Downloads

Since the starting code was written in C++ and OpenGL we stayed with that.

Download source.

Download binary (mac).

Nothing yet.

Questions & Answers

References