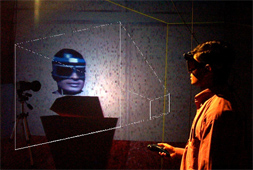

Figure 1: 3D model of the user's head with the projected video texture

Representing human users in virtual environments has long been a challenging problem in collaborative virtual reality applications. Earlier avatar representations used techniques such as real-time animation of the user's facial features, green/blue-screen chroma-keying, or stereo-based methods to extract a video cutout of the user. Achieving a good degree of realism took enormous effort and depended on constrained lighting and background to extract the cutout. These methods were therefore not applicable outside controlled studio environments, or in virtual environments such as the CAVE™ that are inherently dark.

This paper presents how view-dependent texture mapping [1] can be used to produce realistic avatars while eliminating the constraints imposed by background and lighting requirements. A two-step approach is taken to achieve realistic 3D video avatars using projective texture mapping of video.

As a first step, an accurate 3D model of the user's head is obtained using a Cyberware laser scanner. This model is used as the head of the avatar, and head tracking in the CAVE controls its motion. The coordinate systems of the model and the tracker sensor element are aligned manually.

As a second step, the camera that captures the video of the user is calibrated in the CAVE/tracker space using the method suggested by Tsai [2]. The calibration exploits the CAVE's tracking, so its accuracy depends on the accuracy of the tracking system. With the hybrid tracking systems from InterSense, the tracking, and hence the calibration, is very accurate. The registration of the video image with the head model determines the realism of the final 3D avatar. The registration is not perfect when the user moves his or her lips, since the head model is static. This registration error is not very noticeable, however, so the projected video still conveys the user's lip movement.

The camera is calibrated by tracking an LED in the tracker's coordinate system and pairing each tracked position with its corresponding location in the image. Locating the LED in the image is easily done with simple computer vision techniques that find the point of high saturation with a hue in the red region. The intrinsic and extrinsic parameters obtained from the calibration are used to place the camera in virtual space as a virtual projector. Since the head model is a reasonably accurate geometric model of the user's head, we can project the video from the camera through the virtual projector to obtain a realistic avatar. The need to segment the head from the background is eliminated, since the video is projected only onto the head model. This can be implemented efficiently in real time, as projective texture mapping is commonly available in polygon graphics hardware [3].
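The two image-side operations described above, locating the LED and projecting a model vertex through the calibrated camera to obtain a texture coordinate, might be sketched as follows. This is a minimal illustration, not the implementation used here: the function names, thresholds, and the plain pinhole model (focal lengths `fx`, `fy`, principal point `cx`, `cy`, no lens distortion) are assumptions, and a real system would perform the projection in graphics hardware.

```python
import colorsys


def find_led(image, min_sat=0.8, min_val=0.8, hue_tol=0.05):
    """Return (x, y) of the brightest, most saturated red pixel, or None.

    image is a list of rows; each pixel is an (r, g, b) tuple in 0..255.
    The thresholds are illustrative assumptions, not values from the text.
    """
    best, best_score = None, 0.0
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            # Hue is cyclic, so "red" sits at both ends of the 0..1 range.
            is_red = h <= hue_tol or h >= 1.0 - hue_tol
            if is_red and s >= min_sat and v >= min_val and s * v > best_score:
                best, best_score = (x, y), s * v
    return best


def project_to_texture(vertex, R, t, fx, fy, cx, cy, width, height):
    """Map a 3D point in tracker space to normalized image coordinates.

    R (3x3 nested lists) and t (length 3) are the extrinsics taking tracker
    space to camera space. Returns None for points behind the camera.
    Assumes an ideal pinhole camera; lens distortion is ignored.
    """
    xc = [sum(R[i][j] * vertex[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        return None
    u = fx * xc[0] / xc[2] + cx
    v = fy * xc[1] / xc[2] + cy
    # Origin is the image's top-left corner; a graphics API with a
    # bottom-left texture origin would need the second value flipped.
    return (u / width, v / height)
```

Each vertex of the head model would be passed through `project_to_texture` to look up its color in the current video frame; vertices that map outside the range 0 to 1 fall outside the camera's view.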

Transferring whole frames of video can be extremely network intensive. Sending only the rectangular portion of the video that contains the user's head reduces the network bandwidth requirements considerably. The coordinates of this portion can be computed by projecting the corners of the head model's bounding box onto the video image using the camera parameters obtained from calibration. Compressing this rectangular portion reduces the bandwidth further.
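The crop computation can be illustrated with a short sketch. It assumes an ideal pinhole camera and a bounding box already expressed in tracker space (that is, after the tracked head pose has been applied); the names `project` and `head_crop_rect` are hypothetical.

```python
import itertools
import math


def project(point, R, t, fx, fy, cx, cy):
    """Project a 3D tracker-space point to pixel coordinates (pinhole model)."""
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    return fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy


def head_crop_rect(box_min, box_max, R, t, fx, fy, cx, cy, width, height):
    """Return (left, top, right, bottom) pixel bounds enclosing the head.

    box_min and box_max are opposite corners of the head model's bounding
    box in tracker space (an assumption of this sketch).
    """
    # The 8 corners are every combination of min/max along x, y, and z.
    corners = itertools.product(*zip(box_min, box_max))
    pixels = [project(c, R, t, fx, fy, cx, cy) for c in corners]
    us = [p[0] for p in pixels]
    vs = [p[1] for p in pixels]
    # Round outward to whole pixels and clamp to the image.
    left = max(0, math.floor(min(us)))
    top = max(0, math.floor(min(vs)))
    right = min(width, math.ceil(max(us)))
    bottom = min(height, math.ceil(max(vs)))
    return left, top, right, bottom
```

Only the pixels inside the returned rectangle would need to be compressed and sent, along with the rectangle's coordinates so the receiver can place the patch in the full frame.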

With a single camera, the portion of the head facing away from the camera is not texture mapped. To overcome this problem, we use a second camera that captures video of the person from another angle; this video is projected onto the head model from the viewpoint corresponding to the second camera.
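The text does not specify how the two projections are combined on the model. One plausible rule, shown here purely as an assumption, is to texture each vertex from whichever camera its surface normal faces most directly. The function names are hypothetical.

```python
import math


def facing_score(vertex, normal, camera_pos):
    """Cosine of the angle between the surface normal and the direction
    from the vertex to the camera (1 = facing the camera head-on)."""
    to_cam = [c - p for c, p in zip(camera_pos, vertex)]
    dist = math.sqrt(sum(d * d for d in to_cam))
    nlen = math.sqrt(sum(n * n for n in normal))
    if dist == 0 or nlen == 0:
        return -1.0  # degenerate input: treat as not visible
    return sum(n * d for n, d in zip(normal, to_cam)) / (dist * nlen)


def choose_camera(vertex, normal, camera_positions):
    """Return the index of the camera that best faces the vertex,
    or None if the vertex faces away from every camera."""
    scores = [facing_score(vertex, normal, c) for c in camera_positions]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best if scores[best] > 0 else None
```

A hard per-vertex choice like this can leave a visible seam where the two textures meet; blending the two videos with weights derived from the same scores would be a natural refinement.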

The result of this method is a realistic video avatar that can easily be used in virtual environments such as the CAVE.