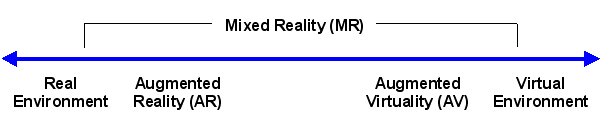

'Virtual Reality' and 'Augmented Reality' are buzzwords that can mean a lot of different things depending on who you talk to, and the line between them can be blurred.

There have been various attempts to create scales or continuums from fully real worlds to fully virtual worlds (https://en.wikipedia.org/wiki/Reality%E2%80%93virtuality_continuum), in general looking something like this:

- real environment - you are completely immersed in the natural world with minimal access to any synthetic worlds (e.g. your smartphone)

- augmented reality - you are out in the real world with some gadgets (phone, headset) that allow you to experience the real world and a synthetic world simultaneously, where the tech could range from a watch to a smartphone to a see-through head mounted display.

- virtual reality - you are completely immersed in a synthetic world with minimal access to the real world

There is a large grey area of 'mixed reality' that covers most of the space from purely real to purely virtual, and that is where we spend most of our lives. I may be walking down the street looking at a set of directions on my smartphone, or I may be sitting on my couch with some chips and a drink playing a video game on a big TV.

Let's take a look at the different elements of Virtual Reality, and how technology, in particular video games, has been moving us closer and closer to achieving it.

We are all pretty familiar with the 'pure reality' side of the spectrum, so let's start with Virtual Reality.

The key element of virtual reality is immersion ... the sense of being surrounded.

A good novel is immersive without any fancy graphics or audio hardware. You 'see' and 'hear' and 'touch' and 'taste' and 'smell' the world of the story.

A good play or a film or an opera can be immersive using only sight and sound.

But they aren't interactive, which is another key element.

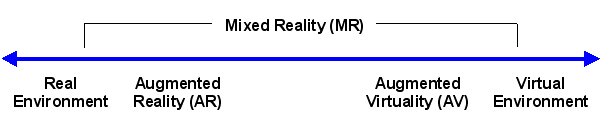

The children's 'Choose Your Own Adventure' books in the late 70s added limited interaction to books, giving the reader a handful of choices every few pages that would lead to 40 endings in the case of the first book, 'The Cave of Time', but it was computers that would run with this concept.

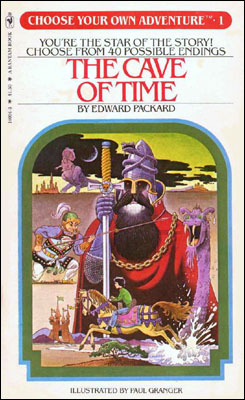

Here is a sample page (image from https://pdfcookie.com/documents).

(If you wish for more 'serious' literary context on the topic, please see https://en.wikipedia.org/wiki/Hypertext_fiction for several very serious pieces of literature with similar methods.)

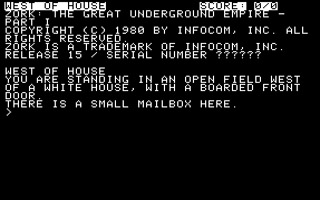

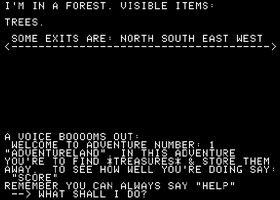

Older textual computer games from the late 70s and early 80s, such as Adventure (https://en.wikipedia.org/wiki/Colossal_Cave_Adventure), Zork (https://en.wikipedia.org/wiki/Zork), and the Scott Adams (not the Dilbert guy) adventures, are immersive and interactive and place the user within a computer-generated world, though that world was created only through text. You can play Adventure online at http://www.astrodragon.com/zplet/advent.html. You can play the personal computer version of Zork online at http://textadventures.co.uk/games/view/5zyoqrsugeopel3ffhz_vq/zork. The Scott Adams adventures are playable at http://www.freearcade.com/Zplet.jav/Scottadams.html

video: https://www.youtube.com/watch_popup?v=TNN4VPlRBJ8

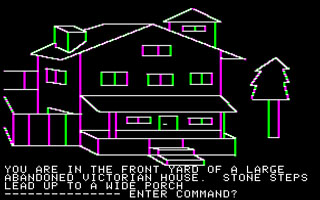

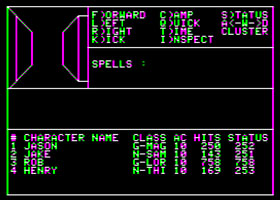

Games in the early 80s started to incorporate primitive computer graphics to go along with the text, such as Mystery House (1980) below.

video: https://www.youtube.com/watch_popup?v=asOhTnQv8PE

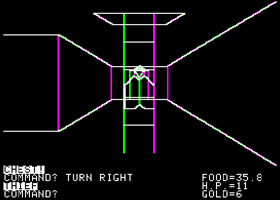

Some games even had simple first-person graphics, such as Akalabeth (1980) and Wizardry (1981), though the screen refresh rate was well short of real-time. The screen took a long time (up to several seconds) to redraw, so these games tended to be more strategy-based, on a turn-taking model.

video: https://www.youtube.com/watch_popup?v=P0jSh_MKM1M

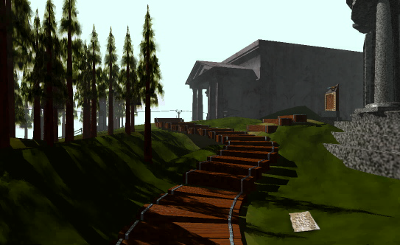

Myst in 1993 took the visuals to a whole new level, using CD-ROM storage for all of its (for the time) very realistic imagery, though you could only move between a set of fixed locations and viewpoints and use the mouse to click on objects to interact with them - https://www.youtube.com/watch_popup?v=4xEhJbeho7Q

Moving on to more modern computer games, they are immersive and interactive. They also have the advantage of running in real time at 30+ frames per second, another key element.

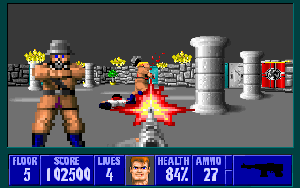

Another key element of VR is a viewer-centered perspective, where you 'see' through your own eyes as you move through a computer-generated space. Akalabeth, Wizardry, and Myst were first-person view games, though you could only look where the game allowed you to look. Modern first-person shooters and other games use this view as you move through a virtual world and interact with objects there, and more often than not kill everyone you meet. The way you see the environment is limited to a screen with a narrow angle of view, and you use a keyboard / joystick / gamepad to change your view of that scene and to interact.

One of the most successful early ones was Wolfenstein 3D from 1992 - https://www.youtube.com/watch_popup?v=NdcnQISuF_Y

(image from Wikipedia)

Of course, as time went on the visuals became better, and some games would stick with a first-person perspective while others used a third-person perspective to better show what was going on around the player.

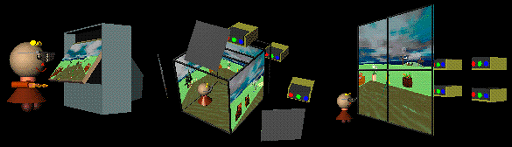

First-person or viewer-centered perspective on the left vs third-person perspective on the right, from the Jedi Knight series from the 1990s.

VR adds the concepts of head tracking, wide field of view and stereo vision.

Head tracking allows the user to look around the computer generated world by naturally moving his/her head. A wide field of view allows the computer generated world to fill the user's vision. Stereo vision gives extra cues to depth when objects in the computer generated world are within a few feet.
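Head tracking and stereo vision work together: the tracker reports where the head is, and the renderer derives a separate viewpoint for each eye by offsetting half the interpupillary distance (IPD) to either side. The sketch below is illustrative only. `eye_positions` is a hypothetical helper, and the axis convention, the yaw-only head orientation, and the 63 mm IPD are all assumptions rather than values from any particular system.

```python
import math


def eye_positions(head, yaw_rad, ipd=0.063):
    """Return (left_eye, right_eye) positions for a tracked head.

    Assumptions for this sketch: metres, a y-up right-handed frame in which
    yaw 0 means looking down -z, only yaw is applied (pitch and roll are
    ignored), and an interpupillary distance (ipd) of 63 mm.
    """
    hx, hy, hz = head
    # Unit vector pointing to the viewer's right for this yaw angle.
    rx, rz = math.cos(yaw_rad), -math.sin(yaw_rad)
    half = ipd / 2.0
    left_eye = (hx - rx * half, hy, hz - rz * half)
    right_eye = (hx + rx * half, hy, hz + rz * half)
    return left_eye, right_eye


# Viewer standing at the origin with eyes 1.7 m up, facing down -z.
left_eye, right_eye = eye_positions((0.0, 1.7, 0.0), yaw_rad=0.0)
print(left_eye, right_eye)
```

Each eye then gets its own rendering of the scene from its own position. The small horizontal difference between those two images is what the brain uses as a depth cue for nearby objects.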

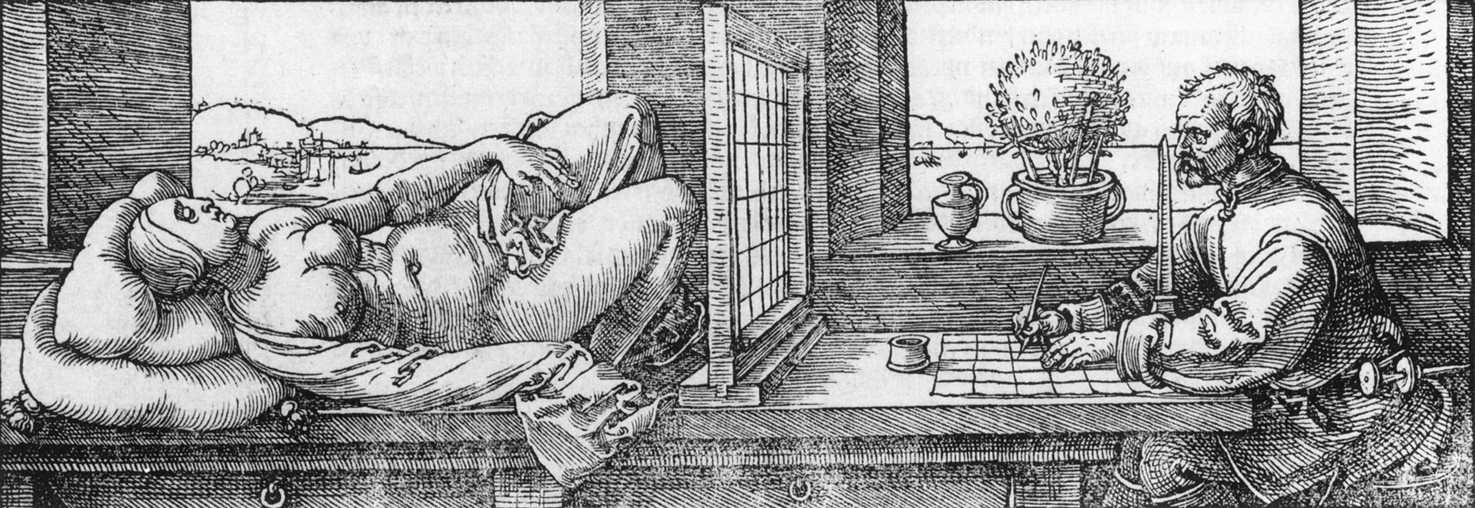

As Dan Sandin, original co-director of evl, likes to say, VR gives us the first re-definition of perspective since the Renaissance in the 16th century. Instead of having a fixed point for the viewer, as denoted by the obelisk, the user is free to move around, with the perspective changing as he/she does so.

Albrecht Dürer - Draughtsman Drawing a Recumbent Woman (1525), woodcut illustration from 'The Teaching of Measurements.'
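In code, the 'moving viewer' amounts to recomputing the viewing frustum every frame from the tracked eye position relative to a fixed screen. This makes the frustum asymmetric ('off-axis') whenever the viewer is not centred. The following is a minimal sketch that assumes a flat screen centred on the origin in the z = 0 plane, with the viewer standing in front of it. `off_axis_frustum` is a hypothetical name. The four bounds it returns are in the form taken by OpenGL's legacy `glFrustum(left, right, bottom, top, near, far)`.

```python
def off_axis_frustum(eye, screen_w, screen_h, near):
    """Near-plane frustum bounds (left, right, bottom, top) for a tracked eye.

    Assumes a flat screen of size screen_w x screen_h centred on the origin
    in the z = 0 plane, with the eye at (ex, ey, ez), ez > 0, looking toward
    -z. The matching view transform is then a translation by -eye.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("the eye must be in front of the screen (ez > 0)")
    # Similar triangles: scale each screen edge, measured relative to the
    # eye, from the screen distance ez back to the near-plane distance.
    scale = near / ez
    left = (-screen_w / 2 - ex) * scale
    right = (screen_w / 2 - ex) * scale
    bottom = (-screen_h / 2 - ey) * scale
    top = (screen_h / 2 - ey) * scale
    return left, right, bottom, top


# A 3 m x 3 m wall, viewer 2 m away: first centred, then 1 m to the right.
print(off_axis_frustum((0.0, 0.0, 2.0), 3.0, 3.0, near=0.1))  # symmetric
print(off_axis_frustum((1.0, 0.0, 2.0), 3.0, 3.0, near=0.1))  # asymmetric
```

Stepping 1 m to the right shifts the frustum so that more of it lies to the viewer's left. That is exactly the perspective change a single fixed viewpoint cannot provide.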

Natural interaction is also important in VR. If you want to reach out and touch a virtual object, then tracking the user's hands lets you do that, rather than using a keyboard or gamepad to 'tell' your virtual representation to interact.

One of the best examples of this from the current crop of VR games is Job Simulator, which gives you a small environment to walk around in, such as a cubicle, with a lot of different things to interact with using both hands.

https://www.youtube.com/watch_popup?v=QRpL6gRbQO8

Audio also plays a very important role in immersion (try watching a modern Hollywood film without its musical score), and haptic (touch) feedback can provide important cues while in smaller immersive spaces.

And there is some work in trying to deal with smell (the HITLab in the late 90s, and more recently Yasuyuki Yanagida, Advanced Telecommunications Research Institute, Kyoto) and taste (Hiroo Iwata, University of Tsukuba).

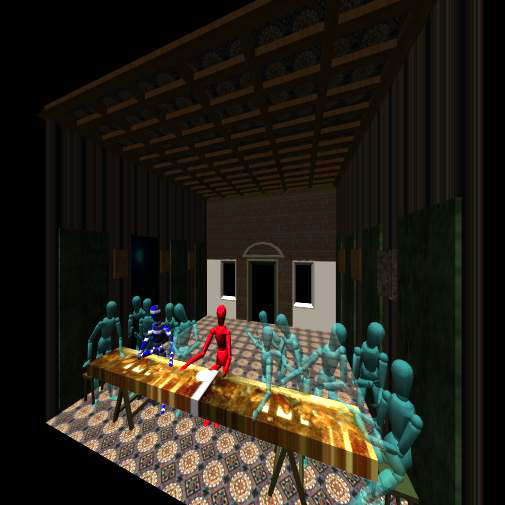

So here is a picture (from a video) that puts a lot of this together in a more serious use of VR ... Randy Smith of General Motors in their CAVE in the mid 1990s, looking at different instrument cluster layouts for cars GM is designing. Randy is real. The car seat Randy is sitting in is real. The rest is computer generated, including the remote passenger joining Randy from another location. If you have been in an automobile, truck, or airplane built since the mid 1990s, VR was used as part of its design process.

In this case the camera taking the photo is tracked, so the perspective is correct for us, the viewer. Note that the crew of 'The Mandalorian' would rediscover this technique 25 years later to integrate actors into their virtual sets.

Augmented Reality has a very similar feature set, but whereas Virtual Reality is usually set up in controlled settings (typically indoors within a fixed space where you can set up and calibrate tracking systems, and where you have access to power for the computers that drive the graphics), augmented reality usually takes place out in the real world, where tracking is less accurate and both electrical power and computational power need to be portable.

Augmented reality has the additional constraint that the synthetic world it is creating must match up with the real world in real time.

Better batteries help make power more available, and access to local phones that have access to cloud computing resources can help offload the weight and the computation, but accurate tracking is still difficult. At some minimal levels of AR, where I want to know the weather, I probably only need accuracy down to the city level. If I want to know where the closest coffee shop is, then I need accuracy down to the block level. If I want to see what power lines are running under the street, or the names of the people who are walking past me, then I need much more accuracy and access to data at a much faster rate.

The idea of creating virtual or augmented realities isn't new, and the desire to experience these immersive worlds isn't new either.

1580s - Giambattista della Porta develops the 'Pepper's Ghost' illusion; it is rediscovered in 1862 by Henry Dircks and John Pepper. It has recently been rediscovered yet again to produce 'holographic' concert performances (link), and there is a short video with some history and explanations here.

1793 - Fixed 360 degree panoramas painted on the walls - Robert Barker in Leicester Square, London - link

(Image from the British Library)

1840s - Moving panoramas painted on large rolled cotton sheets - John Banvard's Mississippi Panoramas - 3.6m (12 feet) high and 800m (2600 ft) long - link

A smaller one by John Egan, 7.5' high and 348' long, survives (think of a 185" diagonal TV back in the 1840s), with a video recreation at https://www.youtube.com/watch_popup?time_continue=25&v=uoqVfDEm5Rk

There was a 50 foot (15 m) tall and 400 foot (122 m) long 'cyclorama' of the 1871 Chicago fire installed downtown from 1892 to 1893.

https://chicagology.com/chicago-fire/fire031/

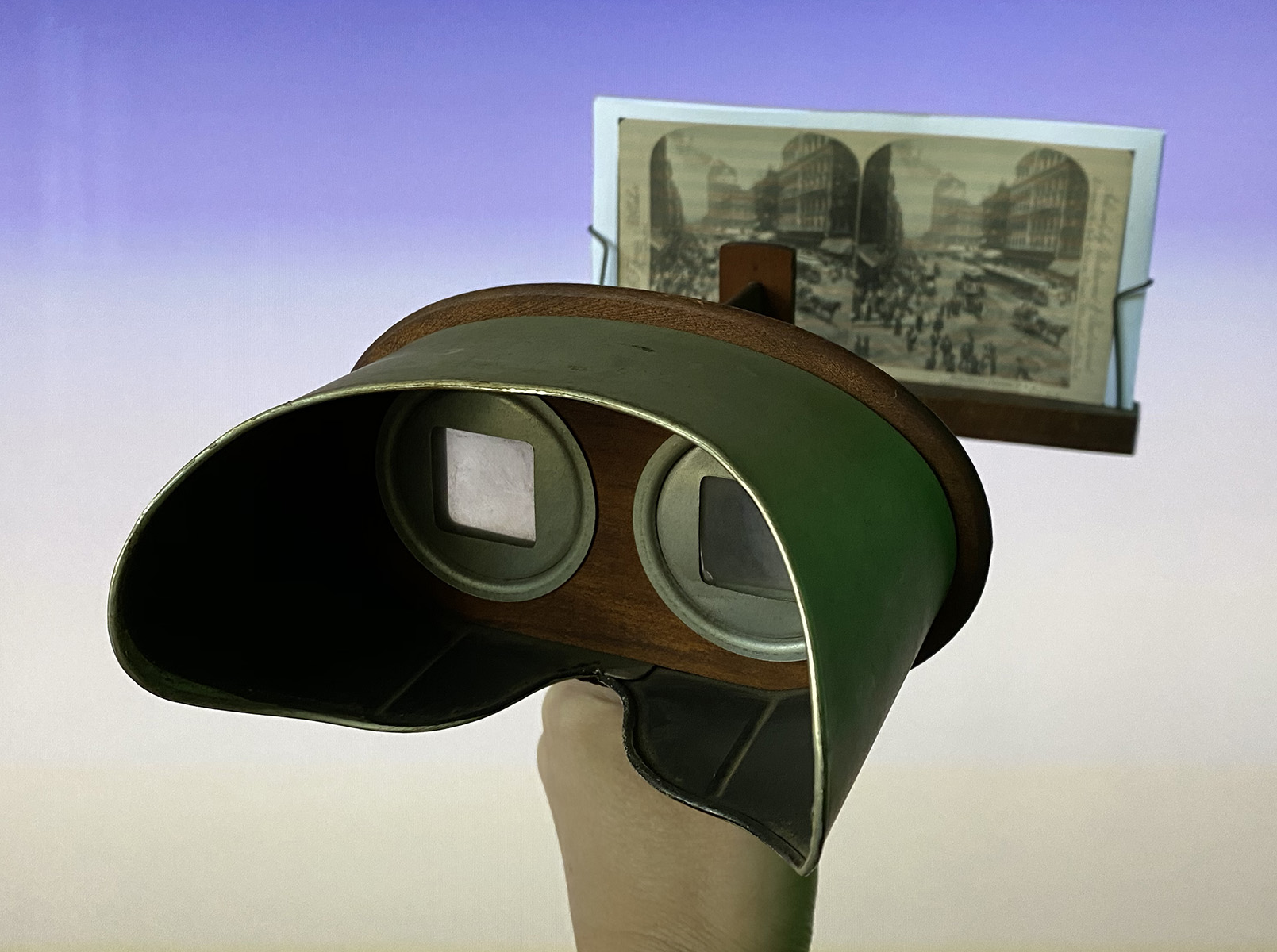

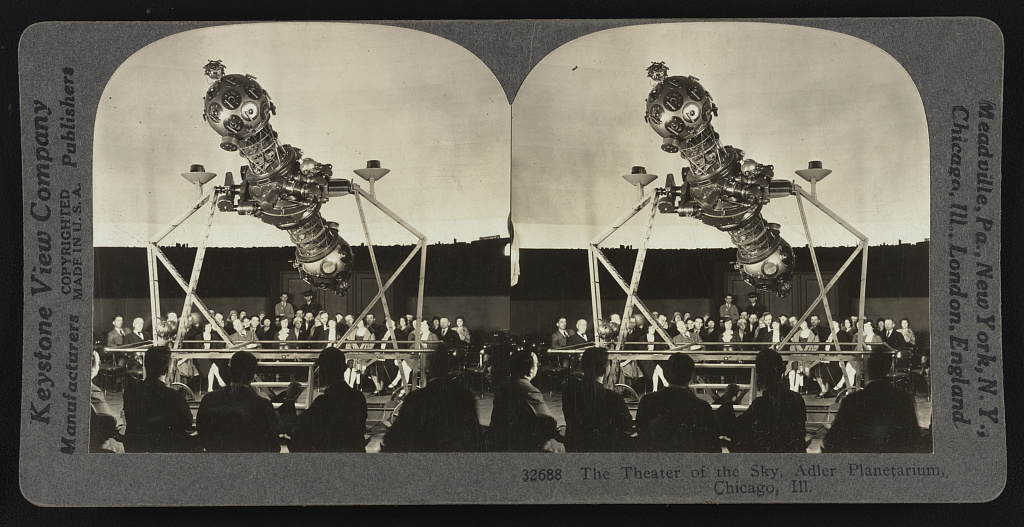

1800s - Stereoscope - https://en.wikipedia.org/wiki/Stereoscope

The US Library of Congress has a nice collection of stereographs, including quite a number of them taken in Chicago - https://www.loc.gov/photos/?fa=online-format:image%7Cpartof:stereograph+cards&q=stereograph

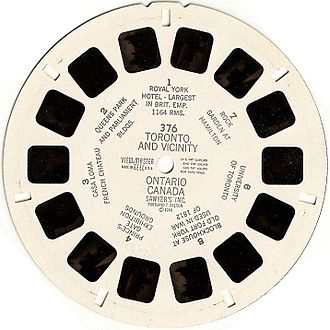

The stereoscope viewed single pairs of images mounted on cards (sometimes glass) that could be swapped in and out. The View-Master used discs containing 7 sets of paired slide images, and the viewer could use a lever on the side to cycle between the images without needing to take their eyes away from the viewer.

(Stereoscope image from the US Library of Congress. View-Master reel from https://en.wikipedia.org/wiki/View-Master)

The 2010 version (my3D) used an iPhone as the display device, which allowed content, including 3D video and audio, to be delivered over the web through an app. The device included finger holes for interaction and a hole for the iPhone's camera. Google would use a similar idea in 2014 to make Google Cardboard.

Literature would quickly start to see the possibilities ...

1901 - The Master Key: An Electrical Fairy Tale, Founded Upon the Mysteries of Electricity and the Optimism of Its Devotees by L. Frank Baum (who had published The Wonderful Wizard of Oz the year before) proposed a set of 'character marker' eyeglasses that, when you looked at a person, showed the character of that person overlaid on their forehead (G for good, K for kind, etc.)

http://www.gutenberg.org/files/436/436-h/436-h.htm

1935 - Pygmalion's Spectacles by Stanley Weinbaum describes a pair of spectacles that give a virtual reality experience: "But listen - a movie that gives one sight and sound. Suppose now I add taste, smell, even touch, if your interest is taken by the story. Suppose I make it so that you are in the story, you speak to the shadows, and the shadows reply, and instead of being on a screen, the story is all about you, and you are in it." It also voices VR's common critique: "Fools! I bring it here to sell to Westman, the camera people, and what do they say? 'It isn't clear. Only one person can use it at a time. It's too expensive.' Fools! Fools!"

http://www.gutenberg.org/files/22893/22893-h/22893-h.htm

1950 - 'The Veldt', a short story by Ray Bradbury, contains an educational nursery able to recreate any place

1955 - Circarama, part of Tomorrowland at Disneyland, with 11 projectors showing a 360 degree film captured by 11 cameras that were sometimes mounted on a car. This is basically what Google does for Street View today, but back in the 1950s with film cameras.

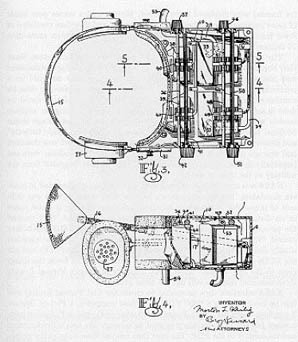

1960 - Morton Heilig

Sensorama - https://www.youtube.com/watch_popup?v=vSINEBZNCks

(image from http://www.mortonheilig.com/InventorVR.html)

Patent for the first HMD - the Telesphere Mask - for 3D video

(image from http://accad.osu.edu/~waynec/history/lesson17.html)

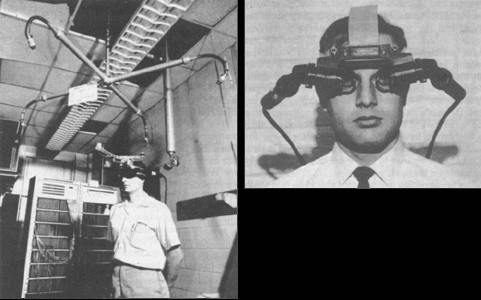

1965 - Ivan Sutherland - University of Utah

1966 - Ivan Sutherland

(image from http://accad.osu.edu/~waynec/history/tree/images/hmd.JPG)

1967 - Fred Brooks - University of North Carolina

1973 - The Recreation Room (later called the Holodeck) in Star Trek: The Animated Series

Mid 70s - mid 80s - Myron Krueger

(image from http://resumbrae.com/ub/dms424/05/01.html)

1977 - Richard Sayre, Dan Sandin, Tom DeFanti - UIC

1979 - Eric Howlett

1982 - Thomas Furness III

1984 - Michael McGreevy and friends

1985 - Jaron Lanier - VPL research

1986 - Kazuo Yoshinaka - NEC

1989 - Autodesk

1989 - Fake Space Labs

(image from: http://www.fakespacelabs.com/tools.html)

1990 - A nice short BBC segment on the state of the art at the time - https://www.youtube.com/watch?v=T2CYLlSn1gA

1991 - Virtuality - https://en.wikipedia.org/wiki/Virtuality_(gaming)

1992 - Carolina Cruz-Neira, Dan Sandin, Tom DeFanti, et al - Electronic Visualization Laboratory, UIC

1992 - Tom Caudell - Boeing

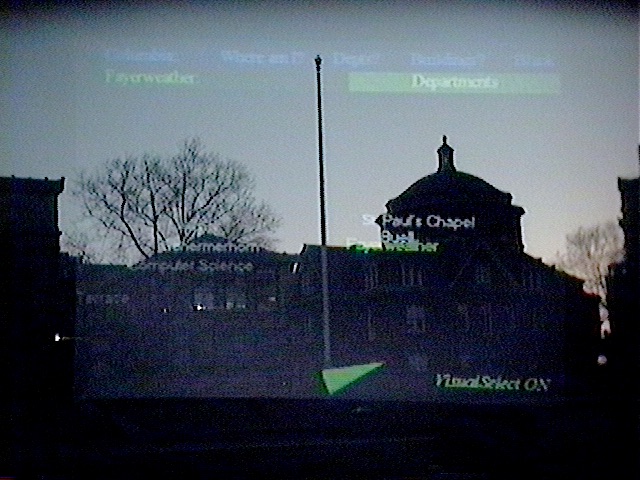

1992 - Steve Feiner and friends - Columbia University

1993 - GMD - German National Research Center for Information Technology

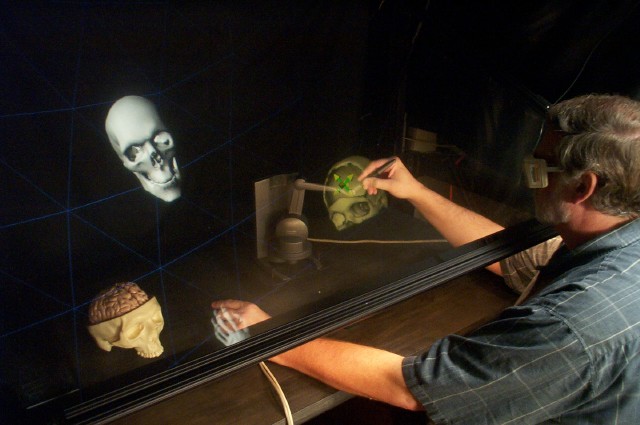

1993 - SensAble Technology

Mid 90s - Steve Mann - MIT

1996 - Maurice Benayoun - Ars Electronica

1998 - TAN / Royal Institute of Technology in Stockholm

1998 - Electronic Visualization Laboratory, UIC

1999 - Mark Billinghurst - HITLab at University of Washington

2000 - Hong Hua - University of Arizona

2013 - Google Glass

2014 - Oculus / Vive / Gear

2014 - Google Cardboard

2016 - Microsoft

2017 - Dell, Asus and others release their Microsoft mixed reality based headsets ($300) with 'inside out' tracking, where the hand-held controllers are tracked from the HMD with mixed success

https://www.theverge.com/2017/8/28/16202464/dell-visor-windows-mixed-reality-headset-pricing-release-announced

https://www.engadget.com/2017/09/03/asus-mixed-reality-headset-ifa-2017/

https://techcrunch.com/2017/08/28/microsofts-mixed-reality-headsets-are-a-bit-of-a-mixed-bag/

2018 - Magic Leap AR display Dev Kits released ($2,300 with no PC or tether).

https://www.magicleap.com/

https://www.youtube.com/watch_popup?v=Vrq2akzdFq8

2019 - Oculus releases Oculus Quest as a $400 stand-alone device using smart phone level technology with 'inside out' tracking and two controllers, that is somewhat under-powered but works really well and is very easy to set up. In 2020 Oculus Quest 2 (now Meta Quest 2) was released as a more powerful version of the Quest with improved graphics and hand tracking, which would again be updated in 2023 as the Meta Quest 3.

2024? Apple is expected to release their Apple Vision Pro AR headset.

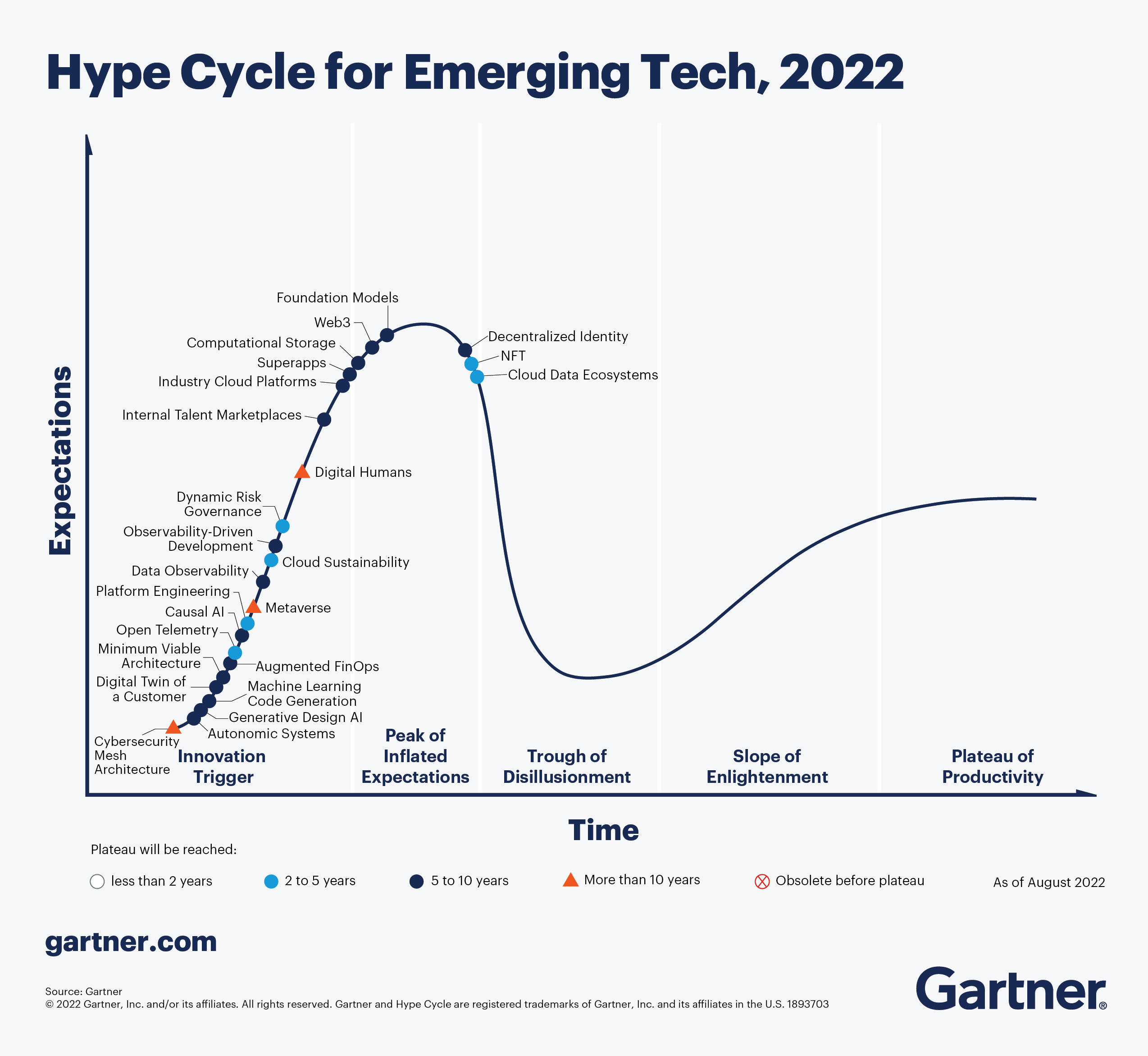

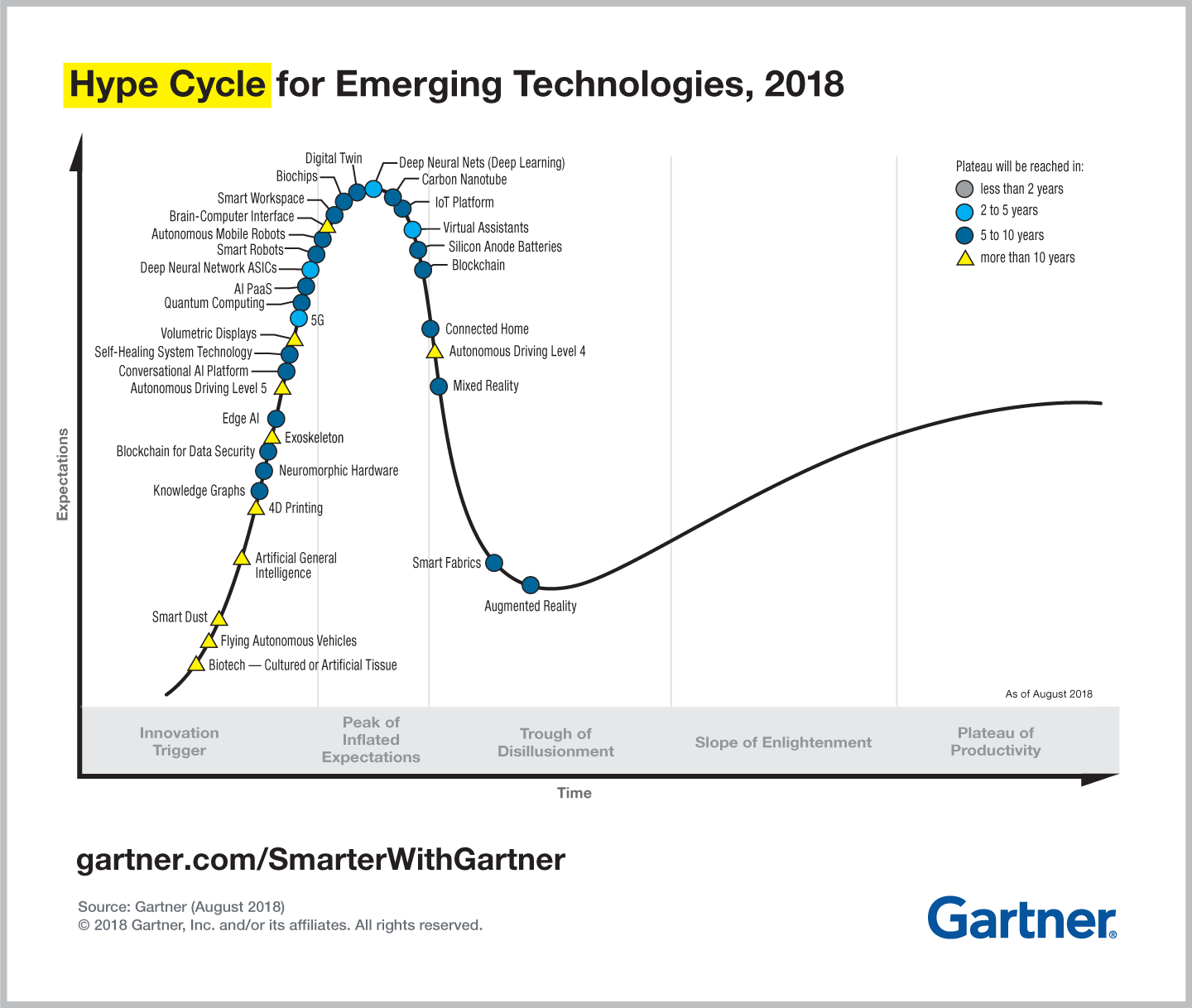

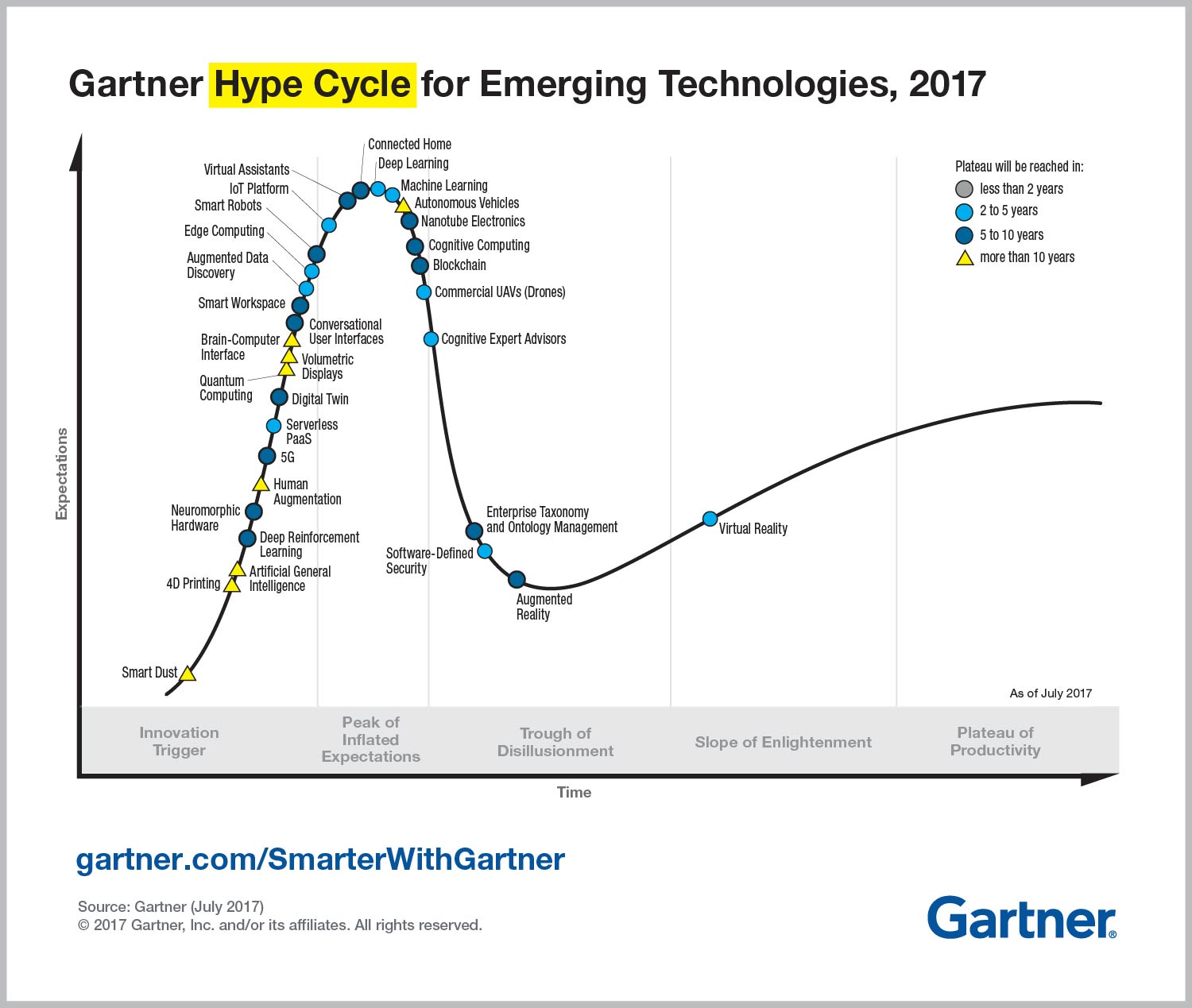

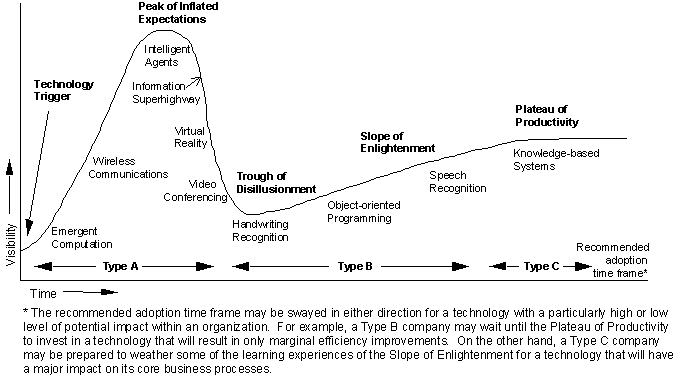

VR has gone through several hype phases, with the biggest being in the mid 80s and mid 90s. With the release of low cost headsets we are in the midst of another VR hype phase, now termed 'the Metaverse', while AR is in its first hype phase.

As of 2019 both VR and AR have made it past the Trough of Disillusionment and can be considered 'mature' technologies.

As of 2018 Virtual Reality had made it onto the Plateau of Productivity, but AR was still deep in the trough, while in 2017 VR was still on the Slope of Enlightenment and AR was headed into the depths of the trough.

(image from https://www.gartner.com/smarterwithgartner/5-trends-emerge-in-gartner-hype-cycle-for-emerging-technologies-2018/)

Back in 1995, when the first of these charts came out, VR was just sliding down into the Trough of Disillusionment.

(image from https://www.gartner.com/doc/484424/gartners-hype-cycle-special-report#1169528434)

For large format based systems, some companies that sell these things are:

For Head Mounted Displays, the previous generation of $10,000 - $20,000 displays by companies like NVIS have mostly been supplanted by a new generation of low cost sub $1000 gaming-related displays:

Instead of totally isolating the user from the real world, Augmented Reality displays overlay computer graphics onto the real world, with devices like Google Glass, the Microsoft HoloLens, and the Magic Leap.

There are also other interesting solutions that have been in development for a couple of decades, such as the Virtual Retinal Display.

And to some extent your smartphone or tablet, with its GPS, camera, and gyroscope, already acts as an AR display.

There is quite a bit of work going on in various research labs in VR. New devices are being created, new application areas are being worked on, new interaction techniques are being explored, and user studies are being performed to see if any of these are valuable. What is much harder is getting the technology and the applications out of the research lab and into real use at other sites; getting beyond the 'demo' stage to the 'practical use' stage is still very difficult.

I'm going to give a brief overview here, and then we will go into each of these areas in more detail in the coming weeks.

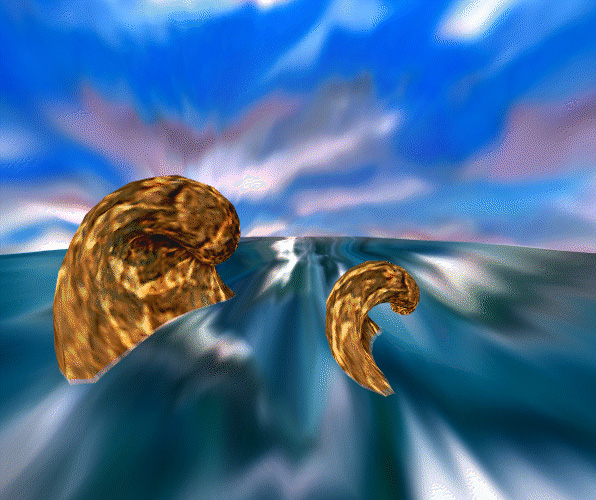

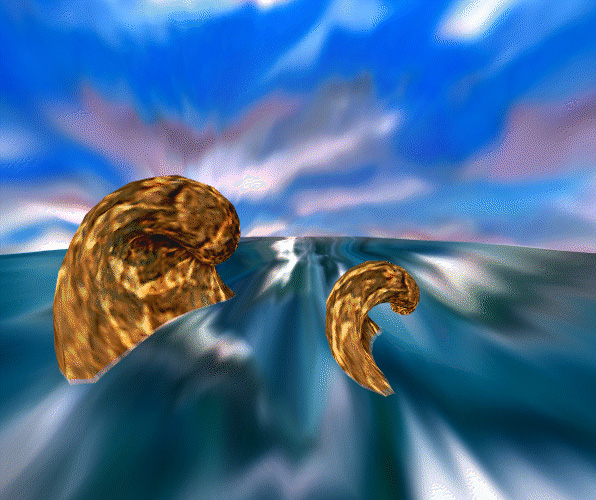

For virtual reality it is important to note that the goal is not always to recreate reality.

Computers are capable of creating very realistic images, but it can take a lot of time to do that. In VR we want at a bare minimum 20 frames per second, and preferably 60+ in stereo.

For comparison:

The trade-off is image quality (especially in the areas of smoothness of polygons, anti-aliasing, lighting effects, and transparency) vs speed of rendering. In some cases, like General Motors, they sacrifice frame rate (frames per second) for better visual quality.

Gamers tend to want much higher frame rates than people watching TV / movies / YouTube videos.
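The frame-rate targets above translate directly into a time budget per image. This is a minimal sketch that assumes the two eye views of a stereo frame are rendered one after the other and share the frame time equally (real engines can share some work between the eyes). `per_view_budget_ms` is a hypothetical helper.

```python
def per_view_budget_ms(fps: float, views: int = 1) -> float:
    """Milliseconds available for each rendered view at a target frame rate.

    Assumes the `views` images of one frame (1 for mono, 2 for stereo) are
    rendered one after another and split the frame time equally.
    """
    if fps <= 0 or views < 1:
        raise ValueError("fps must be positive and views must be at least 1")
    return 1000.0 / fps / views


for fps in (20, 30, 60, 90):
    print(f"{fps:3d} fps: {per_view_budget_ms(fps):5.1f} ms per frame, "
          f"{per_view_budget_ms(fps, views=2):5.1f} ms per eye in stereo")
```

At 20 fps there are 50 ms per frame. At 60 fps in stereo, there are roughly 8.3 ms per eye. Lowering the frame rate, as in the General Motors example, buys more time per image for visual quality.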

In AR we are typically not covering the entire field of view of the user, so the rendering requirements are lower. However, there is a greater need for better compositing between the real and the synthetic (e.g. based on lighting conditions) and for faster graphics updating.

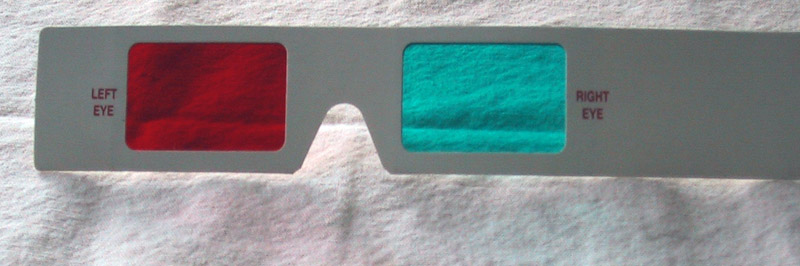

If we want stereo visuals then we need a way to show a slightly different image to each eye simultaneously. The person's brain then fuses these two images into a stereo image.

One way is to isolate the user's eyes (as in an HMD or BOOM) and feed a separate signal to each eye. This can be done with 2 display devices, where each eye watches its own independent display (as in older HMDs). It can also be done with a single wide display: the left and right eye views are rendered onto the same screen, and the optics make sure each eye can only see its appropriate half of the display (as in current less expensive HMDs).

Another way is to show the imagery on a larger surface and then filter which part of the image the user sees. There are several different ways to do this.

We can use polarization (linear or circular) - polarization was used in 3D theatrical films in the 1950s and 1980s and in the current generation. One projector is polarized in one direction to show images for the left eye, and the other projector is polarized in the other direction to show images for the right eye. Both images are shown on the same screen, and the user wears lightweight glasses to disambiguate them.

This same technology can be used on televisions by adding a polarized film in front of the display, where even lines are polarized in one direction and odd lines are polarized in the other direction. The user only sees half of the resolution of the display with each eye. This is the technology we use in CAVE2.
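The line-interleaved approach can be illustrated by composing one display frame from the two eye images. This is a minimal sketch that assumes images stored as lists of rows and assumes even rows go to the left eye. `row_interleave` is a hypothetical helper, and the parity assignment on a real panel may be the reverse.

```python
def row_interleave(left_img, right_img):
    """Build one frame for a line-interleaved polarized display.

    Images are lists of rows of equal size. Even rows (0, 2, 4, ...) are taken
    from the left-eye image and odd rows from the right-eye image; which
    parity belongs to which eye depends on the particular panel (assumed here).
    """
    if len(left_img) != len(right_img):
        raise ValueError("both eye images need the same number of rows")
    return [list(l_row) if y % 2 == 0 else list(r_row)
            for y, (l_row, r_row) in enumerate(zip(left_img, right_img))]


left = [["L"] * 4 for _ in range(4)]
right = [["R"] * 4 for _ in range(4)]
for row in row_interleave(left, right):
    print("".join(row))
```

Each eye ends up with only every other row, which is where the halving of resolution per eye comes from.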

We can use colour - this has been done for cheaper presentation of 3D theatrical films since the 50s with red and blue (cyan) glasses, as you only need a single projector or a standard TV. It doesn't work well with colour, and it is somewhat headache-inducing after an hour.
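A red/cyan anaglyph can be built by taking different colour channels from each eye's image. This is a minimal sketch that assumes RGB pixels as `(r, g, b)` tuples and assumes the red lens is over the left eye. `anaglyph` is a hypothetical helper.

```python
def anaglyph(left_img, right_img):
    """Red/cyan anaglyph from two equal-size RGB images.

    Images are lists of rows of (r, g, b) tuples. Assumes the red filter is
    over the left eye: the red channel comes from the left-eye image and the
    green and blue channels come from the right-eye image.
    """
    return [[(l_px[0], r_px[1], r_px[2]) for l_px, r_px in zip(l_row, r_row)]
            for l_row, r_row in zip(left_img, right_img)]


# One pixel per eye: red from the left image, green and blue from the right.
print(anaglyph([[(200, 40, 40)]], [[(180, 60, 70)]]))
```

Each eye receives only part of the colour spectrum, so strongly coloured objects look different to each eye. This is one reason colour reproduction suffers.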

We can use time - this was common in VR in the 90s and the 00s, as in the original CAVE. Here we show the left eye image for a given frame, then the right eye image for the same frame, then move on to the next frame. The user wears LCD shutter glasses, which ensure that only the correct eye sees the correct image by going opaque on the eye that should be seeing nothing. These glasses used to cost over $1000 each in the early 90s. They were the basis for the early 3D televisions, by which time they cost around $100 per pair. Now they are down to $30 per pair.
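The time-multiplexed scheme can be sketched as a schedule of display slots. This minimal illustration assumes a display that can alternate eye images on every refresh. `shutter_schedule` is a hypothetical helper, and the 120 Hz rate is just an example value, not a claim about the original CAVE.

```python
def shutter_schedule(display_hz, n_frames):
    """Yield (time_s, frame, eye) for frame-sequential (active) stereo.

    Each scene frame occupies two consecutive display slots, left-eye image
    first and then right-eye image, while the glasses make the other lens
    opaque. Each eye therefore receives display_hz / 2 images per second.
    """
    slot = 1.0 / display_hz
    for frame in range(n_frames):
        for k, eye in enumerate(("left", "right")):
            yield (2 * frame + k) * slot, frame, eye


# Example rate only: a 120 Hz display gives each eye 60 images per second.
for t, frame, eye in shutter_schedule(120, 2):
    other = "right" if eye == "left" else "left"
    print(f"{t * 1000:6.2f} ms  frame {frame}  show {eye:5s}  ({other} lens opaque)")
```

The key consequence is that each eye gets only half the display's refresh rate. The display therefore has to run at twice the desired per-eye rate.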

In all these cases both eyes are focusing at a specific distance: wherever the screen is located. There is no way for the user to change focus, bringing parts of the scene into focus and letting others go out of focus as in the real world.

"people hate helmets, but people like sunglasses"

ergonomics and health issues of various displays

Typically, in museums and other places with many visitors, it is necessary to either give the glasses away (with the paper ones) or wash them (with the polarizing ones) to keep things sanitary. This is more difficult with HMDs and AR headwear, where people have tried using alcohol wipes.

People typically don't spend all day in VR, but people may spend all day in AR. VR also tends to be done in private, whereas AR is more often done outside. AR headwear has to be light and unobtrusive but still be able to operate. The entire computation system may be in the headgear as well, or some of it may be offloaded to a smartphone or to the cloud. Google Glass was one light solution. Headwear for bikers like the Solos (http://www.solos-wearables.com/) is another, as is the Microsoft HoloLens.


Need a computer capable of driving the display device at a fast enough rate to maintain the illusion.

In the past (i.e. the 90s) that usually meant either simple scenes, very specialized graphics hardware, or a lot of work optimizing the software. This is less true today: scenes are getting more complex, the hardware more commonplace, and the software more capable, mostly thanks to the video-game industry.

Benchmarks on CPUs and graphics cards aren't really very meaningful. They can give ballpark figures but there are a lot of factors that combine to give the overall speed/quality of the virtual environment.

Multiple processors are usually required, since there tend to be multiple simultaneous jobs to be performed - i.e. generating the graphics, handling the audio, synchronizing with network events.

Multiple graphics engines are pretty much required if you have multiple display surfaces

Ability to 'pipeline' the graphics is pretty much required

With a very fast network it is possible to render the graphics remotely on a more powerful computer and just use the local display as a receiver.

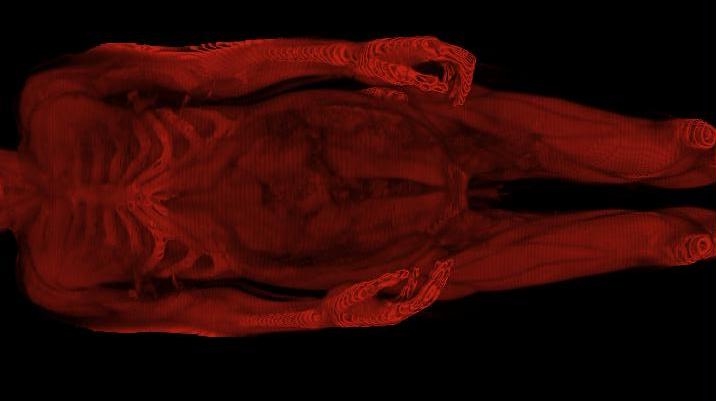

At minimum you want to track the position (x, y, z) and orientation (roll, pitch, yaw) of the user's head - 6 degrees of freedom.

You often want to track more than that - 1 or 2 hands, legs?, full body?

How accurate is the tracking?

How far can the user move - what size area must the tracker cover?

Can line of sight between the tracker and the sensors be guaranteed, as many tracking systems require?

What kinds of latencies are acceptable?
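A tracker sample for the head (or a hand) can be represented as a small record holding the six degrees of freedom. This is a minimal sketch. `Pose6DOF` is a hypothetical type, and its units and axis conventions are assumptions, since each real tracking system defines its own.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DOF:
    """One tracker sample: position (x, y, z) plus orientation (roll, pitch, yaw).

    Assumed conventions for this sketch: metres and radians, y up, yaw about
    the y axis, pitch about the x axis, and yaw = pitch = 0 looking down -z.
    Real trackers each define their own axes and units.
    """
    x: float
    y: float
    z: float
    roll: float
    pitch: float
    yaw: float
    timestamp: float = 0.0  # seconds; comparing with display time exposes latency

    def forward(self):
        """Unit view direction from yaw and pitch (roll spins around this axis)."""
        cp = math.cos(self.pitch)
        return (-math.sin(self.yaw) * cp, math.sin(self.pitch), -math.cos(self.yaw) * cp)


# Tracking more than the head just means more poses per sample.
tracked = {
    "head": Pose6DOF(0.0, 1.7, 0.0, 0.0, 0.0, 0.0),
    "right_hand": Pose6DOF(0.3, 1.2, -0.4, 0.0, -0.5, 0.2),
}
print(tracked["head"].forward())
```

A timestamp on each sample is one simple way to measure latency: compare when a pose was sampled with when the frame that used it reached the display.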

Input devices are perhaps the most interesting area in VR research. While the user can move their head 'naturally' to look around, how does the user navigate through the environment or interact with the things found there?

Ambient sounds are useful to increase the believability of a space

Sounds are useful as a feedback mechanism

Important in collaborative applications to relay voice between the various participants

Spatialized 3D sound can be very useful
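Full 3D spatialization typically relies on head-related transfer functions (HRTFs) or multi-speaker arrays. The sketch below is much cruder: it only shows how a tracked listener's position and heading can drive left/right loudness. `stereo_gains` is a hypothetical helper. The constant-power panning law, the 1/distance falloff, and the axis conventions are all assumptions chosen for simplicity.

```python
import math


def stereo_gains(listener_pos, listener_yaw, source_pos, ref_dist=1.0):
    """Left/right gains for a point source heard by a tracked listener.

    Crude sketch, not HRTF-based: constant-power panning by the source's
    horizontal angle, plus 1/distance attenuation beyond ref_dist. Assumes
    metres, y up, and yaw 0 looking down -z; height (y) is ignored.
    """
    dx = source_pos[0] - listener_pos[0]
    dz = source_pos[2] - listener_pos[2]
    dist = math.hypot(dx, dz)
    # Listener's right and forward unit vectors in the horizontal plane.
    right_x, right_z = math.cos(listener_yaw), -math.sin(listener_yaw)
    fwd_x, fwd_z = -math.sin(listener_yaw), -math.cos(listener_yaw)
    # Azimuth: 0 straight ahead, +pi/2 to the right, -pi/2 to the left.
    azimuth = math.atan2(dx * right_x + dz * right_z, dx * fwd_x + dz * fwd_z)
    # Two-channel panning cannot tell front from back, so mirror rear sources forward.
    if abs(azimuth) > math.pi / 2:
        azimuth = math.copysign(math.pi, azimuth) - azimuth
    pan = (azimuth + math.pi / 2) / math.pi  # 0 = hard left, 1 = hard right
    gain = ref_dist / max(dist, ref_dist)
    return math.cos(pan * math.pi / 2) * gain, math.sin(pan * math.pi / 2) * gain


print(stereo_gains((0, 0, 0), 0.0, (0, 0, -1)))  # ahead: equal gains
print(stereo_gains((0, 0, 0), 0.0, (2, 0, 0)))   # right, 2 m away: right only, halved
```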

Often useful to network a VR world to other computers.

We need high bandwidth networking for moving large amounts of data around, but even more important than that, we need Quality of Service guarantees, especially in regard to latency and even more especially in regard to jitter.
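Latency and jitter are different things. A link can have a long but constant delay (zero jitter) or a short but varying one. A common way to estimate jitter is the interarrival jitter calculation from RFC 3550 (RTP). It is sketched here in seconds rather than RTP timestamp units. `interarrival_jitter` is a hypothetical helper, and the sample times are made up for illustration.

```python
def interarrival_jitter(send_times, recv_times):
    """Smoothed jitter estimate in the style of RFC 3550 (RTP), in seconds.

    For consecutive packets i-1 and i, D is how much the one-way transit time
    changed; the estimate moves 1/16 of the way toward |D| for each packet.
    A constant delay, however large, contributes no jitter.
    """
    if len(send_times) != len(recv_times):
        raise ValueError("need one receive time per send time")
    jitter = 0.0
    for i in range(1, len(send_times)):
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16.0
    return jitter


sent = [0.00, 0.01, 0.02, 0.03, 0.04]
steady = [t + 0.100 for t in sent]          # 100 ms latency, no variation
uneven = [0.03, 0.05, 0.09, 0.06, 0.10]     # lower latency, but it varies
print(interarrival_jitter(sent, steady), interarrival_jitter(sent, uneven))
```

The steady link has more latency but essentially no jitter. For an interactive shared world, the uneven link is often the one that feels worse.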

Photo of the classic evl CAVE from the early 90s, with four 1-megapixel screens and active (shutter glasses) stereo, typically giving 2 megapixels to each eye (depending on where you stand), a 10' by 10' area to move in, and magnetic tracking for the head and one controller. Total cost was around $1,000,000 in 1991 dollars (about $2,000,000 in 2020 dollars), with about $500,000 of that 1991 price going to the refrigerator-sized computers that drove it.

To put this hardware into context, in 1991 we had

In 2017 the VIVE could send roughly 1 megapixel to each eye and gave the user a similar space to walk around in. It used infrared laser-based (Lighthouse) tracking for the head and two controllers, and cost about $2,500 including the computer.

If you don't mind lower quality visuals, in 2019 the Oculus Quest gave the user a similar experience for $500 with no additional hardware needed.

Vision / Visuals and Audio