The primary purpose of tracking is to update the visual display based on the viewer's head position and orientation.

Ideally we would track the user's eyes directly, and while that is possible, it is still cumbersome and not necessary for a decent experience. Instead, we track the position and orientation of the user's head. From this we determine the position and orientation of the user's eyes, assuming they are looking straight ahead, which is true much of the time.
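As a rough sketch of that last step, the eye positions can be derived from the tracked head pose by rotating a fixed head-local offset into world space. The interpupillary distance, the axis conventions, and the function names below are assumptions for illustration, not part of any particular tracking API.

```python
import math

IPD = 0.063  # assumed interpupillary distance in meters


def yaw_matrix(yaw):
    """Rotation about the vertical (y) axis by `yaw` radians."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def transform(rotation, offset, position):
    """Rotate a head-local offset into world space and add the head position."""
    return tuple(
        sum(rotation[r][c] * offset[c] for c in range(3)) + position[r]
        for r in range(3)
    )


def eye_positions(head_position, head_rotation, ipd=IPD):
    """Return (left_eye, right_eye) in world coordinates.

    Assumes the tracked point sits midway between the eyes and that
    head-local +x points to the user's right.
    """
    left = transform(head_rotation, (-ipd / 2, 0.0, 0.0), head_position)
    right = transform(head_rotation, (ipd / 2, 0.0, 0.0), head_position)
    return left, right


# A user standing at the origin, 1.7 m tall, facing straight ahead.
left_eye, right_eye = eye_positions((0.0, 1.7, 0.0), yaw_matrix(0.0))
```

A real tracker usually reports a point on the headset rather than the midpoint between the eyes, so an extra fixed offset would be added in the same way.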

We may also be tracking the user's hand(s), fingers, feet, legs, or handheld objects.

Want tracking to be as 'invisible' as possible to the user.

Want the user to be able to move freely with few encumbrances.

Want to be able to have multiple 'guests' nearby.

Want to track as many objects as necessary.

Want to have minimal delay between movement of an object (including the head and hand) and the detection of the object's new position / orientation (< 50 msec total).

Want tracking to be accurate (1 mm and 1 degree).

In order to interact with the virtual world beyond moving through it, we typically need to track at least one of the user's hands, and preferably both of them. Tracking the position and orientation of the hand allows the user to interact with the virtual world or other users as though the user is wearing mittens with no fine control of the fingers. When thinking about how the user interacts with the worlds that you are building, think about the kinds of actions a person can do while wearing mittens.

Some modern controllers (like those for the Quest) can judge the position of the fingers for gestures, but users are still interacting through the controller. Other modern systems like Leap Motion and the newer Quest software allow tracking of individual fingers through cameras, giving finer control. Cameras are nice since the user doesn't need to hold additional items, but cameras can also have issues with occlusion, light levels, etc. Sometimes you want to have a controller with its more precise physical button feedback. It all depends on the user and the application.

What we don't want ...

![]()

| Large heavy transmitter (1 cubic foot) and one or more small sensors.

The transmitter emits an electromagnetic field.

Sensors report the strength of that field at their location to a computer.

Sensors can be polled specifically by the computer or transmit continuously.

disadvantages are:

examples: |

|

| Rigid structures with multiple joints.

One end is fixed; the other is the object being tracked, which could be the user's head or their hand.

Physically measure the rotation about the joints in the armature to compute position and orientation.

The structure is counter-weighted, so movements are slow and smooth and don't require much force.

Knowing the length of each segment and the rotation at each joint, the location and orientation of the end point is easy to compute, giving very exact values.

advantages are:

disadvantages are:

|

|

<image from https://cs.nyu.edu/~wanghua/course/multimedia/project.html>
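To make the armature's end-point computation concrete, here is a minimal sketch of forward kinematics for an arm simplified to a single plane. A real armature works in 3D with more joints; the segment lengths and angles used here are made-up values.

```python
import math


def end_point(segment_lengths, joint_angles):
    """Planar forward kinematics.

    Each joint angle (radians) is measured relative to the previous
    segment. Returns (x, y, heading) of the free end, with the fixed
    end at the origin.
    """
    x = y = 0.0
    heading = 0.0
    for length, angle in zip(segment_lengths, joint_angles):
        heading += angle                 # accumulate rotation along the arm
        x += length * math.cos(heading)  # step along the current segment
        y += length * math.sin(heading)
    return x, y, heading


# Two segments: the first points straight up, the second bends back to horizontal.
x, y, heading = end_point([0.5, 0.4], [math.radians(90), math.radians(-90)])
```

Because the result depends only on measured joint angles and known lengths, there is no drift and no interference, which is where the very exact values come from.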

small mobile transmitter and one medium sized fixed sensor

each transmitter emits ultrasonic pulses which are received by microphones on the sensor (usually arranged in a triangle or a series of bars)

as the pulses will reach the different microphones at slightly different times, the position and orientation of the transmitter can be determined

uses:

advantages are:

disadvantages are:

examples:

|

|
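The time-of-arrival idea behind the acoustic tracker can be sketched for a single emitter and three microphones. This assumes the emission time is known (so each arrival time gives a distance), that the microphones sit at the hypothetical positions named in the code, and that the emitter is in front of the microphone plane. Recovering orientation as well would need several emitters on the tracked object.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C


def locate(travel_times, d, i, j):
    """Locate an emitter from pulse travel times (seconds) to three microphones.

    Microphones are assumed to be at (0, 0, 0), (d, 0, 0) and (i, j, 0).
    The emitter is assumed to be on the +z side of that plane.
    """
    r1, r2, r3 = (SPEED_OF_SOUND * t for t in travel_times)
    # Subtracting the sphere equations pairwise leaves linear equations in x and y.
    x = (r1**2 - r2**2 + d**2) / (2 * d)
    y = (r1**2 - r3**2 + i**2 + j**2) / (2 * j) - (i / j) * x
    # Clamp to zero so small timing noise cannot produce a negative square root.
    z = math.sqrt(max(r1**2 - x**2 - y**2, 0.0))
    return x, y, z
```

Since the distances come from the speed of sound, anything that changes that speed (temperature, air currents) or blocks the pulse shows up directly as position error.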

LEDs or reflective materials are placed on the object to be tracked.

Video cameras at fixed locations (for large spaces with multiple people) capture the scene (usually in IR).

Image processing techniques are used to locate the object.

With fast enough processing you can also use computer vision techniques to isolate a head in the image and then use the head to find the position of the eyes.

advantages

disadvantages

examples

|

|

In CAVE2 we use a Vicon optical camera tracking system; in the classroom space it is an OptiTrack system. Both use the same markers, so devices can move easily between them.

One can also use much less expensive camera based systems like the Xbox Kinect or PlayStation VR to track multiple people in a small area. Staying within the field of view and focal area is very important here since you only have a single camera, and users are usually limited to facing a single direction.

How the consumer headsets do it

| VIVE: two powered lighthouses at the high corners of the tracked volume, each sweeping one laser horizontally and another vertically through the space. The sweeps are read by the wired headset (1000 Hz) and the wireless controllers (360 Hz), giving a general accuracy of 3 mm. https://www.roadtovr.com/analysis-of-valves-lighthouse-tracking-system-reveals-accuracy/ |

|

(image from HTC)
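One way to picture how a sweeping laser yields a direction: if a sensor knows when a sweep started and when the laser passed over it, the elapsed time maps to an angle. The sketch below is only an illustration of that idea. The rotation rate, the sync timestamp, and the axis conventions are assumptions, not details taken from these notes or from any VIVE API.

```python
import math

ROTOR_HZ = 60.0  # assumed sweep rate in revolutions per second


def sweep_angle(t_sync, t_hit, rotor_hz=ROTOR_HZ):
    """Angle in radians the laser plane has turned between sync and hit."""
    return 2.0 * math.pi * rotor_hz * (t_hit - t_sync)


def direction(h_angle, v_angle):
    """Unit vector from the base station toward the sensor.

    Both angles are measured from the station's forward (+z) axis:
    h_angle from the horizontal sweep, v_angle from the vertical sweep.
    """
    x = math.tan(h_angle)
    y = math.tan(v_angle)
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)
```

A single base station only gives a direction to each sensor. Position and orientation come from combining the directions to several sensors whose layout on the headset or controller is known.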

Oculus: the wired headset and the wireless Oculus Touch controllers have LEDs mounted all around them, which are detected by (initially) a small sensor sitting on the desk/table in front of you, and later by two or three sensors to track a larger space |

|

| PlayStation VR similarly has a wired headset and wireless controllers that make use of the existing PlayStation Move controller infrastructure, using the PlayStation Camera and LEDs on the headset |

|

Microsoft Mixed Reality - wired headset has cameras that can look around the room to spot open areas and surfaces to map the room (like the HoloLens) and are also used to track the wireless controllers, which have LEDs mounted all around them.

Quest - wireless headset that, like the Microsoft Mixed Reality headsets, tracks its own controllers, but does a much better job of continuing to track them when they are out of sight of the headset.

Which is better depends on the user's needs. For one person:

Fixed tracking systems like OptiTrack / Vicon or simpler systems like the VIVE's lighthouses will give better tracking within a fixed space, but with the trade-off of more setup time.

The more portable Oculus and PlayStation cameras give a good experience within a smaller space as long as the headset and controllers are in sight of the camera.

The mixed reality headsets are easier to move from place to place and faster to set up since they have no external cameras. The original mixed reality headsets needed the controllers to be in sight of the headset, which is not always the most natural place to hold them, but newer headsets like the Quest have additional sensors in the controllers to continue tracking them when they are out of sight of the headset.

If you have more than one person in the space then things get more interesting, since that makes it more likely that cameras can be blocked. Having constellations of cameras (like Vicon or OptiTrack) is one way to solve the problem. Another way is going with the mixed reality solution of each headset tracking its own user's controllers, as long as those mixed reality headsets can agree on where each user is in the global shared space.

Current technology is moving towards fewer cables (USB-C) to the headsets and wireless streaming to the headsets to further reduce the cables, or running the experiences on the headsets themselves (though a headset will have less graphics power than a PC with a large modern graphics card).

self-contained

gyroscopes and accelerometers used

knowing where the object was and its change in position / orientation, the device can 'know' where it now is

tend to work for limited periods of time, then drift as errors accumulate
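A small numeric sketch shows why inertial tracking drifts. Position comes from integrating acceleration twice, so even a tiny constant sensor error grows with the square of time. The bias and sample rate below are hypothetical values chosen only to show the trend.

```python
BIAS = 0.01  # hypothetical constant accelerometer error, m/s^2
DT = 0.01    # hypothetical sample interval (100 Hz), seconds


def integrate_position(accel_samples, dt):
    """Double-integrate 1D acceleration samples (m/s^2) into a position (m)."""
    velocity = 0.0
    position = 0.0
    for a in accel_samples:
        velocity += a * dt         # first integration: acceleration -> velocity
        position += velocity * dt  # second integration: velocity -> position
    return position


def drift_after(seconds):
    """Position error for a device that is actually standing still."""
    n = int(round(seconds / DT))
    return integrate_position([BIAS] * n, DT)
```

With these numbers the error is about 5 mm after one second but about half a meter after ten seconds, which is why inertial sensors are usually paired with another tracker that can correct them.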

For outdoor work GPS can give the general location of the user (3 meter accuracy horizontally in an open field, much worse as you get near buildings). Vertical accuracy is around 10 meters, so that is not very useful right now. Newer constellations of satellites, as well as ground based reference stations, can substantially improve on that accuracy.

For better vertical accuracy devices are now including barometers. These work pretty well when calibrated to the local air pressure, which may be constantly changing as the weather changes.
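To see why calibration matters, here is a minimal sketch that converts pressure to altitude using the international barometric formula, a standard-atmosphere approximation. The function name and the choice of hectopascals are assumptions for illustration; a real device would take the reference pressure from a local weather source.

```python
STANDARD_SEA_LEVEL_HPA = 1013.25


def altitude_m(pressure_hpa, reference_hpa=STANDARD_SEA_LEVEL_HPA):
    """Approximate altitude in meters above the level where pressure equals reference_hpa."""
    return 44330.0 * (1.0 - (pressure_hpa / reference_hpa) ** (1.0 / 5.255))


# The same reading interpreted against two reference pressures 1 hPa apart.
calibrated = altitude_m(1012.25, reference_hpa=1012.25)
stale = altitude_m(1012.25, reference_hpa=1013.25)
```

Near sea level a reference that is off by 1 hPa shifts the computed altitude by roughly 8 meters, so a weather front moving through can easily swamp the height of a floor in a building.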

Fiducial Markers

As shown in Project 1, a common way for camera based AR systems to orient themselves is by using fiducial markers. These could be pieces of paper held in front of a camera where a 3D object suddenly appears on the paper (when looking at the camera feed). They can also be placed on walls, floors, and ceilings so moving users with cameras can locate where they are.

In cities, AR can use large buildings as huge fiducial markers, as they don't change very often, and this can help compensate for poor GPS within the urban canyons.

Combinations

Combining multiple forms of tracking is a very good way to improve tracking in complex situations, just as our phone's GPS-based information is improved if we also have the WiFi antennas working.

InterSense uses a combination of acoustic and inertial. Inertial can deal with fast movements and acoustic keeps the inertial from drifting.
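A complementary filter is one common, simple way to combine a fast but drifting source with a slow but stable one. The sketch below is a generic 1D illustration of that technique, not a description of what InterSense actually implements. The blend factor and the simulated bias are assumptions.

```python
def fuse(previous_estimate, inertial_delta, acoustic_position, alpha=0.98):
    """One complementary filter step in 1D.

    Trust the inertial prediction for short-term motion (weight alpha)
    and pull gently toward the acoustic reading (weight 1 - alpha)
    so accumulated drift is bled away.
    """
    predicted = previous_estimate + inertial_delta
    return alpha * predicted + (1.0 - alpha) * acoustic_position


def simulate(steps=1000, true_position=1.0, bias_per_step=0.001):
    """Compare inertial-only tracking against fused tracking for a still object."""
    inertial_only = true_position
    fused = true_position
    for _ in range(steps):
        inertial_only += bias_per_step  # drift accumulates without bound
        fused = fuse(fused, bias_per_step, true_position)
    return inertial_only, fused
```

In this simulation the inertial-only estimate wanders a full unit away from the truth, while the fused estimate settles at a small bounded error. Raising alpha makes the result smoother and more responsive to fast motion but lets more drift through.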

Outdoor AR devices can use GPS and orientation / accelerometer information to get a general idea of where the user is, and then use the on-board camera to refine that information given what should be in sight from that location at that orientation.

A current popular version of this is Inside Out Tracking.

The HoloLens, Microsoft's Mixed Reality headsets, and Facebook's Quest don't want to rely on external markers, emitters, or cameras; they want to be able to track using just what the user is wearing, with cameras and sensors looking outward. This requires a combination of sensors, including inertial (for orientation tracking) and visible light camera(s) and depth camera(s) for position tracking, and all of them together are used for space mapping.

With the HoloLens you first have to help the headset map the space by looking all around the room you are in, and remap it if there are any major changes in the position of the furniture.

Google's Project Tango and others use similar combinations of sensors on headsets and smartphones.

Rather than looking for a generic solution, specialized VR applications are usually better served by specialized tracking hardware. This hardware generally replaces tracking of the user with an input device that handles navigation.

For Caterpillar's testing of their cab designs they placed the actual cab hardware into the CAVE so the driver controls the virtual loader in the same way the actual loader would be controlled. The position of the gear shift, the pedals, and the steering wheel determine the location of the user in the virtual space.

A treadmill can be used to allow walking and running within a confined space. More sophisticated multi-layer treadmills or spheres allow motion in a plane.

https://www.youtube.com/watch_popup?v=mi3Uq16_YQg

In 2024 Disney reinvented the omni-directional treadmill.

https://kotaku.com/disney-world-new-holotile-vr-moving-treadmill-floor-1851189340

A bicycle with handlebars allows the user to pedal and turn, driving through a virtual environment.

https://www.youtube.com/watch_popup?v=PDb2zoxSZoQ

HoloLens - https://www.youtube.com/watch_popup?v=Ul_uNih7Oaw

VIVE - https://www.youtube.com/watch_popup?v=rv6nVPPDmEI

Quest - https://www.youtube.com/watch_popup?v=zh5ldprM5Mg

OptiTrack - https://www.youtube.com/watch_popup?v=cNZaFEghTBU

a bit more about latency

Accuracy needs to come from the tracker manufacturers. Latency is partly our fault.

Latency is the sum of:

Another important point about latency is consistency. If the latency isn't too bad, people will adapt to it, but it's very annoying if the latency isn't consistent - people can't adapt to jitter.
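The difference between average latency and jitter is easy to see with numbers. In this sketch the two sets of samples are invented, and jitter is measured as the standard deviation of the latency, which is one reasonable definition among several. The 50 msec budget is the total delay target given earlier in these notes.

```python
import statistics

BUDGET_MS = 50.0  # total delay target from the tracking goals


def summarize(latencies_ms):
    """Return (mean latency, jitter) in milliseconds for a list of samples."""
    mean = statistics.mean(latencies_ms)
    jitter = statistics.pstdev(latencies_ms)  # spread around the mean
    return mean, jitter


# Hypothetical end-to-end measurements with the same average.
steady = [40.0, 41.0, 40.0, 39.0, 40.0]
erratic = [20.0, 60.0, 25.0, 70.0, 25.0]

steady_mean, steady_jitter = summarize(steady)
erratic_mean, erratic_jitter = summarize(erratic)
```

Both runs average 40 msec and so both look fine against the budget, but the second one swings by tens of milliseconds from frame to frame, which is the case users cannot adapt to.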

How many sensors is enough?

Tracking the head and hand is often enough for working with remote people as avatars.

![]()

Most of the current consumer HMDs give you two controllers - one for each hand.

The VIVE also has a set of separate sensors that can be attached to feet or physical props, allowing you to track a total of 5 things (head, 2 controllers, 2 others) with the same 2 lighthouses. Room-scale optical tracking systems like Vicon and OptiTrack allow developers to add marker balls or stickers to objects like cereal boxes or tables to track them as well as people.

Here are some photos of a user putting on sensors and another user dancing with 'the thing growing' at SIGGRAPH 98 in Orlando. This application tracked the head, both hands and the lower back.

Today you could strap a sensor like the VIVE Object Tracker to your ankles or wrists, or attach it to an object like a tennis racket or a baseball bat.

![]()

For Augmented Reality the goals are the same, but doing this kind of tracking 'in the real world' is much harder than in a controlled space. Google Live View sort of works, but it's not good enough to be a regular replacement for Google Maps.

https://www.youtube.com/watch_popup?v=w9chZKt2YFQ

One common use of Augmented Reality has been in sports. Having cameras in known locations within stadiums allows enhancements. For decades sports on TV have had additional information superimposed on the plane of the TV screen (score, time left, etc.), but for over 20 years American football games have had the line of scrimmage and the first-down line drawn for the viewers at home on the field itself, under the players. Today these graphics are often augmented with additional information (who has the ball, direction of play, etc.). One could imagine that in future the players could have similar augmented information while they play.

(image from rantsports.com)

https://en.wikipedia.org/wiki/1st_%26_Ten_(graphics_system)

In the mid 1990s Fox Sports added IR lights to hockey pucks that allowed cameras and computers to track the puck and add a graphical tail to better show viewers at home where the puck was. The public response was not positive.

https://en.wikipedia.org/wiki/FoxTrax

https://slate.com/culture/2014/01/foxtrax-glowing-puck-was-it-the-worst-blunder-in-tv-sports-history-or-was-it-just-ahead-of-its-time.html

https://www.youtube.com/watch?v=grOttsHuuzE

And while not particularly enhancing the sport itself, for football the static perimeter advertising signs around the pitch are being replaced by dynamic LCD and LED signs for those in attendance, but even those advertisements are often replaced by others for viewers on TV / Cable / Internet, and can be replaced by different ads for different locations.

As tracking, computation, and personal AR gear improve, there are some interesting possibilities for use in the home and at the stadium:

https://techcrunch.com/2018/06/19/football-matches-land-on-your-table-thanks-to-augmented-reality/

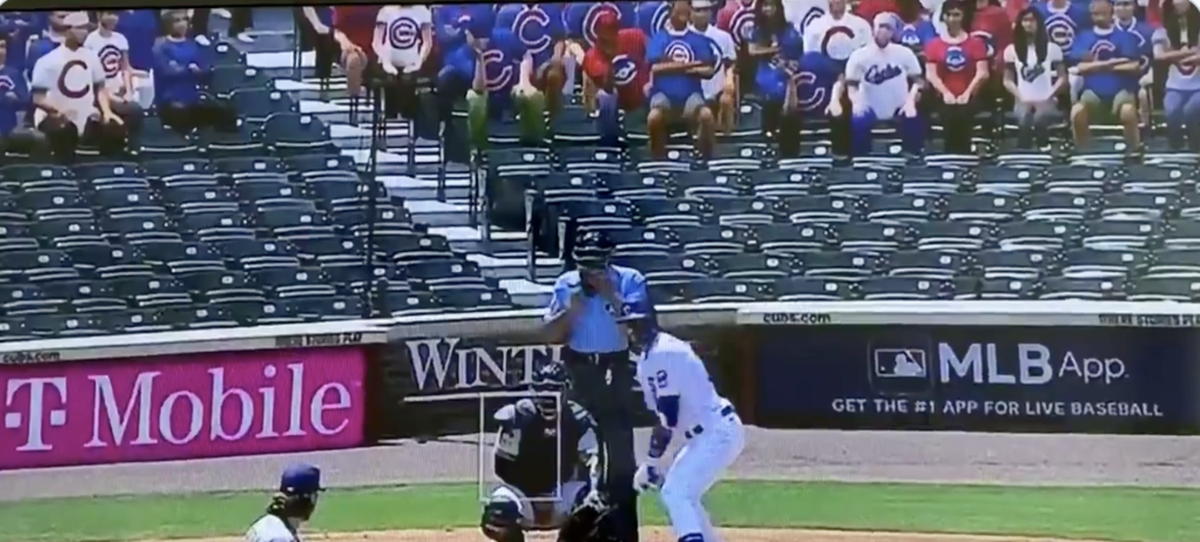

2020 saw some interesting attempts at putting augmented fans into empty stadiums for the home audience. Major League Baseball used the Unreal Engine to create virtual moving fans that could wear clothing appropriate to the local team. The repetitive movements were considered creepy. Professional basketball used a less sophisticated technique where fans could send in photos of their heads to be placed in the stands, which was also considered creepy.

Note that the highlighting of the strike zone on the baseball telecast is another use of augmented reality.

In the simplest Virtual Reality world there is an object floating in front of you in 3D which you can look at. Moving your head and / or body allows you to see the object from different points of view. This is also the default in an Augmented Reality world.

In Fish Tank VR setups, or HMDs like the original Rift, the user is typically sitting with a limited space to move in. With more modern HMDs like the newer Rift and VIVE, or room scale systems like the CAVE and CAVE2, the user has a larger space to walk in, jump up, kneel down, lie on the floor, etc., and arcade level systems give you larger rooms to move around in using just your body. Unseen Diplomacy pushes this navigation in a limited space to the extreme - https://www.youtube.com/watch_popup?v=KirQtdsG5yE

But often you want to move further, or in effect move a different part of the virtual world into the area that you can easily move through.

Common ways of doing this involve using a joystick or directional pad on the wand to 'drive' through the space as though you were in a first person video game. This gives you a better sense of continuity in the virtual world, though it can risk simulator sickness, which is why most current consumer HMD games don't do it. Fallout 4 VR has that as an option - https://www.youtube.com/watch?v=sD7QUrfToms

Another simple option is using a wand to point to where you want to go and teleporting from one place to another within the virtual world, which is what most current consumer HMD games use. Fallout 4 VR uses this as well - https://www.youtube.com/watch_popup?v=S0D0N2SYHlI

Another option is to use large gestures such as swinging both your arms (holding two wands) up and down as though you were jogging to tell the system you want to walk, or pointing in the direction you want to go if you have hand and finger tracking. VR Dungeon Knight uses the jogging metaphor - https://www.youtube.com/watch_popup?v=TTolJoKUcks

If you have superhuman capabilities as in Megaton Rainfall VR, you can have the full Superman / Captain Marvel flying experience - https://www.youtube.com/watch_popup?v=rD0QR2YPzv8

Other specialized options, as discussed above, include real devices like bicycles, treadmills, car interiors where you drive the virtual car with the actual controls, plane interiors where you fly the virtual plane with the actual controls, trains, buses, trucks, etc.

In Augmented Reality you are typically limited to your actual physical movements (or the movements of a real car or a real bike), as the Augmented Reality world is anchored to the real world.

Here are several controllers that we used in the first 10 years of the CAVE. The common elements on these included a joystick and three buttons (same as the 3 buttons on unix / IRIX computer mice.)

Just as HMDs tend to be similar to each other because they are all based on the structure of the human head, hand held controllers tend to be similar because they are based on the structure of the human hand.

|

The original 'hand made' CAVE / ImmersaDesk wand based on Flock of Birds tracker from 1992-1998. It would have been really handy to have had rapid prototyping machines back then to make these. |

|

The new CAVE/ ImmersaDesk wanda with a similar joystick plus three buttons, based on Flock of Birds tracker from 1998-2001 |

|

InterSense controller, joystick plus 4 buttons, used in the early 2000s |

| When we designed and built CAVE2 from 2009-2012 we wanted to go with controllers that were easier and cheaper to replace if they were broken, so we shifted to PlayStation controllers with marker balls mounted on the front, again giving us multiple buttons, a d-pad and joystick up top and a trigger below. This was the first CAVE controller to be cable free, so it needs to be charged up like any wireless game controller. The d-pad added a lot of advantages for interacting with menus. Using the lower trigger on the front was a big advantage over the joystick for navigation. |

Current consumer HMD controllers follow a similar pattern but instead of the more fragile marker balls a circle of sensors is used.

|

The initial VIVE controllers |

|

The Oculus Touch has similar features in a more compact arrangement |

|

The Oculus Quest has basically the same features in a flipped configuration |

The Valve Index 'knuckles' controllers are also similar but use a strap to attach the controllers to your hands, freeing your fingers for gestures.

https://www.theverge.com/2018/6/22/17494332/valve-knuckles-ev2-steamvr-controller-development-kit-shipments-portal-moondust-demo

Different controllers have different numbers of buttons and controls.

More buttons give you more options that can be directly controlled by the user, but may also make it harder to remember what all those buttons do. More buttons also make it harder to instruct a novice user in what they can do. Asking a new user to press the 'left button' or the 'right button' is pretty easy, but when you get to 'left on the d-pad' or 'press the right shoulder button' then you have a more limited audience that can understand you.

Most VR software today automatically brings up context sensitive overlays about what the various controller buttons do to help users get familiar with the controls.

Game controllers (and things that look like game controllers) have a big advantage in terms of familiarity for people who play games. They have often gone through pretty substantial user testing, and are often relatively inexpensive to replace.

One of the main uses of controllers is to manipulate objects in the virtual world.

The user is given a very 'human' interface to VR ... the person can move their head or hand, and move their body, but this also limits the user's interaction with the space to what you carry around with you. There is also the obvious problem that you are in a virtual space made out of light, so it's not easy to touch, smell, or taste the virtual world, though all of the senses have been used in various projects.

Even if you want to just 'grab' an object there are several issues involved.

A 'natural' way to grab a virtual object is to move your hand holding a controller so that it touches the virtual object you want to manipulate. At this point the virtual environment could vibrate the controller, or add a halo to the object, or make the object glow, or play a sound to help you know that you have 'touched' the object. You could then press a button to 'grab' the object, or have the object 'jump' into your hand. Modern controllers like the Valve Index controllers, which can sense where your fingers are and have a strap that allows you to open your hand without dropping the controller, can allow you to actually 'grab' a virtual object using a grabbing motion, while still having access to traditional buttons.

The Quest allows you to discard the controllers and use only your camera-tracked hands for certain environments. If the cameras can successfully track your hands, which is normally but not always true, you can see your virtual hands moving fluidly, and use very natural motions to grab objects, but when it doesn't work it can be very aggravating.

While this kind of motion is very natural, navigating to the object may not be as easy, or the type of display may not encourage you to 'touch' the virtual objects. It can also be impractical to pick up very large objects because they can obscure your field of view. In that case the user's hand may cast a ray (raycasting), which allows a user to interact at a distance. One fun thing to try in VR is to act like a superhero and pick up a large building or train and throw it around - it turns out that when you pick it up you can't see anything else - 'church chuck'.

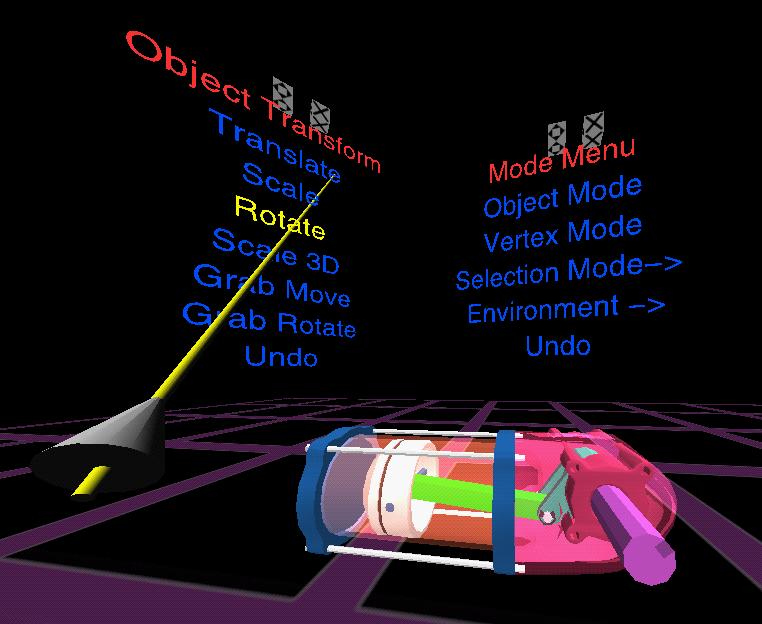
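Raycasting from the hand can be sketched as a ray tested against simple bounding volumes, picking the closest hit. This is a minimal illustration that assumes each selectable object is approximated by a bounding sphere. The object names and sizes are made up, and a real engine would supply its own raycast call.

```python
import math


def ray_hits_sphere(origin, direction, center, radius):
    """Distance along the ray to the sphere, or None if the ray misses.

    `direction` must be a unit vector. Returns 0.0 if the ray starts
    inside the sphere.
    """
    to_center = [c - o for o, c in zip(origin, center)]
    along = sum(a * b for a, b in zip(to_center, direction))  # closest approach
    if along < 0.0:
        return None  # sphere is behind the hand
    off_axis_sq = sum(a * a for a in to_center) - along * along
    if off_axis_sq > radius * radius:
        return None  # ray passes outside the sphere
    return max(along - math.sqrt(radius * radius - off_axis_sq), 0.0)


def pick(origin, direction, objects):
    """Return the name of the nearest object hit by the ray, or None.

    `objects` is a list of (name, center, radius) tuples.
    """
    best = None
    for name, center, radius in objects:
        distance = ray_hits_sphere(origin, direction, center, radius)
        if distance is not None and (best is None or distance < best[0]):
            best = (distance, name)
    return best[1] if best else None


scene = [
    ("train", (0.0, 0.0, -10.0), 1.0),
    ("building", (0.0, 0.0, -30.0), 5.0),
    ("church", (10.0, 0.0, -10.0), 1.0),
]
selected = pick((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), scene)
```

Choosing the nearest hit means an object in front blocks the ones behind it, which matches what the user sees along the drawn ray.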

Menus

One common way of interacting with the virtual world is to take the concept of 2D menus from the desktop into the 3D space of VR. These menus exist as mostly 2D objects in the 3D space.

This can be extended from simple buttons to various forms of 2D sliders.

These menus may be fixed to the user, appearing near the user's head, hand, or waist, so as the user moves through the space, the menus stay in a fixed position relative to the user. Alternatively the menus may stay at a fixed location in the real space, or a fixed location in the virtual space.

e.g. from the current crop of HMD experiences:

| Since Skyrim was designed as a desktop game it has a traditional menu structure. For the VR version that interface maps directly over, with a huge menu appearing in the VR world and the controller buttons being used to move through the menu structure and select items. They correctly used the trackpad / joystick on the controllers to move through the menu system rather than relying on pointing directly at menu items to select them, as that can get fatiguing. |  |

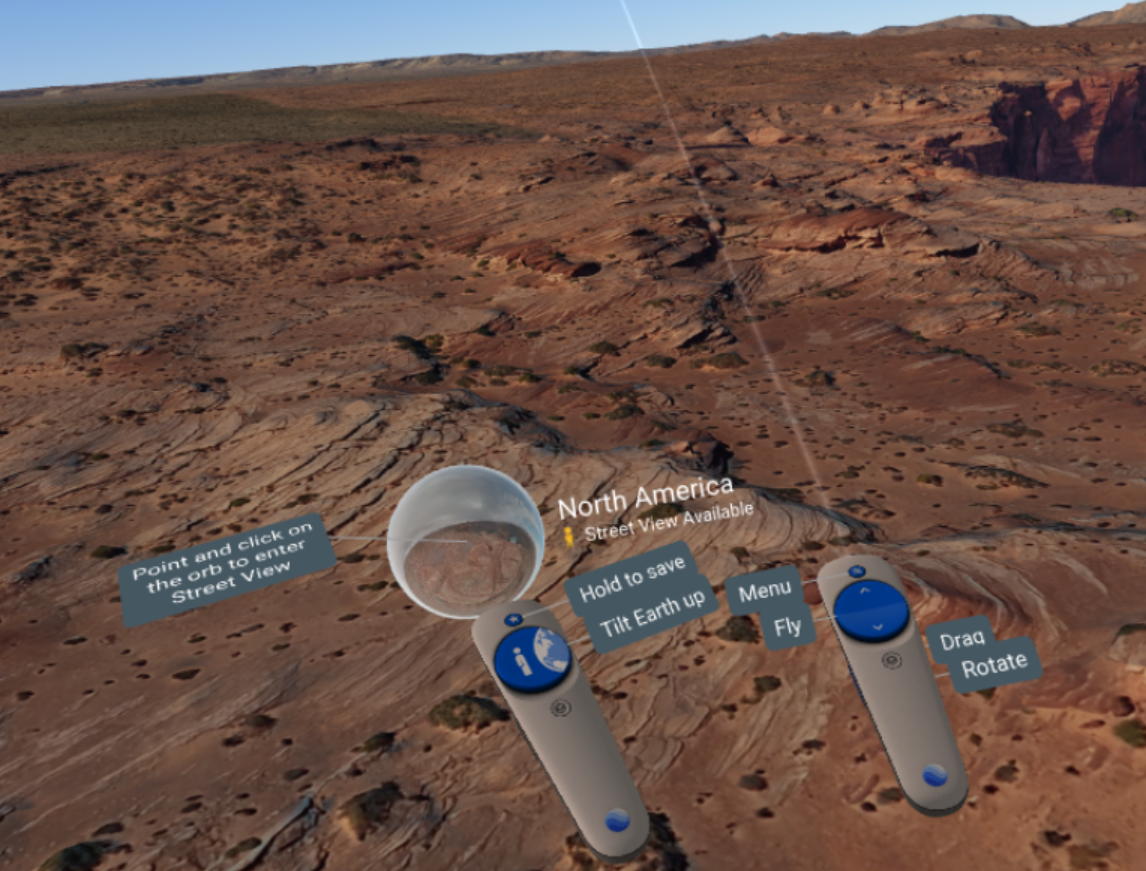

| Google Earth VR maps the controls to buttons on the controller with tooltips floating nearby - as the menu options change the tooltips change. |  |

| Vanishing Realms has a nice UI at the user's waist where you store keys, food, and weapons. To interact, the user intersects that menu with one of the controllers, as though reaching down to grab something off their belt. |

|

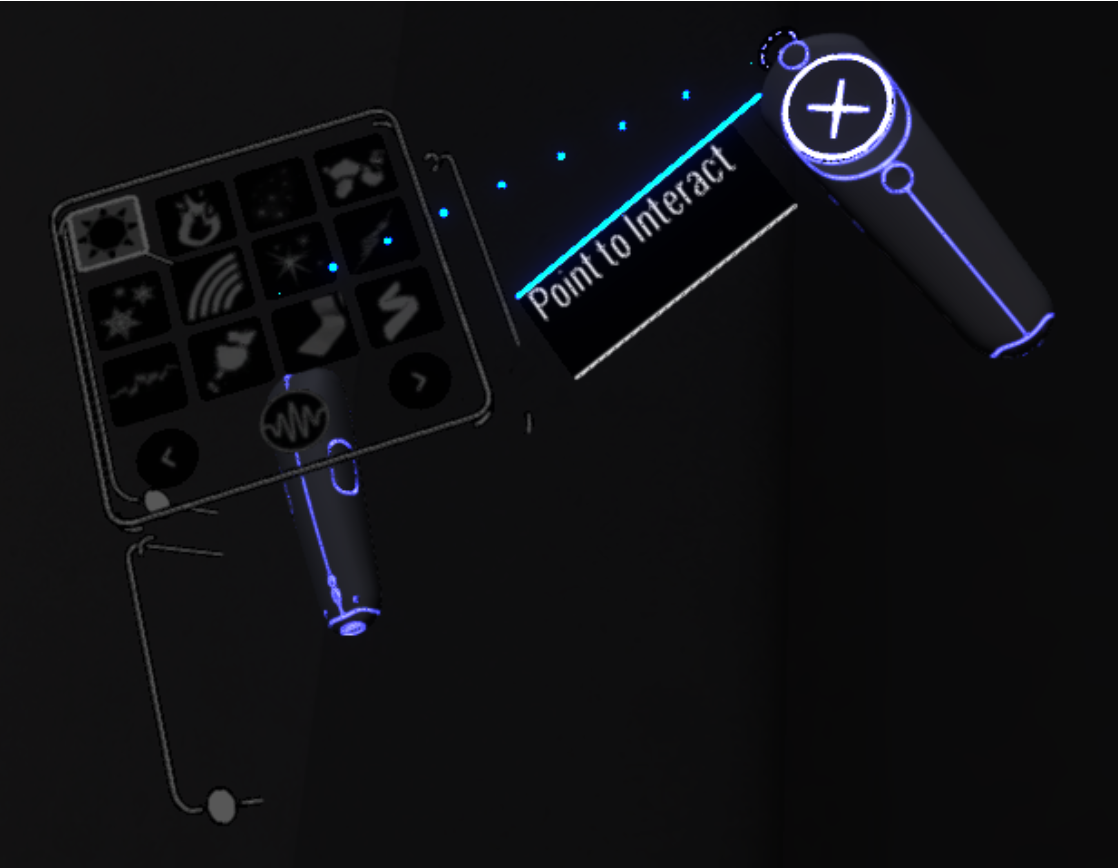

| Tilt Brush has a nice 2-handed 3D UI where the multi-faceted menu appears in one hand and you select from it with the other hand |

|

| Bridge Crew has a nice UI with (lots of) buttons that you have to 'press' with your virtual hands (controller) - including virtual overlay text to remind you which is which |

|

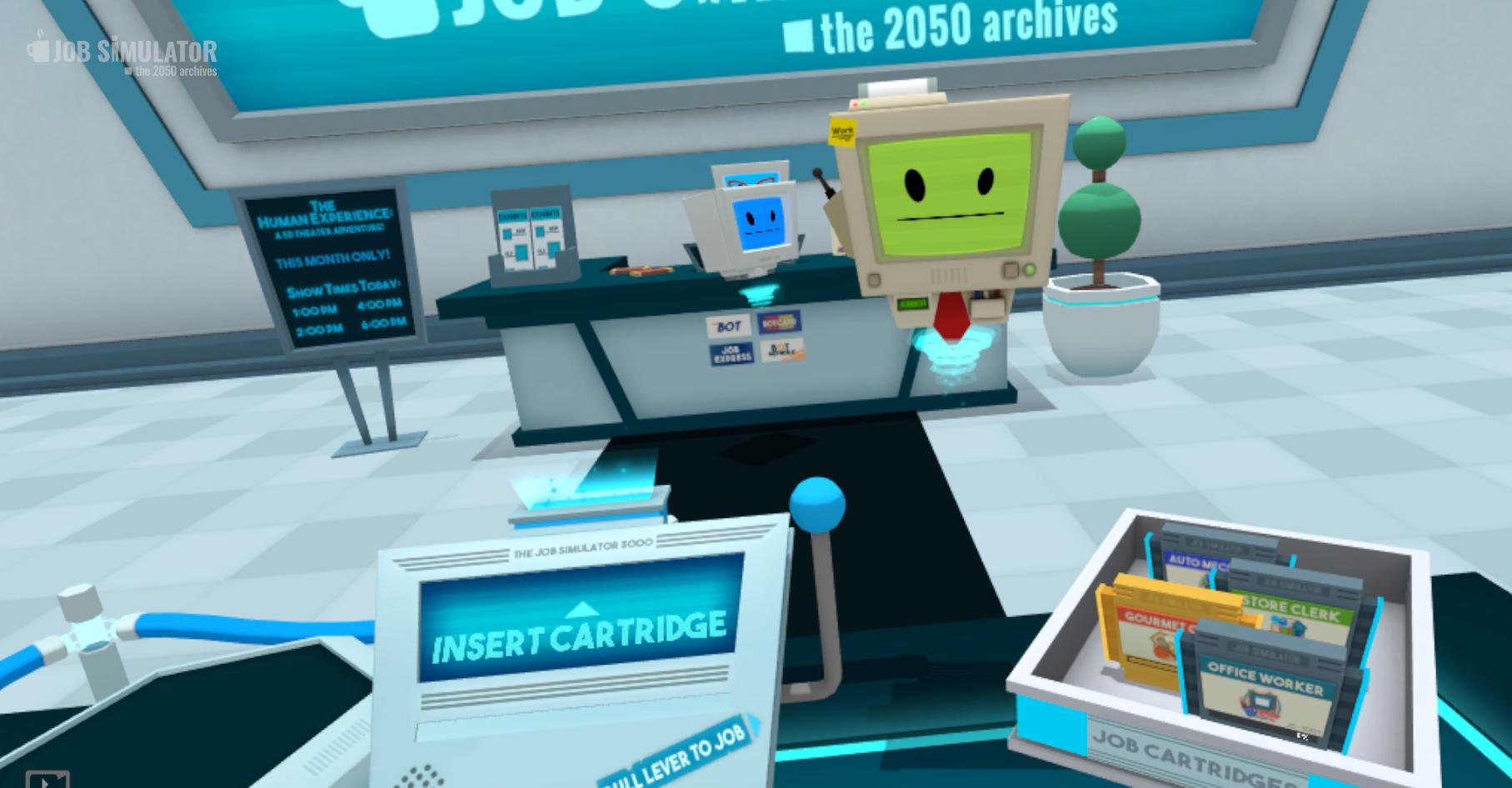

| Job Simulator has a nice UI built into the 3D environment itself based on object manipulation |

|

Whether hand held or scene based, menus may collide with other objects in the scene or be obscured by other objects in the scene. One way to avoid this is to turn z-buffering off for these menus so they are always visible even when they are 'behind' another object.

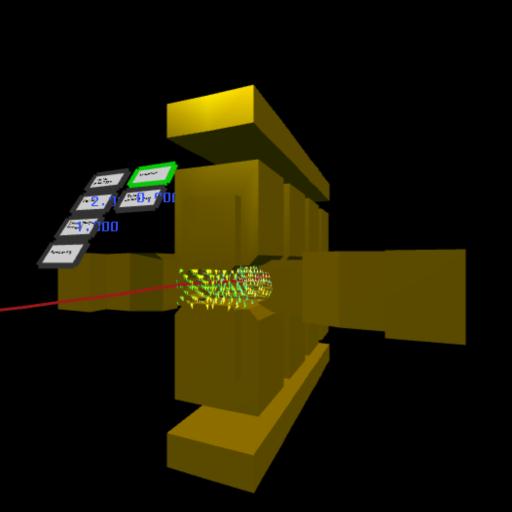

When you get into more complicated virtual worlds for design or visualization, the number of menus multiplies dramatically, as does the need for textually naming them, so more traditional menus are more common in these domains. There are several ways to activate these kinds of menus: using the wand as a pointer to select menu items, using a d-pad to move through a menu, or intersecting the wand itself with the menu items. Using a d-pad tends to work better than a pointer if you have a single menu to move through, as it can be hard to hold your hand steady when pointing at complex menus.

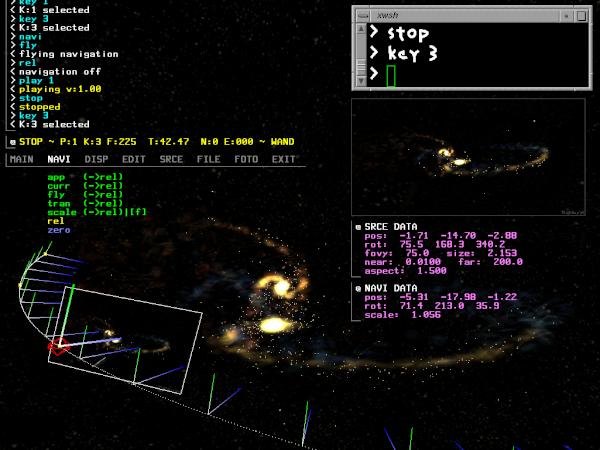

Another option is to use a head-up display for the menu system where you look at the menu item you want to select and choose it with a controller. Here is a version of that we did back in 1995 with the additional option for selecting the menu options by voice. The HoloLens uses a similar menu system.

In Augmented Reality this can be trickier since you also have the real world involved, both in terms of the graphics, and in terms of the people you share the world with. Google Glass's physical control on the side of Glass worked OK for small menu systems, augmented by voice. Microsoft's HoloLens pinch gesture for selecting within the field of view of the camera didn't work quite so well, but the physical button they provided did, as long as you kept the physical button with you.

Voice

One way to get around the complexity of the menus is to talk to the computer via a voice recognition system. This is a very natural way for people to communicate. These systems are quite robust, even for multiple speakers given a small fixed vocabulary, or a single speaker and a large vocabulary, and they are not very expensive. Alexa and Siri have moved into our homes and are pretty good at understanding us, but they do sometimes trigger when we don't expect them to.

However, voice commands can also be hard to learn and remember.

In the case of VR applications like the Virtual Director from the 90s, voice control was the only convenient way to get around a very complicated menu system.

The HoloLens makes effective use of voice to rapidly move through the menus without needing to look and pinch.

Ambient microphones do not add any extra encumbrance to the user in dedicated rooms, and small wireless microphones are a small encumbrance.

HMDs or the controllers typically include microphones which work pretty well, so adding them onto AR glasses will be easy. Socially, it is an open question whether it will be acceptable for people to be talking to their virtual assistants.

Problems can occur in projection-based systems since there are multiple users in the same place and they are frequently talking to each other. This can make it difficult for the computer to know when you are talking to your friends and when you are talking to the computer, as can happen with Alexa and Siri. There is a need for a way to turn the system on and off, typically with a keyword, and often the need for a personal microphone if the space is noisy.

Voice is becoming much more common now for our smartphones, our homes, and our cars, as the processing and the learning can be offloaded into the cloud.

Gesture recognition

This also seems like a very natural interface. Gloves can be used to accurately track the position of the user's hand and fingers. Some simple gloves track contacts (e.g. thumb touching middle finger), others track the extension of the fingers. The former are fairly robust, the latter are still somewhat fragile. Camera tracking as in the Kinect, the Quest, and AR systems can do a fairly good job with simple gestures, and is rapidly improving, but still suffers from occlusion issues.
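A simple contact-style gesture such as a pinch can be detected from tracked fingertip positions with a distance threshold. The sketch below uses two thresholds (hysteresis) so that tracking noise near the boundary does not make the pinch flicker on and off. The class name and the threshold values in millimeters are hypothetical.

```python
import math


class PinchDetector:
    """Detect a thumb-to-index pinch from fingertip positions in millimeters."""

    def __init__(self, press_mm=15.0, release_mm=25.0):
        self.press_mm = press_mm      # fingertips closer than this start a pinch
        self.release_mm = release_mm  # fingertips farther than this end it
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        """Feed one frame of tracking data; returns True while pinching."""
        distance = math.dist(thumb_tip, index_tip)
        if self.pinching:
            if distance > self.release_mm:
                self.pinching = False
        elif distance < self.press_mm:
            self.pinching = True
        return self.pinching


detector = PinchDetector()
frames = [40.0, 10.0, 20.0, 30.0]  # fingertip separation per frame, mm
states = [detector.update((0.0, 0.0, 0.0), (d, 0.0, 0.0)) for d in frames]
```

Here the 20 mm frame still counts as pinching because the fingers have not yet opened past the release threshold. When the camera loses a fingertip to occlusion there is no distance to test at all, which is the failure this approach cannot fix on its own.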

Learning new gestures may take time, as it did for tablets and trackpads.

The possibilities with tracking hands improve if you have two of them. Multigen's SmartScene from the mid 90s was a good example using two Fakespace Pinchgloves for manipulation. The two handed interaction of Tilt Brush seems like a modern version of the SmartScene interface using controllers.

Full body tracking involving a body suit gives you more opportunities for gesture recognition, and simple camera tracking does a pretty good job with gross positions and gestures.

One issue here, as with voice, is how the computer decides that you are gesturing to it and expect something to happen, as opposed to gesturing to yourself or another person.

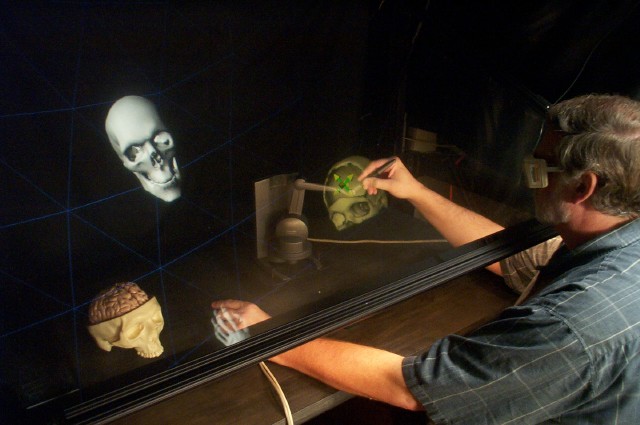

Haptics

A PHANToM in use as part of a cranial implant modelling application, with a video here - https://www.youtube.com/watch_popup?v=cr4u69r4kn8

The PHANToM gives 6 degrees of freedom as input (translation in XYZ and roll, pitch, yaw) and 3 degrees of freedom in output (translation in XYZ).

There is a nice introductory video on the PHANToM here - https://www.youtube.com/watch_popup?v=0_NB38m86aw

You can use the PHANToM by holding a stylus at the end of its arm as a pen, or by putting your finger into a thimble at the end of its arm.

The 3D workspace ranges from 5x7x10 inches to 16x23x33 inches
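The split between 6 degrees of freedom in and 3 out can be illustrated with a penalty-based force model, a common basic approach to haptic rendering. This sketch is not the PHANToM's own software: the stiffness value and the flat virtual floor are assumptions. The device reports where the stylus tip is, and the program answers with a translational force only.

```python
def floor_force(tip_position, floor_height=0.0, stiffness=500.0):
    """Force in newtons that pushes the stylus tip out of a virtual floor.

    tip_position is (x, y, z) in meters with +y up. The force is zero
    above the floor and grows linearly with penetration depth below it,
    like a spring with the given stiffness in N/m.
    """
    _, y, _ = tip_position
    penetration = floor_height - y
    if penetration <= 0.0:
        return (0.0, 0.0, 0.0)  # not touching: no force
    return (0.0, stiffness * penetration, 0.0)


above = floor_force((0.0, 0.01, 0.0))    # 1 cm above the floor
inside = floor_force((0.0, -0.002, 0.0))  # 2 mm into the floor
```

The stylus orientation is available as input, but with only translational output the device cannot twist the stylus back, so effects that need torque have to be approximated.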


There is also work today using air pressure and sound to create a kind of sense of touch. Though the sensation is not as strong as with a PHANToM, these systems operate over a wider area.

Other issues

We often compensate for the lack of one sense in VR by using another. For example we can use a sound or a change in the visuals to replace the sense of touch, or a visual effect to replace the lack of audio.

In projection based VR systems you can carry things with you. For example, in the 90s we could carry PDAs giving an additional display, handwriting recognition, or a hand-held physical menu system. Today smart phones or tablets provide the same functionality with far more capabilities.

In smaller fish tank VR systems, or in hybrid systems like CAVE2, you have access to everything on your desk, which can be very important when VR is only part of the material you need to work with.

In Augmented Reality you have access to everything in the real world, so interacting with the real world is pretty much the same as before, especially with a head mounted AR system.

last revision 1/10/2024