In the last 13 classes we have talked about a lot of algorithms and techniques involved in computer graphics. In this class I want to talk about some of the toys that I get to play with and deal with some current topics in the area of computer graphics.

Online, you can click here to see some images created by members of the Electronic Visualization Laboratory here at UIC. Each lecture has a different set of images (collect 'em all!).

Earlier in the class we talked about the cues to depth other than stereo vision, but stereo vision is the dominant way of determining the depth of anything really close.

In order to make use of stereopsis in computer graphics you must be able to send slightly different images to the left and right eyes.

There are a couple different ways to do this: the '3D movie way', and the 'viewmaster' way.

The '3D movie way'

As a kid you probably read some 3D comics which came with red/blue glasses, or you might have even seen a movie with these paper glasses (either with the red/blue glasses from way back in the 50s with films like 'House of Wax', or using the polarized filter glasses during the 3D revival in the mid 80s). The Documentary Film Group at the University of Chicago still occasionally shows an old 3D movie like 'Creature from the Black Lagoon', which draws pretty good crowds.

The images look like the one below (and if you have a pair of red/blue glasses and a correctly calibrated monitor this image will become 3D). The coloured lenses make one of the images more visible to one of your eyes and less visible to your other eye, and vice versa.
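As a minimal sketch of how such a red/blue image could be assembled, the snippet below puts the left-eye image in the red channel and the right-eye image in the blue channel. It assumes the red lens sits over the left eye (so the left eye sees mostly the red channel) and that images are plain nested lists of grayscale values; the function name `make_anaglyph` is made up for illustration and is not from any library.

```python
# Minimal red/blue anaglyph sketch (assumption: red lens over the left eye).
# Images are plain nested lists of grayscale values in the range 0..255.

def make_anaglyph(left_gray, right_gray):
    """Combine two same-sized grayscale images into one RGB image."""
    if len(left_gray) != len(right_gray):
        raise ValueError("images must have the same height")
    anaglyph = []
    for left_row, right_row in zip(left_gray, right_gray):
        if len(left_row) != len(right_row):
            raise ValueError("images must have the same width")
        # Red channel carries the left-eye image, blue carries the
        # right-eye image; green is simply left at zero in this sketch.
        anaglyph.append([(l, 0, r) for l, r in zip(left_row, right_row)])
    return anaglyph


# Tiny 2x2 example images (hypothetical data).
left = [[255, 0], [10, 20]]
right = [[0, 255], [30, 40]]
result = make_anaglyph(left, right)
```

Each pixel of `result` is an `(r, g, b)` tuple, so a pixel that is bright only in the left image comes out pure red and one that is bright only in the right image comes out pure blue.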

The 'viewmaster way'

As a kid you probably also had a viewmaster(tm) and saw the cool 3D pictures. Those worked exactly the same way. Your left eye was shown one image on the disc, while your right eye was shown a different image. The images came in pairs and as you pulled the lever on the side a different pair of images would appear.

One inexpensive way to do this is to draw two slightly different images onto the screen (or onto a piece of paper), place them next to each other and tell the person to fuse the stereo pair into a single image. This is easy for some people, very hard for other people, and impossible for a few people.

Some of these images require your left eye to look at the left image, others require your left eye to look at the right image.

To see the pictures below as a single stereo image look at the left image with your right eye and the right image with your left eye. If you aren't used to doing this then try this: Hold a finger up in front of your eyes between the two images on the screen and look at the finger. You should notice that the two images on the screen are now 4. As you move the finger towards or away from your head the two innermost images will move towards or away from each other. When you get them to merge together (that is, you only see 3 images on the screen) then all you have to do is re-focus your eyes on the screen rather than on your finger. Remove your finger, and you should be seeing a 3D image in the middle.

It can be a bit of a strain to get 3D images this way, so animated 3D computer graphics are not done this way.

You either have to isolate a person's eyes and feed a different video signal to each eye (like an animated viewmaster) or have a single screen, and figure out a way to force the correct eye to see the correct image: a high-tech version of the red/blue glasses. Both techniques are currently in use. The former is relatively straightforward, and variants depend on how you isolate the eyes. In the latter there are several different ways of getting the correct eye to see the correct image. You can use the 50s coloured lenses trick. You can polarize the light and wear polarized glasses so that only the correct light hits the correct eye. You can also alternate images very quickly (show the image for the left eye, then the image for the right eye, then the image for the left eye, etc) and at the same time block the eye that shouldn't be seeing anything - this is similar to how a motion picture generates the illusion of movement so you don't see the film sliding through the projector, only the individual frames, but here we are doing it at the eye for each eye.

As the image is generated or projected on a flat screen, every part of the image is the same distance from your eye so there is no way for parts of the image to be in focus while other parts are out of focus - which makes things slightly unrealistic. There is also a problem in that we have some signals telling the brain that an object is floating in front of a screen, but other signals telling the brain that the image is on the screen.

To give the illusion of smooth motion you need at least 10 and preferably 15 frames per second into each eye. And the graphics must be synched so the appropriate pair of 3D images are being sent 'at the same time.'
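As a rough worked example of the arithmetic, assume a single renderer draws the left and right images one after the other (that is an assumption for this sketch, not something every system does). The per-eye frame rate then doubles into the number of images that must be produced each second. The function name `stereo_frame_budget` is made up for illustration.

```python
def stereo_frame_budget(per_eye_fps, eyes=2):
    """Return (images_per_second, seconds_per_image) for stereo rendering.

    Assumes one renderer produces the images for all eyes in turn.
    """
    if per_eye_fps <= 0:
        raise ValueError("per_eye_fps must be positive")
    images_per_second = per_eye_fps * eyes
    return images_per_second, 1.0 / images_per_second


# 15 frames per second into each eye, as suggested above.
images, budget = stereo_frame_budget(15)
```

Under that assumption, 15 frames per second per eye means 30 images per second, leaving about a thirtieth of a second to generate each one.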

There is a lot of stuff out there calling itself virtual reality, and what VR is depends on who you ask. VR is still a rather hot buzzword, and as long as it is there will be lots and lots of things that are called VR. 'VR' is itself a rather passe buzzword with 'immersive environments' and 'virtual worlds' becoming more popular (and less trendy) descriptions.

Ivan Sutherland is given a lot of credit for the creation of VR. In the mid 1960s he proposed 'the ultimate display' which he described as follows:

The ultimate display would, of course, be a room within which the computer can control the existence of matter. ... With appropriate programming such a display could literally be the Wonderland into which Alice walked.

This is, of course, a very good description of the Holodeck on 'Star Trek.' While we don't have the ability to generate matter yet, we can control the existence of computer graphics generated matter.

The most important requirement is immersive 3D graphics - that is you should feel surrounded by a computer generated world, not just looking at one from the outside as in most video games.

There are a lot of different ways to do this. The most common VR hardware is a head-mounted display (HMD). There are also BOOM-mounted displays, and 'fish tank' VR systems using computer monitors.

A couple HMDs: A BOOM:

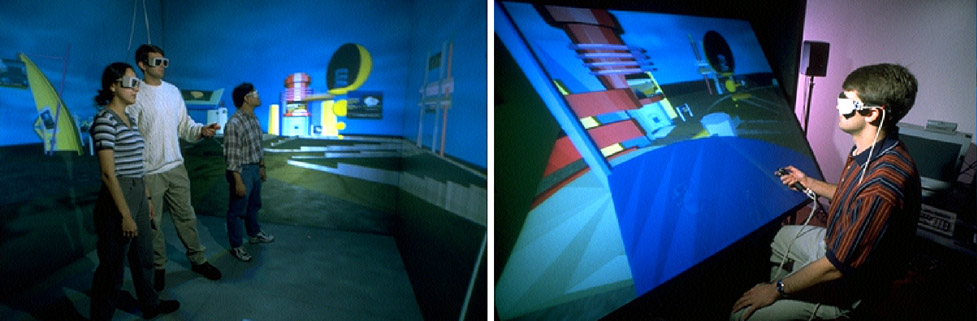

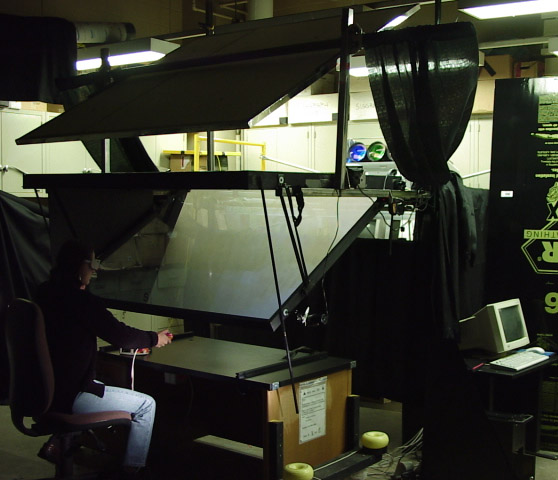

At the Electronic Visualization Lab here on campus two VR displays were developed:

The CAVE (1992) and the ImmersaDesk (1995)

There are currently several manufacturers of projection-based VR displays (like the CAVE and the ImmersaDesk) and other shapes such as wide slightly curved walls, domes, flat tables, etc.

How does this affect the creation of the software?

So far all of the calculations in the class have been done from a single viewpoint. We now need one viewpoint for each eye to give us two images which are slightly different. This is actually pretty easy as all we need to do is move the viewpoint slightly.

You can imagine the current viewpoint we have been using as sitting in between your eyes on your nose. Now we will move the viewpoint slightly to the left to match the location of your left eye and compute the image, then move the viewpoint slightly to the right to match the location of your right eye and compute that image. In general this means each frame takes about twice as long to generate, since the scene is drawn twice.
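The viewpoint shift described above can be sketched as follows. This is a minimal illustration, assuming you already know a vector pointing toward the viewer's right; the function name `eye_positions` and the default eye separation of 0.065 (roughly 6.5 cm, in metres) are assumptions chosen for the example.

```python
import math


def eye_positions(center, right_dir, eye_separation=0.065):
    """Return (left_eye, right_eye) given a center viewpoint.

    center         -- (x, y, z) viewpoint sitting between the eyes
    right_dir      -- vector pointing toward the viewer's right (any length)
    eye_separation -- distance between the eyes, in the same units as center
                      (0.065 is an assumed value, not a measured one)
    """
    length = math.sqrt(sum(c * c for c in right_dir))
    if length == 0:
        raise ValueError("right_dir must be non-zero")
    half = eye_separation / 2.0
    # Half the eye separation along the normalized right vector.
    offset = tuple(half * c / length for c in right_dir)
    left_eye = tuple(p - o for p, o in zip(center, offset))
    right_eye = tuple(p + o for p, o in zip(center, offset))
    return left_eye, right_eye


# Viewer standing at the origin, eyes 1.5 units up, facing down -z.
left_eye, right_eye = eye_positions((0.0, 1.5, 0.0), (1.0, 0.0, 0.0), 0.06)
```

The scene would then be rendered once from `left_eye` and once from `right_eye`, with everything else about the view left unchanged.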

When done correctly the effect is incredibly believable.

Another important feature of VR is naturally tracking where the user is looking - that is if the user wants to turn to the right the user should turn their head to the right - no keyboard, no joystick, just natural motion, and the world should update appropriately.

This can cause a problem if the images are not updated fast enough. If your brain 'knows' that you just turned to the right but your eyes are telling you that you didn't (because the world didn't appear to move) you may get sick. Generating and displaying the graphics in real-time is a requirement.
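As a small sketch of what 'the world should update appropriately' means in practice, the snippet below turns a tracked head yaw angle into a view direction. The convention is an assumption for this example (yaw 0 looks down the negative z axis, positive yaw turns to the right, toward positive x), and `view_direction` is a made-up name; a real tracker reports full position and orientation, not just yaw.

```python
import math


def view_direction(yaw_degrees):
    """Unit view direction in the x-z plane for a given head yaw.

    Assumed convention: yaw 0 looks down -z, and positive yaw turns
    the head to the right (toward +x).
    """
    yaw = math.radians(yaw_degrees)
    return (math.sin(yaw), 0.0, -math.cos(yaw))


# Each frame, the latest tracker reading would be fed in before drawing.
straight_ahead = view_direction(0.0)
turned_right = view_direction(90.0)
```

The important part is that this is recomputed every frame from the most recent tracker reading, so the image follows the head with as little delay as possible.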

The goal is to create 'the illusion of believability.'

While VR has been primarily interested in visual feedback to the user, audio feedback is also very important in creating a believable or useable environment. Other research concentrates on haptic devices to deal with the sensation of touch in virtual environments. There are a couple groups working on smell, but nothing on taste in these environments yet. Primarily the experiences are still based around sight and sound.

Visualization

VR has been very good at architectural walkthroughs, multidimensional visualization, and games.

It is good for visiting places that don't (yet) exist, visiting places that are inhospitable, places that are visual representations of mathematical or multidimensional spaces, medical visualization, and the creation of imaginary spaces.

Architectural walkthroughs and evaluations of designs have been the most successful applications of VR. These could be the interiors of new buildings or designs for new cars. There is some evidence that VR is a good visualization tool. VR may be a good teaching and training tool but there is a lot more work to be done in that area.

Collaboration

Collaboration is currently a very hot area in many fields: how can remote people successfully collaborate on a project? In VR these remote users are typically sharing the same virtual space and cooperating on some task within that space. The major benefit that VR may give here is that the users sharing the space may be able to customize their view of the space to their own background. So while many collaboration systems strive to replicate face-to-face meetings, VR may be 'better than being there.'

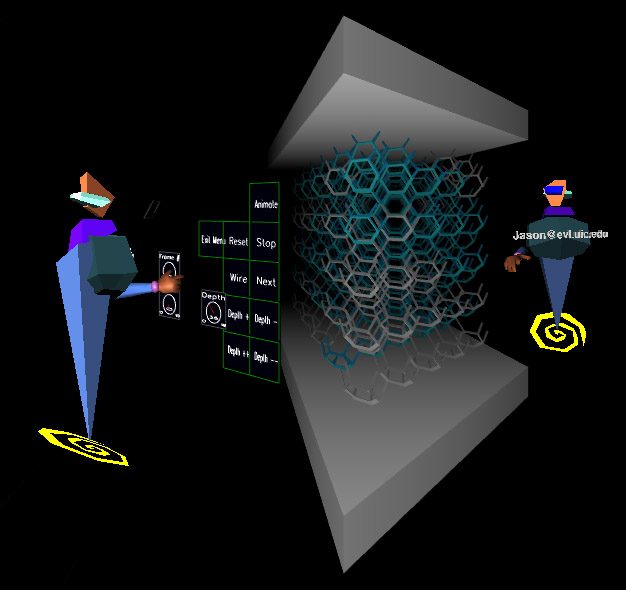

An important part of doing this successfully is establishing and maintaining a sense of 'presence' in the virtual environment. This is commonly done by giving each participant a virtual body in the virtual environment so the other users can see where this user is. These virtual bodies are called 'avatars.' As well as being able to see how other users relate to the virtual space it is important for the various users to be able to communicate directly with each other through live voice and through live video.

Some avatars - Jason interacting with an articulated avatar on the left and Andy interacting with a video avatar on the right


Isolation

VR can give a richer interaction with a virtual world but at the same time it can limit your interaction. Interaction in VR is based on what you wear or carry into the virtual space. It is difficult to use a keyboard in VR. It is difficult to talk on the telephone while immersed in VR. The more immersed you are in the virtual world the less contact you have with the 'real world' and vice versa.

Current Themes at EVL

Virtual Design (done by companies such as GM and Caterpillar)

Scientific Visualization:

Medical Visualization:

Cultural Heritage:

Conceptual Learning:

All of this tries to make use of very high-speed fibre-optic networks.

New devices:

PARIS - head tracking, hand tracking, and haptic feedback

We have also been looking at less expensive devices such as:

Hand tracked Plasma Panel

5 x 3 Tiled Display driven by a 16-node Linux cluster

GeoWall - www.geowall.org

Here are some movies if you would like to see a bit more:

www.evl.uic.edu/aej/QT/AndyFliks.html

If you are interested in seeing the CAVE, usually once per term there is a Master of Fine Arts show in one of our CAVEs. These are advertised in the school paper and are free to the public.

This class has dealt with the basics of how computer graphics are generated and given you some experience in generating interactive computer graphics. If you find this interesting there are several ways to continue:

| CS 588 - Comp. Graphics II | current topics in computer graphics - generally with students presenting papers from the most recent couple years of SIGGRAPH publications, and creating projects based around these ideas. |

There are also several related graduate classes:

| CS 522 - Human-Computer Interaction |

| CS 523 - Multi-Media Systems |

| CS 527 - Computer Animation |

| CS 528 - Virtual Reality |

Special thanks to Maria for loaning me her 488 class notes back in 1996, which were a great guideline in preparing the first version of these notes.

Also, thanks to the members of the Electronic Visualization Lab for sharing their artwork.

The Final Exam

The final exam will be the same basic format as the midterm.

last revision 12/02/03