images from http://www.animationartist.com

Sebastian Grassia's SIGGRAPH 95 Inverse Kinematics notes

Motion Tracking

latest iteration of rotoscoping

What do we want to capture?

- facial movements - e.g. use a human actor to animate the facial features of a cat

- figure motion within a limited space - e.g. a person doing tai chi chuan

- figure motion within an extended space - e.g. small group playing basketball or fighting

Types of Tracking

Currently, optical tracking is the most popular way of doing the work.

How many sensors are needed?

Talent?

What to do after capturing the information?

Current systems allow you to apply the actor's motion to the CG character in real time to see if everything is working correctly (or at least correctly enough that you can move on to the next shot).

Motion Capture

studios charge > $10,000 per hour

- People in the backgrounds in Titanic and in the reissue of the classic Star Wars

- Secondary alien characters in the new Star Wars trilogy

- Main fantasy characters in Shrek and King Kong

- Main human characters in Final Fantasy: The Spirits Within and Final Fantasy VII: Advent Children

For Final Fantasy there was no facial motion capture

For capturing the action the set was 8 by 10 by 2.5 metres with 16 cameras

Some simple examples of motion capture in the CAVE using only two trackers.

Examples from King Kong (2005); before that, Walking with Dinosaurs had some nice examples.

In a couple of weeks we will take a look at EVL's motion capture facility to get you ready to make use of it in the third project.

Kinematics

"The branch of mechanics concerned with the motions of objects without being concerned with the forces that cause the motion."

Forward Kinematics

Given a linked structure with joints and the angles at each joint, forward kinematics gives us the position of the end of the structure (the end effector). There is always exactly one solution, and it is straightforward to compute.
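As a small illustration, here is a sketch of forward kinematics for a planar chain of rigid links. It assumes each joint angle is measured relative to the previous link and that the base joint sits at the origin; the function name `forward_kinematics` is just for illustration. Given the angles, the end effector position follows directly, so there is exactly one answer:

```python
import math

def forward_kinematics(lengths, angles):
    """Return the (x, y) position of the end effector of a planar chain.

    Each angle is relative to the previous link, so the absolute
    direction of link i is the running sum of angles[0..i].
    """
    x = y = 0.0
    heading = 0.0
    for length, angle in zip(lengths, angles):
        heading += angle                 # absolute direction of this link
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y

# Two unit-length links: shoulder at +90 degrees, elbow bent back by -90 degrees.
print(forward_kinematics([1.0, 1.0], [math.pi / 2, -math.pi / 2]))  # (1.0, 1.0)
```

The first link points straight up and the second points along +x, so the end effector lands one unit up and one unit across.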

Unfortunately, from an animation point of view we are often interested in giving a position for the end of the linked structure and having the joints realistically do what they need to do to accomplish that. Examples include animating a person reaching up to grab a particular book off a bookshelf, or bending down to pick up a book off the floor. This brings us to ...

goal directed motion

There may be 0, 1, or more solutions. For example, if I want to grab a particular book on the shelf with my right hand, I might not be able to reach it from where I am currently standing no matter how I move my upper arm, lower arm, and wrist. Or there may be several different upper arm / lower arm / wrist orientations that put my hand (the end effector) on the book.

- overconstrained system - there is no solution

- underconstrained system - there are many solutions

A lot of the research in this area comes from robotics: you have an articulated robot arm and you want it to grab the book on the shelf for you, so how do you set all the appropriate joints to do that? Robots have particular types of joints, humans have particular types of joints, and different animals have different types of joints.

For one or two simple joints there may be a small finite number of analytical solutions, but living creatures are much more complicated.
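For the simplest interesting case, a planar arm with two links, the analytical solutions can be written down directly using the law of cosines. The sketch below returns a list of (shoulder, elbow) angle pairs, which makes the "0, 1, or more solutions" cases concrete. The name `two_link_ik` is hypothetical, and angles are again assumed to be relative to the previous link:

```python
import math

def two_link_ik(l1, l2, x, y):
    """Return every (shoulder, elbow) pair that puts the tip of a planar
    two-link arm at (x, y). The list has 0, 1 or 2 entries.

    Degenerate case ignored: if l1 == l2 and the target is the base itself,
    any shoulder angle works, but only one pair is returned.
    """
    d2 = x * x + y * y
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if c2 < -1.0 or c2 > 1.0:
        return []                       # out of reach: overconstrained
    elbows = [math.acos(c2)]
    if abs(c2) < 1.0:
        elbows.append(-elbows[0])       # elbow-down mirror of the elbow-up pose
    solutions = []
    for theta2 in elbows:
        k1 = l1 + l2 * math.cos(theta2)
        k2 = l2 * math.sin(theta2)
        theta1 = math.atan2(y, x) - math.atan2(k2, k1)
        solutions.append((theta1, theta2))
    return solutions

print(len(two_link_ik(1.0, 1.0, 3.0, 0.0)))  # 0: target out of reach
print(len(two_link_ik(1.0, 1.0, 2.0, 0.0)))  # 1: arm fully stretched
print(len(two_link_ik(1.0, 1.0, 1.0, 1.0)))  # 2: elbow up and elbow down
```

Once a third link is added to reach a 2D point, the mirror-image pair becomes a continuous family of solutions (underconstrained), which is one reason the general case is handled iteratively instead.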

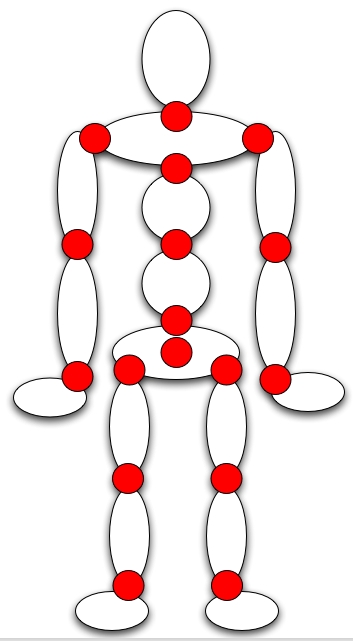

To model a human being correctly, it's important to understand the differing degrees of freedom of the joints in the human body.

http://ovrt.nist.gov/projects/vrml/h-anim/jointInfo.html

Given the number of joints in the hands, they can be particularly complicated. How about something a little simpler:

It's also important that the motion not only be possible but that it match the way humans move, i.e. if we have the choice of moving our hand or our entire arm, we will minimize our effort and move just the hand. While a computer knows exactly where our hand will end up, human beings are constantly making adjustments, so our movement won't be as smooth or predictable as that generated by a computer through simple interpolation.

Here are some samples from a nice set of slides by Bill Baxter at UNC on inverse kinematics.

Basically, what we want to do is incrementally solve the problem. At each time step we work out how to move/rotate each joint to move the end effector towards the goal. The simplification is that for a small enough time period the actual angular motion can be approximated by linear motion.

Given a set of joint angles $\theta = (\theta_1, \theta_2, \theta_3, \ldots)$ and the end effector located at $e = (e_x, e_y, e_z)$, then:

$e = f(\theta)$, so given all of the $\theta$s we can compute $e$.

What we want is, given $e$, to compute a set of $\theta$s (ideally the best one): $\theta = f^{-1}(e)$.

We are going to use the Jacobian, which is a matrix of the partial derivatives of the end effector position with respect to each of the joint angles. In the more complicated case you would also deal with the rotation of the end effector and add rows to the Jacobian for the roll, pitch, and yaw of the end effector.

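Jacobian

$J = \partial e / \partial \theta$, which can be expanded into this matrix:

$$
J = \begin{pmatrix}
\partial e_x / \partial \theta_1 & \partial e_x / \partial \theta_2 & \cdots & \partial e_x / \partial \theta_n \\
\partial e_y / \partial \theta_1 & \partial e_y / \partial \theta_2 & \cdots & \partial e_y / \partial \theta_n \\
\partial e_z / \partial \theta_1 & \partial e_z / \partial \theta_2 & \cdots & \partial e_z / \partial \theta_n
\end{pmatrix}
$$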

It's important that all of the values in the Jacobian are in the same coordinate system.

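Each term in the matrix relates a change in one joint angle to a change in one coordinate of the end effector. Each column gives the velocity of the end effector produced by a change in a given joint angle, i.e. how fast and in which direction the end effector moves per unit of rotation at that joint.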
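One way to see what a column of the Jacobian means is to estimate it numerically: nudge one joint angle by a tiny amount and measure how far the end effector moves. The sketch below does this with central differences for a planar chain (so the $e_z$ row is identically zero and is dropped). It then checks the linear approximation $e(\theta + \Delta\theta) \approx e(\theta) + J\,\Delta\theta$ that the incremental approach relies on. The helper names, the step size `h`, and the sample angles are illustrative choices, not part of the original notes:

```python
import math

def end_effector(lengths, angles):
    """(x, y) of the tip of a planar chain; angles are relative to the previous link."""
    x = y = heading = 0.0
    for length, angle in zip(lengths, angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y

def numerical_jacobian(lengths, angles, h=1e-6):
    """2 x n matrix of central-difference estimates of d(e_x, e_y) / d(theta_i)."""
    columns = []
    for i in range(len(angles)):
        plus, minus = list(angles), list(angles)
        plus[i] += h
        minus[i] -= h
        (xp, yp), (xm, ym) = end_effector(lengths, plus), end_effector(lengths, minus)
        # Column i: end effector displacement per radian of rotation at joint i.
        columns.append(((xp - xm) / (2 * h), (yp - ym) / (2 * h)))
    # Transpose so rows are (x, y) and columns are joints.
    return [[col[row] for col in columns] for row in range(2)]

lengths, angles = [1.0, 1.0], [0.3, 0.5]
J = numerical_jacobian(lengths, angles)
dtheta = [0.01, -0.02]
x0, y0 = end_effector(lengths, angles)
predicted = (x0 + J[0][0] * dtheta[0] + J[0][1] * dtheta[1],
             y0 + J[1][0] * dtheta[0] + J[1][1] * dtheta[1])
actual = end_effector(lengths, [a + d for a, d in zip(angles, dtheta)])
print(predicted, actual)  # close, and the gap shrinks quadratically with dtheta
```

The leftover gap between `predicted` and `actual` is the curvature that the linear model ignores. That is why the step must stay small and the Jacobian must be recomputed after every step.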

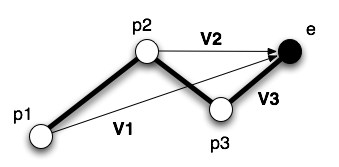

So how do we compute the Jacobian? For a simple example, let's say that the axis of rotation for each of these joints is perpendicular to the page, and declare that $p_1$ is at (0,0,0). Then:

- $n_i$ = unit vector pointing out of the page (the rotation axis of joint $i$)

- $p_i$ = (x, y, z) location of joint $i$

- $V_i = e - p_i$ (the vector from $p_i$ to $e$)

- $J_i$ (the $i$th column of $J$) $= n_i \times V_i$

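$$
J = \begin{pmatrix}
((0,0,1) \times e)_x & ((0,0,1) \times (e - p_2))_x & ((0,0,1) \times (e - p_3))_x \\
((0,0,1) \times e)_y & ((0,0,1) \times (e - p_2))_y & ((0,0,1) \times (e - p_3))_y \\
((0,0,1) \times e)_z & ((0,0,1) \times (e - p_2))_z & ((0,0,1) \times (e - p_3))_z
\end{pmatrix}
$$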
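The cross-product construction is easy to check in code. The sketch below assumes the same planar setup as the notes (every axis is $n_i = (0,0,1)$, $p_1$ at the origin) plus joint angles measured relative to the previous link; the function names are hypothetical. It builds each column as $n_i \times (e - p_i)$. Because every axis is (0,0,1), the z row comes out as zeros:

```python
import math

def joint_positions(lengths, angles):
    """Return ([p_1, ..., p_n], e) for a planar chain lying in the z = 0 plane."""
    points = [(0.0, 0.0, 0.0)]          # p_1 at the origin, as in the notes
    x = y = heading = 0.0
    for length, angle in zip(lengths, angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y, 0.0))
    return points[:-1], points[-1]      # joints, end effector

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def analytic_jacobian(lengths, angles):
    """3 x n Jacobian whose ith column is n_i x (e - p_i)."""
    joints, e = joint_positions(lengths, angles)
    n = (0.0, 0.0, 1.0)                 # every rotation axis points out of the page
    columns = [cross(n, tuple(ec - pc for ec, pc in zip(e, p))) for p in joints]
    return [[col[row] for col in columns] for row in range(3)]

lengths, angles = [1.0, 0.8, 0.5], [0.2, 0.4, -0.3]
for row in analytic_jacobian(lengths, angles):
    print(row)  # the third (z) row is all zeros
```

Rotating joint $i$ swings everything beyond it around $p_i$, so the end effector moves perpendicular to $e - p_i$ at a speed proportional to its distance from $p_i$. That is exactly what the cross product encodes.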

Given the current location of the end effector, and the location we want the end effector to be at we can say:

$$\Delta e = e_{\text{target}} - e_{\text{current}}$$

$$\Delta \theta = J^{-1} \, \Delta e$$

$$\theta_{t+1} = \theta_t + \Delta \theta$$

So we need to compute the inverse Jacobian (or a pseudo-inverse if $J$ is not square or not invertible, which is the common case since $J$ is $3 \times n$). As long as $\Delta e$ is a small step, the system should converge. If the step is too big, the end effector may move away from the goal. Once the joint angles are changed, the Jacobian must be recomputed so that the next time it is used the linear approximation will be accurate.
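Putting the pieces together, a minimal sketch of the iterative Jacobian method for a planar chain might look like the following. Several assumptions are worth flagging. The z row is dropped, since it is all zeros for a planar chain and would make $J J^T$ singular. The pseudo-inverse used is the right pseudo-inverse $J^T (J J^T)^{-1}$ with a small damping term added to the diagonal; this is the damped least squares variant, a common safeguard near singular poses and not something from the notes. `max_step` is a hypothetical cap that keeps each $\Delta e$ small. All function names are illustrative.

```python
import math

def fk_chain(lengths, angles):
    """Joint pivots p_i and end effector e of a planar chain (relative angles)."""
    joints, x, y, heading = [], 0.0, 0.0, 0.0
    for length, angle in zip(lengths, angles):
        joints.append((x, y))
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return joints, (x, y)

def jacobian(lengths, angles):
    """2 x n Jacobian: column i is (0,0,1) x (e - p_i) with the zero z row dropped."""
    joints, (ex, ey) = fk_chain(lengths, angles)
    return [[-(ey - py) for _, py in joints],
            [ex - px for px, _ in joints]]

def pseudo_inverse_step(J, de, damping=1e-3):
    """dtheta = J^T (J J^T + damping^2 I)^-1 de, solving the 2 x 2 system by hand."""
    n = len(J[0])
    a = sum(J[0][k] * J[0][k] for k in range(n)) + damping ** 2
    b = sum(J[0][k] * J[1][k] for k in range(n))
    d = sum(J[1][k] * J[1][k] for k in range(n)) + damping ** 2
    det = a * d - b * b                 # > 0 thanks to the damping term
    w0 = (d * de[0] - b * de[1]) / det
    w1 = (-b * de[0] + a * de[1]) / det
    return [J[0][k] * w0 + J[1][k] * w1 for k in range(n)]

def solve_ik(lengths, angles, target, max_step=0.1, tol=1e-4, max_iters=500):
    angles = list(angles)
    for _ in range(max_iters):
        _, (ex, ey) = fk_chain(lengths, angles)
        de = (target[0] - ex, target[1] - ey)       # delta e = e_target - e_current
        dist = math.hypot(*de)
        if dist < tol:
            break
        if dist > max_step:                         # keep delta e small
            de = (de[0] * max_step / dist, de[1] * max_step / dist)
        dtheta = pseudo_inverse_step(jacobian(lengths, angles), de)  # J recomputed each step
        angles = [t + dt for t, dt in zip(angles, dtheta)]           # theta_{t+1} = theta_t + dtheta
    return angles

angles = solve_ik([1.0, 1.0, 1.0], [0.3, 0.3, 0.3], target=(1.5, 1.0))
print(fk_chain([1.0, 1.0, 1.0], angles)[1])  # approximately (1.5, 1.0)
```

With three links and a 2D target the system is underconstrained. The pseudo-inverse resolves this by picking the smallest joint change that produces the requested $\Delta e$.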

More on the Jacobian at the ubiquitous Wikipedia:

http://en.wikipedia.org/wiki/Jacobian

Here are some more related notes:

http://freespace.virgin.net/hugo.elias/models/m_ik2.htm

Here is a simpler / faster algorithm called cyclic coordinate descent, from A Hitchhiker's Guide to Virtual Reality by McMenemy and Ferguson:

    loopCounter = 0;
    maxLoopCounter = some upper limit;
    while (loopCounter < maxLoopCounter) and (target not reached)
    {
        currentLink = last link in the chain (the pivot connected to the end effector)
        while (currentLink exists)
        {
            Rotate currentLink so that a line from currentLink through the end effector passes through the target
            // that will do the rotation all at once; for smoother motion
            // you probably want to define a max amount of rotation per iteration
            // Choose an axis of rotation that is perpendicular to the plane defined
            // by any two of the linkages meeting at the point of rotation.
            // Do not violate any angle restrictions
            currentLink = next link in the chain (away from the end effector)
        }
        loopCounter += 1;
    }
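The cyclic coordinate descent pseudocode translates fairly directly into Python. This is a sketch for a planar chain only, so the rotation axis is always perpendicular to the page and the "choose an axis" step disappears. The names `ccd` and `chain_points`, and the optional `max_rotation` and `limits` parameters, are illustrative assumptions, not from McMenemy and Ferguson:

```python
import math

def chain_points(lengths, angles):
    """Joint pivots followed by the end effector for a planar chain (relative angles)."""
    points = [(0.0, 0.0)]
    x = y = heading = 0.0
    for length, angle in zip(lengths, angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points

def ccd(lengths, angles, target, max_loops=200, tol=1e-4,
        max_rotation=math.pi, limits=None):
    """Cyclic coordinate descent for a planar chain.

    max_rotation caps the turn per joint per pass (for smoother motion);
    limits is an optional list of (min, max) relative angles, one per joint.
    """
    angles = list(angles)
    for _ in range(max_loops):
        if math.dist(chain_points(lengths, angles)[-1], target) < tol:
            break                                   # target reached
        # Start at the link attached to the end effector and walk toward the base.
        for i in reversed(range(len(angles))):
            points = chain_points(lengths, angles)
            (px, py), (ex, ey) = points[i], points[-1]
            to_effector = math.atan2(ey - py, ex - px)
            to_target = math.atan2(target[1] - py, target[0] - px)
            # Signed turn that lines the effector up with the target, wrapped to [-pi, pi].
            turn = to_target - to_effector
            turn = math.atan2(math.sin(turn), math.cos(turn))
            turn = max(-max_rotation, min(max_rotation, turn))
            angles[i] += turn
            if limits is not None:                  # do not violate angle restrictions
                lo, hi = limits[i]
                angles[i] = max(lo, min(hi, angles[i]))
    return angles

angles = ccd([1.0, 1.0, 1.0], [0.3, 0.3, 0.3], target=(1.5, 1.0))
print(chain_points([1.0, 1.0, 1.0], angles)[-1])  # approximately (1.5, 1.0)
```

Each joint update needs only two `atan2` calls and no matrix inversion, which is where the "simpler / faster" description comes from.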


Class has been cancelled today due to events on the East Coast.
