In the simplest virtual reality world there is an object floating in front of you in 3D that you can look at. Moving your head and/or body allows you to see the object from different points of view.

Adding navigation allows you to fly through a virtual space, in effect moving the virtual space itself via a joystick, bicycle, or treadmill: something beyond moving your own body.
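Here is a minimal sketch of joystick navigation. It assumes a y-up coordinate system in which the user faces -z at heading 0, and a joystick that reports values in [-1, 1]. The names `NavState`, `navigate`, and `world_to_physical` are hypothetical. The user's body stays where it is. The accumulated translation and heading are inverted and applied to every point in the virtual world, which is what "moving the virtual space itself" amounts to.

```python
import math
from dataclasses import dataclass


@dataclass
class NavState:
    """Hypothetical accumulated virtual pose of the user on the ground plane."""
    x: float = 0.0
    z: float = 0.0
    heading: float = 0.0  # radians about the vertical axis; 0 means facing -z


def navigate(state: NavState, joy_x: float, joy_y: float, dt: float,
             speed: float = 2.0, turn_rate: float = 1.0) -> NavState:
    """Advance the virtual pose from one joystick sample (values in [-1, 1])."""
    # Pushing the stick right (joy_x > 0) turns the user to the right.
    heading = state.heading - joy_x * turn_rate * dt
    # Pushing the stick forward (joy_y > 0) travels along the new heading.
    distance = joy_y * speed * dt
    x = state.x - distance * math.sin(heading)
    z = state.z - distance * math.cos(heading)
    return NavState(x, z, heading)


def world_to_physical(state: NavState, wx: float, wz: float) -> tuple:
    """Map a virtual-world point into the physical tracked space.

    This applies the inverse of the user's virtual pose, so as the user
    'flies' forward, the whole world slides toward them instead.
    """
    dx, dz = wx - state.x, wz - state.z
    c, s = math.cos(state.heading), math.sin(state.heading)
    # Rotate by -heading about the vertical axis.
    return (dx * c - dz * s, dx * s + dz * c)
```

A bicycle or treadmill could drive `joy_y` in the same way, for example by scaling pedal cadence or belt speed into the same range. This is an assumption about how such a device would be wired in.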

Here are several controllers that we used in the first 10 years of the CAVE. The common elements on these included a joystick and three buttons (the same as the three buttons on Unix/IRIX computer mice).

Original 'mass-produced' CAVE/ImmersaDesk wand based on the Flock of Birds tracker, 1992-98

New CAVE/ImmersaDesk Wanda based on the Flock of Birds tracker, 1998-2001

InterSense controller, since 2001

More buttons give you more options that the user can control directly, but they may also make it harder to remember what all those buttons do. More buttons also make it harder to tell a novice user what he or she can do. Asking a new user to press the 'left button' or the 'right button' is pretty easy, but once you get to 'left on the d-pad' or 'press the right shoulder button', fewer people will understand you.

Game controllers (and things that look like game controllers) have a big advantage in familiarity for people who play games. They have often gone through substantial user testing, and they are often relatively inexpensive to replace.

Navigation can include walking, swimming, flying, teleporting, etc.

The NPSNET group is interested in large-scale military training, and for ground troops it is more natural to move through the virtual battlefield the way they would move through the real battlefield.

Another important issue in navigation is 'wayfinding': how to help people avoid getting lost in a VR environment. In the real world we use a variety of maps and directions for this purpose. In VR we can let the user bring up a typical 2D map or a set of directions in either readable or audible form ('go left at the yellow tree'), or we can have the system take over moving the user.

The WIM (World in Miniature) project gave the user a small, portable 3D overview of the scene as a map, as shown below, combining the inside-out view you normally have with an outside-in view.
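At its core, a world-in-miniature interface is a uniform scale between the full-size world and the hand-held copy. The sketch below assumes the miniature is only translated and scaled, not rotated. `world_center` is the point of the world that is placed at the hand, and the function names are hypothetical. The inverse mapping lets a user point at a spot in the miniature and have the system move them there.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def world_to_wim(p: Vec3, world_center: Vec3, hand_pos: Vec3, scale: float) -> Vec3:
    """Where a full-size world point appears in the hand-held miniature."""
    return tuple(h + scale * (pi - c) for pi, c, h in zip(p, world_center, hand_pos))


def wim_to_world(q: Vec3, world_center: Vec3, hand_pos: Vec3, scale: float) -> Vec3:
    """Inverse mapping: a point picked in the miniature, back at full size."""
    return tuple(c + (qi - h) / scale for qi, c, h in zip(q, world_center, hand_pos))


# Example: a 1:100 miniature held at the user's hand (hypothetical values).
center, hand, scale = (0.0, 0.0, 0.0), (0.2, 1.0, -0.3), 0.01
tower = (100.0, 0.0, -50.0)
mini = world_to_wim(tower, center, hand, scale)   # roughly (1.2, 1.0, -0.8)
target = wim_to_world(mini, center, hand, scale)  # roughly (100, 0, -50): a teleport target
```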

More interesting is the ability to manipulate objects in the virtual world, or manipulate the virtual world itself.

The user is given a very 'human' interface to VR: the person can move their head and hands and move their body. However, this also limits the user's interaction with the space to whatever they carry around with them. There is also the obvious problem that you are in a virtual space made out of light, so it's not easy to touch, smell, or taste the virtual world, though all of the senses have been used in various projects.

Even if you want to just 'grab' an object there are several issues involved.

A 'natural' way to grab a virtual object is to move your hand so that it touches the virtual object you want to manipulate. You could then press a button to 'grab' the object, or have the object 'jump' into your hand.

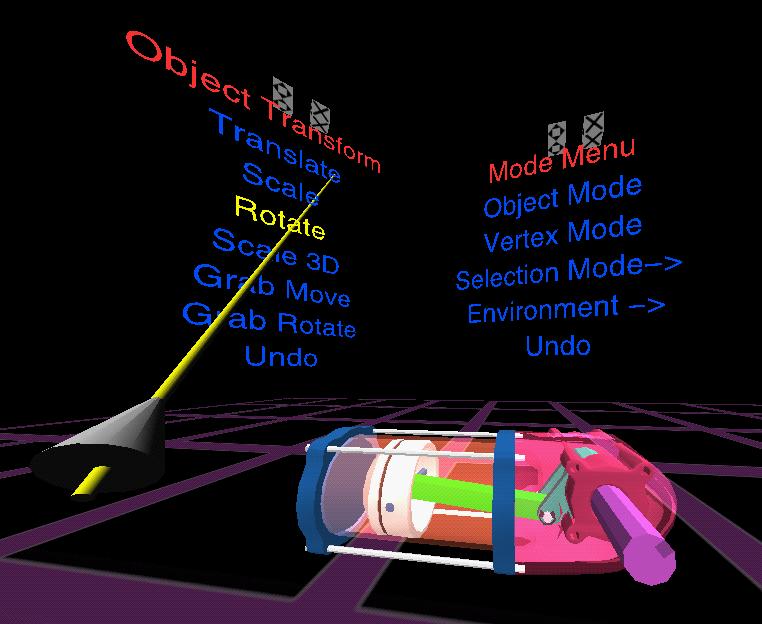

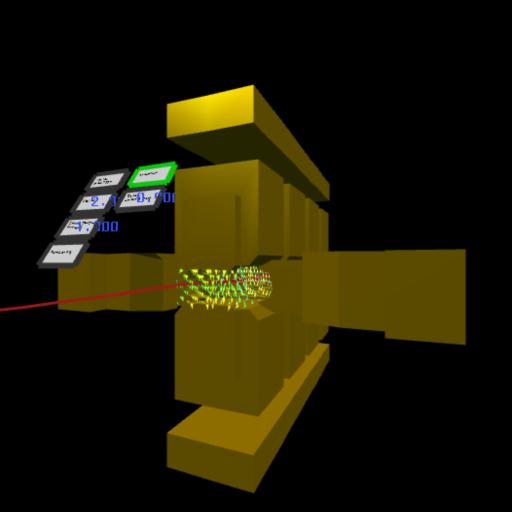
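The touch-and-grab interaction can be sketched with bounding spheres. When the button is pressed, pick up the first object whose sphere contains the hand and remember the hand-to-object offset. The object then moves with the hand until it is released. This simplified sketch tracks positions only and ignores hand rotation. `VObject`, `Hand`, and the function names are hypothetical. Passing `snap=True` gives the 'jump into your hand' variant by discarding the offset.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class VObject:
    name: str
    center: List[float]  # [x, y, z]
    radius: float        # bounding-sphere radius used for the touch test


@dataclass
class Hand:
    held: Optional[VObject] = None
    offset: Tuple[float, ...] = (0.0, 0.0, 0.0)


def _dist(p: Sequence[float], q: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def try_grab(hand: Hand, hand_pos: Sequence[float], objects: List[VObject],
             snap: bool = False) -> Optional[VObject]:
    """Called when the grab button is pressed."""
    for obj in objects:
        if _dist(hand_pos, obj.center) <= obj.radius:  # the hand touches the object
            hand.held = obj
            if snap:
                # On the next update the object's center jumps into the hand.
                hand.offset = (0.0, 0.0, 0.0)
            else:
                # Keep the object where it was relative to the hand.
                hand.offset = tuple(c - h for c, h in zip(obj.center, hand_pos))
            return obj
    return None


def update_held(hand: Hand, hand_pos: Sequence[float]) -> None:
    """Called every frame: the held object follows the tracked hand."""
    if hand.held is not None:
        hand.held.center = [h + o for h, o in zip(hand_pos, hand.offset)]


def release(hand: Hand) -> None:
    hand.held = None
```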

While this kind of motion is very natural, navigating to the object may not be as easy, or the type of display may not encourage you to 'touch' the virtual objects. It can also be impractical to pick up very large objects because they can obscure your field of view. In these cases the user's hand may cast a ray (ray-casting), which allows the user to interact at a distance.
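Ray-casting needs a hit test between the ray from the user's hand and each object. In this minimal sketch, every object is approximated by a bounding sphere, and the tracked hand supplies a ray origin and a pointing direction. `ray_sphere_hit` and `pick` are hypothetical names. The nearest hit along the ray wins, so a nearer object blocks a farther one.

```python
import math
from typing import Optional, Sequence, Tuple

Sphere = Tuple[str, Tuple[float, float, float], float]  # (name, center, radius)


def ray_sphere_hit(origin, direction, center, radius) -> Optional[float]:
    """Distance along a unit-length ray to the first intersection, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0
    if disc < 0:
        return None   # the ray misses the sphere
    root = math.sqrt(disc)
    t = -b - root     # nearer intersection
    if t < 0:
        t = -b + root # the ray starts inside the sphere
    return t if t >= 0 else None


def pick(origin: Sequence[float], direction: Sequence[float],
         objects: Sequence[Sphere]) -> Optional[str]:
    """Return the name of the nearest object hit by the hand's ray."""
    length = math.sqrt(sum(d * d for d in direction))
    unit = [d / length for d in direction]
    best_name, best_t = None, math.inf
    for name, center, radius in objects:
        t = ray_sphere_hit(origin, unit, center, radius)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name
```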

'church chuck'

Menus

One common way of interacting with the virtual world is to take the concept of 2D menus from the desktop into the 3D space of VR. These menus exist as mostly 2D objects in the 3D space.

These menus can be extended from simple buttons to various forms of 2D sliders.

These menus may be fixed to the user: as the user moves through the space, the menus stay in a fixed position relative to the user. Alternatively, the menus may stay at a fixed location in the space.
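The two placements differ only in the frame in which the menu's position is stored. This sketch assumes a y-up world where the user's heading is a rotation about the vertical axis (heading 0 faces -z). `menu_position` and its default offsets are hypothetical. A body-fixed menu stores an offset in the user's frame and is re-placed every frame. A world-fixed menu stores a single world position and ignores the user.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def menu_position(mode: str, user_pos: Vec3, user_heading: float,
                  body_offset: Vec3 = (0.0, -0.3, -0.5),
                  world_anchor: Vec3 = (0.0, 1.5, -2.0)) -> Vec3:
    """World-space position of the menu for this frame.

    body_offset is in the user's frame (x right, y up, -z forward);
    world_anchor is a fixed point in the virtual world.
    """
    if mode == "world":
        return world_anchor
    if mode != "body":
        raise ValueError(f"unknown menu mode: {mode!r}")
    ox, oy, oz = body_offset
    c, s = math.cos(user_heading), math.sin(user_heading)
    # Rotate the offset about the vertical axis by the user's heading,
    # then translate it to the user's position.
    return (user_pos[0] + ox * c + oz * s,
            user_pos[1] + oy,
            user_pos[2] - ox * s + oz * c)
```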

In either case these menus may collide with other objects in the scene. One way to avoid this is to turn z-buffering off for these menus so they are always visible even when they are 'behind' another object.
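A toy per-pixel model of the depth buffer shows the effect of turning z-buffering off. This sketch uses a hypothetical `draw_fragments` function and plain Python lists instead of a GPU. The scene is drawn first with the depth test on. The menu is drawn last with the test off, so every menu fragment overwrites whatever is already there. In OpenGL the corresponding calls would be `glDisable(GL_DEPTH_TEST)` before drawing the menu and `glEnable(GL_DEPTH_TEST)` afterwards. As in OpenGL, disabling the test here also stops depth writes.

```python
import math
from typing import List, Optional, Sequence, Tuple

Fragment = Tuple[int, int, float, str]  # (x, y, depth, color); smaller depth = nearer


def new_buffers(w: int, h: int):
    """Empty color buffer and a depth buffer cleared to 'infinitely far'."""
    return [[None] * w for _ in range(h)], [[math.inf] * w for _ in range(h)]


def draw_fragments(color: List[List[Optional[str]]], depth: List[List[float]],
                   fragments: Sequence[Fragment], depth_test: bool = True) -> None:
    for x, y, z, c in fragments:
        if not depth_test:
            color[y][x] = c   # always drawn; the depth buffer is left untouched
        elif z < depth[y][x]:
            color[y][x] = c   # nearer than what is stored, so it is visible
            depth[y][x] = z
```

Because the menu ignores depth, draw order matters: anything drawn after the menu will cover it.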

Most commonly the user uses their hand to select menu options, either by moving their hand to intersect with the menu (similar to using a mouse on a desktop), or by using ray-casting to point at a menu option from a distance. Another option is to use a head-up display for the menu system.
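Selecting from a distance comes down to intersecting the hand's ray with the plane of the menu and converting the hit point into an item index. This sketch assumes an axis-aligned menu panel at a fixed z, facing the user, with items stacked top to bottom. `MenuPanel` and `select_item` are hypothetical. For direct selection with the hand, the same row calculation could be applied to the hand position itself once it reaches the panel.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class MenuPanel:
    items: List[str]
    z: float            # the panel lies in the plane z = const
    left: float         # x extent of the panel
    right: float
    top: float          # y of the top edge
    item_height: float  # each item is one horizontal strip


def select_item(panel: MenuPanel, origin: Sequence[float],
                direction: Sequence[float]) -> Optional[str]:
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        return None                    # the ray is parallel to the panel
    t = (panel.z - oz) / dz
    if t <= 0:
        return None                    # the panel is behind the hand
    hx, hy = ox + t * dx, oy + t * dy  # hit point on the panel plane
    if not (panel.left <= hx <= panel.right):
        return None
    row = math.floor((panel.top - hy) / panel.item_height)
    if 0 <= row < len(panel.items):
        return panel.items[row]
    return None
```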

Another option is to extend the concept of a 2D menu in a 3D space to that of a true 3D menu, with menu options in multiple dimensions.

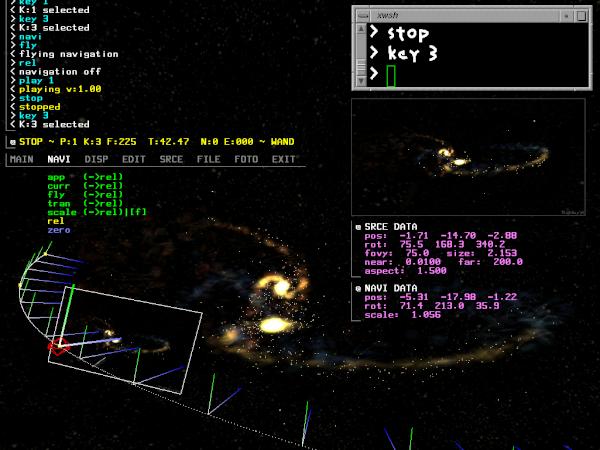

voice

One way to get around the complexity of the menus is to talk to the computer via a voice recognition system. This is a very natural way for people to communicate. These systems are quite robust, even for multiple speakers given a small fixed vocabulary, or a single speaker and a large vocabulary, and they are not very expensive.

In VR applications like the Virtual Director, voice control is the only convenient way to get around a very complicated menuing system.

Ambient microphones add no encumbrance for the user, and small wireless microphones add only a little.

Problems can occur in projection-based systems since there are multiple users in the same place and they are frequently talking to each other. This can make it difficult for the computer to know when you are talking to your friends and when you are talking to the computer. There is a need for a way to turn the system on and off, and often the need for a personal microphone.
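One way to turn the system on and off is to gate the recognizer's output. A command is accepted only while a push-to-talk button is held, or when the utterance begins with a wake word. In this minimal sketch, the speech recognizer itself is assumed to be an external component that hands us plain text. `gate_command` and the wake word 'computer' are hypothetical.

```python
from typing import Optional


def gate_command(utterance: str, button_held: bool,
                 wake_word: str = "computer") -> Optional[str]:
    """Return the command text meant for the system, or None if the speech
    was probably aimed at the other people in the room."""
    words = utterance.lower().split()
    if button_held:
        command = words        # push-to-talk: everything said counts
    elif words and words[0].strip(",.!?") == wake_word:
        command = words[1:]    # e.g. "computer, show the map"
    else:
        return None            # conversation between users
    return " ".join(command) or None
```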

gesture recognition

This also seems like a very natural interface. All gloves track the position of the user's hand. Some simple gloves track contacts (e.g. thumb touching middle finger); others track the extension of the fingers. The former are fairly robust; the latter are still somewhat fragile.
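The difference in robustness shows up in how the two glove types are decoded. Contact gloves report discrete on/off contacts, so recognizing a gesture is just a table lookup. Flex-sensing gloves report continuous bend values that must be thresholded, and suitable thresholds vary from hand to hand. The gesture names, mappings, and threshold values below are hypothetical.

```python
from typing import Dict, FrozenSet, Optional, Set

# Contact glove: each gesture is the set of fingers touching the thumb.
CONTACT_GESTURES: Dict[FrozenSet[str], str] = {
    frozenset({"index"}): "grab",
    frozenset({"middle"}): "menu",
    frozenset({"index", "middle"}): "fly",
}


def decode_contacts(touching_thumb: Set[str]) -> Optional[str]:
    return CONTACT_GESTURES.get(frozenset(touching_thumb))


# Flex glove: bend per finger in [0, 1], where 0 is straight and 1 is fully bent.
BENT, STRAIGHT = 0.7, 0.3  # assumed calibration thresholds


def decode_flex(bend: Dict[str, float]) -> Optional[str]:
    others = ("middle", "ring", "pinky")
    if bend["index"] > BENT and all(bend[f] > BENT for f in others):
        return "fist"
    if bend["index"] < STRAIGHT and all(bend[f] > BENT for f in others):
        return "point"
    return None  # in-between values give no reliable answer
```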

The possibilities with gloves improve if you have two of them. Multigen's SmartScene is a good example, using two Fakespace Pinchgloves for manipulation.
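One common two-handed technique scales the world by how far apart the hands have moved since both gloves pinched. The scale factor is the current hand separation divided by the separation at the start of the gesture, applied about the midpoint between the hands. This is a general sketch, not SmartScene's actual implementation. The function names are hypothetical, and positions are plain (x, y, z) tuples.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dist(p: Sequence[float], q: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def two_hand_scale(left0: Vec3, right0: Vec3, left1: Vec3, right1: Vec3) -> float:
    """Scale factor: hand separation now divided by separation at pinch start."""
    start = _dist(left0, right0)
    if start == 0.0:
        return 1.0  # degenerate start; leave the world unscaled
    return _dist(left1, right1) / start


def scale_about(point: Vec3, pivot: Vec3, factor: float) -> Vec3:
    """Scale a world point about a pivot, such as the midpoint between the hands."""
    return tuple(p + factor * (x - p) for x, p in zip(point, pivot))
```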

Full-body tracking with a body suit gives you more opportunities for gesture recognition.

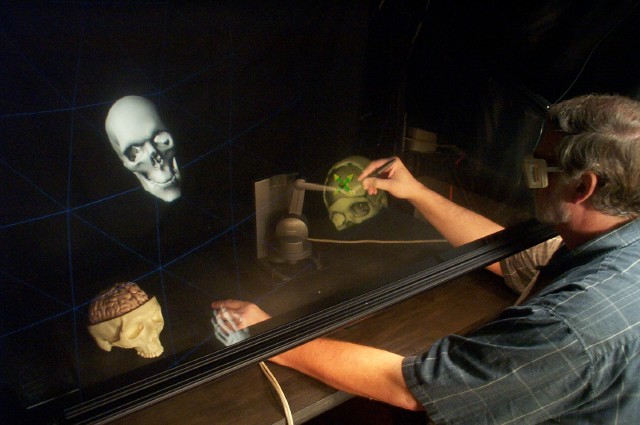

Haptics

Several different models of PHANToMs

A PHANToM in use as part of a cranial implant modelling application

The PHANToM gives 6 degrees of freedom as input (translation in XYZ plus roll, pitch, and yaw) and 3 degrees of freedom as output (force along X, Y, and Z).

You can use the PHANToM by holding a stylus at the end of its arm like a pen, or by putting your finger into a thimble at the end of its arm.

The 3D workspace ranges from 5 x 7 x 10 inches to 16 x 23 x 33 inches, depending on the model.
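Because the PHANToM outputs only forces along XYZ, one simple way to make a virtual surface feel solid is penalty-based (spring) rendering. When the probe tip penetrates an object, the device pushes back with a force proportional to the penetration depth, along the surface normal. The sketch below does this for a sphere, assuming positions in metres and a hypothetical stiffness of 500 N/m. A real haptic loop typically runs at around 1 kHz, reading the tip position and writing a force on each cycle. `sphere_force` is a hypothetical name and is not part of any PHANToM API.

```python
import math
from typing import Sequence, Tuple


def sphere_force(tip: Sequence[float], center: Sequence[float], radius: float,
                 stiffness: float = 500.0) -> Tuple[float, ...]:
    """Spring force (newtons) pushing the probe tip out of a solid sphere."""
    d = [t - c for t, c in zip(tip, center)]
    dist = math.sqrt(sum(v * v for v in d))
    if dist >= radius or dist == 0.0:
        # Outside the sphere there is no contact. At the exact center the
        # normal is undefined, so output no force rather than a bogus one.
        return (0.0, 0.0, 0.0)
    penetration = radius - dist
    # Hooke's law along the outward surface normal d / dist.
    return tuple(stiffness * penetration * v / dist for v in d)
```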

Other issues

We often compensate for the lack of one sense in VR by using another. For example we can use a sound to replace the sense of touch, or a visual effect to replace the lack of audio.

In projection-based systems you can carry things with you, for example a PDA that gives you handwriting recognition, or a hand-held physical menu system.

In fish tank VR you have access to everything on your desk, which can be very important.

Hiding the interface in the environment

Depending on the virtual environment, it may be preferable to 'hide' the interface in the environment itself to avoid the use of menus.

Before next class please read the following paper

Project 1 Presentations

For the Tuesday after the Project 1 presentations please read the following paper: