Networked Scene Graph (NSG)

Overview

The goal is to provide a mechanism

that allows existing applications to run across a cluster of workstations. Each

node on the cluster runs its own copy of the application and data, rendering

only a specific view of the application. One of the nodes is designated the

master and the rest are slaves. The master handles all user interaction and

propagates its scene graph changes to the slaves through the CAVERN dbserver.

The NSG API defines the protocol for this uni-directional communication. The

core of the API is kept independent of any particular scene-graph API. The communication

consists of command strings sent across the network. E.g., a transformation

command string has the form "NSGTRANSFORM [nodeName] [matrix]". Other

commands, such as file loading, switches, and sequences, could be added. Presently,

only the transformation and CAVE Navigation commands have been implemented.

I have used Limbo to test this API.
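The command-string format described above can be illustrated with a small round-trip sketch. This is not the NSG source: `TransformMessage`, `encodeTransform`, and `decodeTransform` are hypothetical names, and the sketch assumes that fields are separated by whitespace, that node names contain no whitespace, and that the matrix is written as 16 plain decimal floats.

```cpp
#include <array>
#include <cassert>
#include <limits>
#include <optional>
#include <sstream>
#include <string>

// Hypothetical helper types for illustration; not part of the NSG sources.
using Matrix16 = std::array<float, 16>;

struct TransformMessage {
    std::string nodeName;
    Matrix16 matrix;
};

// Build "NSGTRANSFORM <nodeName> <m0> ... <m15>".
// Assumption: node names contain no spaces, so whitespace can separate fields.
std::string encodeTransform(const TransformMessage& msg) {
    assert(msg.nodeName.find(' ') == std::string::npos);
    std::ostringstream out;
    // Enough digits for a float to survive the text round trip.
    out.precision(std::numeric_limits<float>::max_digits10);
    out << "NSGTRANSFORM " << msg.nodeName;
    for (float value : msg.matrix) out << ' ' << value;
    return out.str();
}

// Parse a command string. Returns std::nullopt if the leading token is not
// NSGTRANSFORM or if the node name or any of the 16 floats is missing.
std::optional<TransformMessage> decodeTransform(const std::string& line) {
    std::istringstream in(line);
    std::string token;
    TransformMessage msg;
    if (!(in >> token) || token != "NSGTRANSFORM") return std::nullopt;
    if (!(in >> msg.nodeName)) return std::nullopt;
    for (float& value : msg.matrix) {
        if (!(in >> value)) return std::nullopt;
    }
    return msg;
}
```

A master would call something like `encodeTransform` before placing the string on the network, and each slave would call the matching decode step on receipt. Requires C++17 for `std::optional`.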

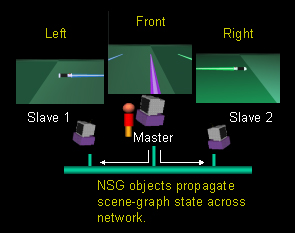

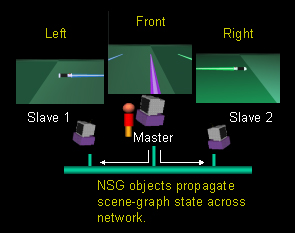

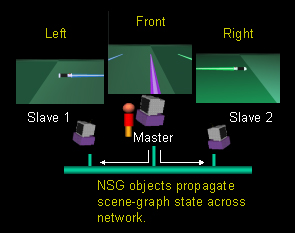

The diagram below shows a snapshot of the execution. In this example, the Master

renders the front wall while Slaves 1 and 2 render the left and right walls

respectively. These are specified using the -wall command-line option defined

in CAVElib.

Class Diagrams

Here are the class diagram and descriptions

of the classes implemented.

- NsgCmd

- Abstract class that encapsulates

a command. Each command has an identifier token string and methods to input/output

and execute itself. So, for each command (transform, switch, etc.) we define,

we need to inherit from this class and override these virtual functions.

- NsgTransformCmd

- Encapsulates a transformation

command. Contains the transformation as a 16-float array and the associated

node identifier. It also overrides the base class functions to read/write/execute

itself.

- NsgCAVENavCmd

- This is the command responsible

for sharing CAVE Navigation transform between the nodes. The navigation is

stored as a 16-float array. This is just a temporary hack. The distributed

CAVElib should be used to share tracking/navigation.

- NsgCmdRegistry

- A Singleton object with a map

associating a command identifier with its implementation of NsgCmd. E.g., the

command "NSGTRANSFORM" is mapped to NsgTransformCmd. The registry

has functions for looking up and registering commands. NsgCmd objects, on creation,

register themselves automatically with the registry.

- NsgCmdInterpreter

- Inherited from db Shared state.

Observes a CAVERNdb_client and is triggered by received network commands.

It uses the first token of the received string to look up the respective NsgCmd

in the registry, and it then makes the command execute. It is also responsible

for placing commands on the network.

- NsgInterface

- Abstract class that has functions

that execute each NsgCmd. Every NsgCmd has an instance of this class. When

the NsgCmd is executed by the NsgCmdInterpreter, it calls the respective functions

in the NsgInterface. E.g., the NsgTransformCmd would call the doTransform function.

Your application should implement this NsgInterface and override all the commands,

if not using NsgPfInterface.

- NsgPfInterface

- While all the classes so far have

been independent of any scene-graph API, this is the class that serves as

a bridge between a Performer app and NSG. Using this class in your app shields

you from the rest of the NSG classes. You need to attach the pfNodes that

you want to share and call process every frame. Any updates to those nodes

will be synchronized.
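The way the registry and interpreter described above fit together can be sketched as follows. The classes here (`Cmd`, `CmdRegistry`, `TransformCmd`, `dispatch`) are simplified stand-ins with assumed signatures, not the actual NSG classes. In particular, this sketch registers commands explicitly rather than on construction, and `execute` only counts calls where the real command would call into an NsgInterface (e.g. doTransform).

```cpp
#include <cassert>
#include <istream>
#include <map>
#include <memory>
#include <sstream>
#include <string>
#include <utility>

// Simplified stand-in for NsgCmd; the method names are assumptions.
class Cmd {
public:
    virtual ~Cmd() = default;
    virtual std::string token() const = 0;    // identifier, e.g. "NSGTRANSFORM"
    virtual bool read(std::istream& in) = 0;  // parse the arguments after the token
    virtual void execute() = 0;
};

// Singleton map from identifier token to command implementation.
class CmdRegistry {
public:
    static CmdRegistry& instance() {
        static CmdRegistry registry;
        return registry;
    }
    void add(std::unique_ptr<Cmd> cmd) {
        std::string key = cmd->token();  // read the key before moving the pointer
        commands_[key] = std::move(cmd);
    }
    Cmd* lookup(const std::string& token) const {
        auto it = commands_.find(token);
        return it == commands_.end() ? nullptr : it->second.get();
    }
private:
    CmdRegistry() = default;
    std::map<std::string, std::unique_ptr<Cmd>> commands_;
};

// Stand-in for NsgTransformCmd: a node name followed by 16 floats.
class TransformCmd : public Cmd {
public:
    std::string token() const override { return "NSGTRANSFORM"; }
    bool read(std::istream& in) override {
        if (!(in >> nodeName)) return false;
        for (float& value : matrix) {
            if (!(in >> value)) return false;
        }
        return true;
    }
    void execute() override { ++executed; }  // placeholder for the interface call
    std::string nodeName;
    float matrix[16] = {};
    int executed = 0;
};

// Interpreter step: the first token selects the command, the rest is its payload.
bool dispatch(const std::string& line) {
    std::istringstream in(line);
    std::string token;
    if (!(in >> token)) return false;
    Cmd* cmd = CmdRegistry::instance().lookup(token);
    if (cmd == nullptr || !cmd->read(in)) return false;
    cmd->execute();
    return true;
}
```

Keeping the lookup keyed on the token string is what lets new command types be added without touching the interpreter: a new subclass only needs to be registered under its own token.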

API Docs

Limbo example

This section explains the changes

made to Limbo in order to use NSG. One instance acts as the master while the rest are slaves (which

is the default). To act as master, the line "MASTER" should be added

in the .lbo config file.

CAVERN_perfLimbo_c has a new member, master, which is true if this application

instance acts as the master and false if it is a slave. This is read from the config file.
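As a rough illustration of how the master flag might be derived from the config file, the sketch below scans a stream for a line consisting only of the keyword MASTER. The function name `configSelectsMaster` is hypothetical, and the sketch assumes a line-oriented config format. The actual .lbo parser in Limbo may work differently.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>

// Returns true if some line of the config consists solely of the keyword
// MASTER (surrounding whitespace is ignored). Absence of the line means the
// instance runs as a slave, matching the default described above.
// Assumption: the config file is line-oriented.
bool configSelectsMaster(std::istream& config) {
    std::string line;
    while (std::getline(config, line)) {
        std::istringstream fields(line);
        std::string first;
        std::string extra;
        if ((fields >> first) && first == "MASTER" && !(fields >> extra)) {
            return true;
        }
    }
    return false;
}
```

The result would be stored in the master member once at startup, for example by passing an `std::ifstream` opened on the config file.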

This general-purpose API obviates

the need for pfNetDCS. pfDCS should be used instead. You need to create an instance

of NsgPfInterface as shown below.

limboSaber_c.hxx

|

class limboSaber_c : public CAVERN_perfLimbo_c

{

<--snipped-->

bool master;

NsgPfInterface* nsg;

};

|

The nodes that need to be shared

need to be registered with nsg. In the case of Limbo, the three sabers, the wand

DCS and the CAVE Nav DCS are attached. At every frame, the process function

for NsgPfInterface is called. Since only the master handles any user-interaction,

that should also be checked for in the limboSaber_c::process.

limboSaber_c.cxx

|

void limboSaber_c::init( int argc, char** argv )

{

<-- snipped -->

nsg = new NsgPfInterface(modelClient,"Limbodb","nsg");

nsg->attachNode(saber1);

nsg->attachNode(saber2);

nsg->attachNode(saber3);

nsg->attachNode(mainWandDCS);

nsg->attachCAVENav(mainNavDCS);

}

void limboSaber_c::process()

{

// Give it a few cycles to stop messages from queueing up.

for(int i = 0; i < 5; i++) modelClient->process();

if (master) {

CAVERN_perfLimbo_c::process();

picker->process();

nsg->process();

}

}

|

TODOs

- Instead of having to add all the

nodes to be shared, only the scene should be registered. On process, NsgPfInterface

should traverse the scene and depending on the pfNode types use the appropriate

NsgCmds for transmission across the network. The nomenclature

we discussed earlier will be used to identify the nodes.

- Currently, I use the CAVElib option

-wall to specify the wall each instance should render. It should be possible to specify

the number of rendering nodes and arbitrary wall/viewport dimensions for each node

in the config file.

- Integration with distributed CAVElib

to share tracking and navigation.

- Address collaboration between

applications and late joiners.

Download

Coming soon...

Shalini 6/11/2001