PhD Prelim Announcement: Visual Computing Design for Explainable, Spatially-aware Machine Learning

December 6th, 2023

Categories: Applications, Software, Visualization, Deep Learning, Machine Learning, Data Science

About

PhD Student: Andrew Wentzel

Committee members:

Dr. G. Elisabeta Marai (chair)

Dr. Xinhua Zhang

Dr. Fabio Miranda

Dr. Guadalupe Canahuate (University of Iowa)

Dr. Renata Raidou (Vienna University of Technology)

Date and Time: Wednesday, December 6th, 2023, at 10 am CST

Location: ERF 2068

Zoom Details:

Join Zoom Meeting

Meeting ID: 834 9903 7419

Passcode: L1fCBhu9

One tap mobile

+13126266799,,83499037419#,,,,*43232065# US (Chicago)

+13092053325,,83499037419#,,,,*43232065# US

Abstract:

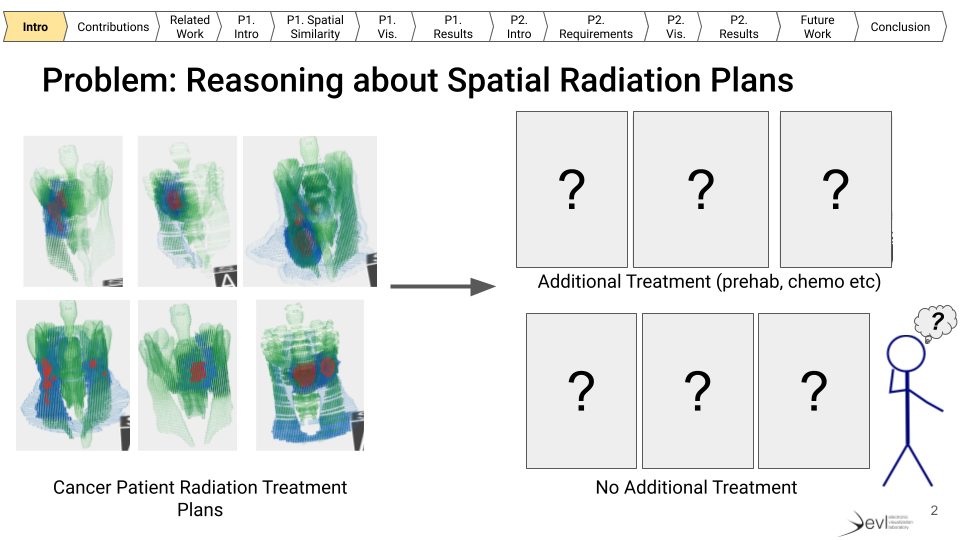

Research in visual computing (VC) focuses on integrating visual representations of datasets with computing systems, thus leveraging the complementary abilities of humans and machines. The resulting VC systems may include machine learning (ML) techniques, which can process vast amounts of data. However, creating VC+ML systems that operate on spatio-temporal data remains a difficult and largely unexplored problem. Challenges in this area include: capturing domain characterizations for spatial VC+ML problems, in particular when serving both ML model builders and ML model clients; creating explainable VC+ML models that operate on spatial data, including measures that capture spatial structure similarity; designing visual encodings for ML models that use spatial information and that enhance modelers' and clients' understanding of the model; and measuring the effect of deploying such VC+ML systems in practice.

Drawing on several multi-year collaborations, I document instances where spatial and explainable VC+ML solutions are required, and the role that spatiality plays in each. From these instances, I extract and describe design requirements for explainable spatial VC+ML systems, and I identify necessary VC advances, including spatially-aware similarity measures and spatial visual explainability. I construct spatially-aware similarity measures to support ML problems in precision oncology, patient risk stratification in data mining, and explainable interactive regression modeling for hypothesis testing in social science. I introduce novel encodings for three-dimensional and geospatial data and explore how to integrate such encodings with ML model explanations. I implement and evaluate several VC+ML systems, along with the resulting models, that are explicitly designed to integrate domain-specific structural spatial information with unsupervised ML. Along the way, I also articulate design lessons related to model actionability. In my proposed work, I extend this focus to spatial VC+ML explainability in a supervised deep-learning setting.