Fully Test-time Adaptation for Object Detection

June 18th, 2024

Categories: Software, Deep Learning, Machine Learning, Data Science, Computer Vision

Authors

Ruan, X., Tang, W.

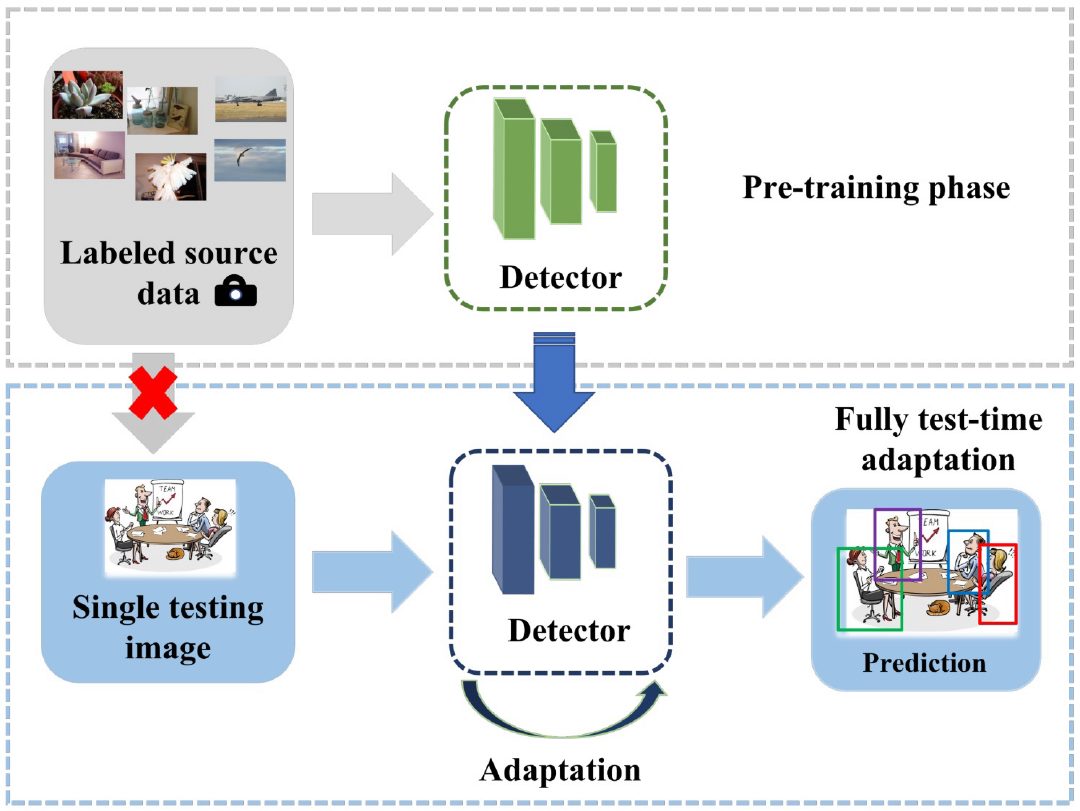

Although object detection performance on standard benchmarks has improved drastically in the last decade, current object detectors are often vulnerable to domain shift between the training data and test images. Domain adaptation techniques have been developed to adapt an object detector trained in a source domain to a target domain. However, they assume that the target domain is known and fixed and that a target dataset is available for training, which cannot be satisfied in many real-world applications. To close this gap, this paper investigates fully test-time adaptation for object detection: a trained object detector is updated on a single test image before making a prediction, without access to the training data. Through a diagnostic study of a baseline self-training framework, we show that a major challenge of this task is the unreliability of pseudo labels caused by domain shift. We then propose a simple yet effective method, termed the IoU Filter, to address this challenge. It consists of two new IoU-based indicators, both of which are complementary to the detection confidence. Experimental results on five datasets demonstrate that our approach can effectively adapt a trained detector to various kinds of domain shift at test time and bring substantial performance gains. Code is available at https://github.com/XiaoqianRuan1/IoU-filter.
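The abstract does not define the IoU Filter's two indicators, so the sketch below is NOT the authors' method. It is a hypothetical illustration of the general idea of pairing a detection-confidence threshold with an IoU-based check when selecting pseudo labels for self-training. Here a confident box is kept only if it overlaps some box in a second set of predictions for the same image. The names `filter_pseudo_labels`, `conf_thresh`, `iou_thresh`, and the "reference" predictions are assumptions made for illustration; see the linked repository for the actual implementation.

```python
from typing import List, Tuple

# A box is (x1, y1, x2, y2); a detection is (box, confidence score).
Box = Tuple[float, float, float, float]
Detection = Tuple[Box, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_pseudo_labels(
    detections: List[Detection],
    reference: List[Detection],
    conf_thresh: float = 0.5,  # hypothetical confidence threshold
    iou_thresh: float = 0.5,   # hypothetical IoU-consistency threshold
) -> List[Detection]:
    """Hypothetical filter: keep detections that are confident AND
    overlap some box in `reference` (a second set of predictions for
    the same image, e.g. from an augmented view; an assumption here)."""
    kept = []
    for box, score in detections:
        if score < conf_thresh:
            continue  # plain confidence filter
        best = max((iou(box, ref_box) for ref_box, _ in reference), default=0.0)
        if best >= iou_thresh:
            kept.append((box, score))  # box is consistent across predictions
    return kept


dets = [((0, 0, 10, 10), 0.9), ((20, 20, 30, 30), 0.8), ((40, 40, 50, 50), 0.3)]
ref = [((1, 1, 10, 10), 0.85)]
print(filter_pseudo_labels(dets, ref))  # → [((0, 0, 10, 10), 0.9)]
```

In this toy input the second box is confident (0.8) but has no overlapping counterpart, so an IoU-based check rejects a pseudo label that a confidence threshold alone would keep. That is the sense in which an IoU-based signal can complement detection confidence.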

Funding: The COMPaaS DLV project (NSF award CNS-1828265)

Citation

Ruan, X., Tang, W., Fully Test-time Adaptation for Object Detection, International Journal of Computer Vision (2024): 1-17, pp. 1038-1047, June 18th, 2024. https://cvpr.thecvf.com/virtual/2024/workshop/23588