Performance Characterization and Tuning of Non-uniform All-to-all Data Exchanges (poster)

May 8th, 2025

Categories: Applications, Supercomputing, Data Science, High Performance Computing

Authors

Qi, K., Fan, K., Kumar, S.

About

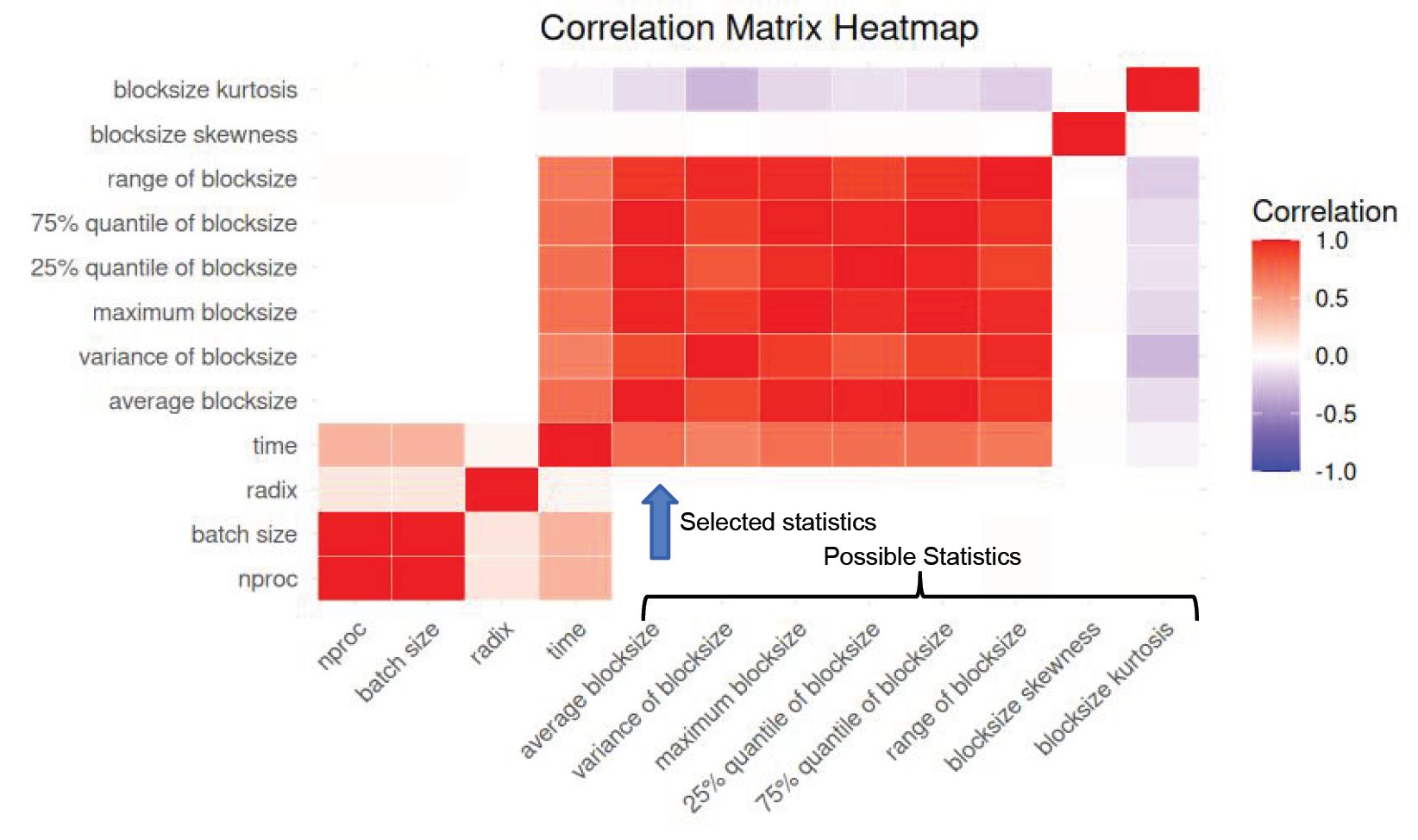

Non-uniform MPI_Alltoallv communication is critical in many high-performance computing (HPC) applications where data exchange patterns vary significantly between processes. However, existing MPI implementations rely on fixed heuristics that are not well suited to dynamic, irregular workloads, often leading to suboptimal performance. Furthermore, in certain scenarios, customized non-uniform all-to-all functions outperform the standard MPI_Alltoallv. A framework that accurately selects the optimal algorithm would be extremely beneficial, but building one is challenging because of its potential complexity.

Existing research applies data-driven approaches to tuning MPI functions, but most of it focuses on functions such as MPI_Scatter, MPI_Reduce, MPI_Gather, and the uniform MPI_Alltoall. Non-uniform all-to-all remains an underdeveloped area. One challenge in developing a tuning framework for non-uniform all-to-all is that the block sizes exchanged between processes are not fixed, which adds significant complexity to the tuning workflow.
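To make the point about non-fixed block sizes concrete, the following is a minimal single-process Python sketch. It is not taken from the poster and does not call MPI; it only mimics the bookkeeping that MPI_Alltoallv requires, where every rank supplies per-destination counts and displacements instead of one shared block size. The function names `displacements` and `simulate_alltoallv`, and the example count matrix, are hypothetical and chosen purely for illustration.

```python
from itertools import accumulate


def displacements(counts):
    """Exclusive prefix sum: start offset of each block in a packed buffer."""
    return [0] + list(accumulate(counts))[:-1]


def simulate_alltoallv(send_counts):
    """Simulate a non-uniform all-to-all exchange in one process (no MPI).

    send_counts[i][j] is the number of elements rank i sends to rank j.
    Returns (recv_counts, recv_bufs), where recv_bufs[j] is the packed
    receive buffer of rank j, ordered by source rank.
    """
    nprocs = len(send_counts)

    # Each rank packs its outgoing blocks back to back.
    # Elements are tagged (source, destination, index) so they can be traced.
    send_bufs = [
        [(i, j, k) for j in range(nprocs) for k in range(send_counts[i][j])]
        for i in range(nprocs)
    ]

    # What rank j receives from rank i is what rank i sends to rank j,
    # so the receive counts are the transpose of the send counts.
    recv_counts = [
        [send_counts[i][j] for i in range(nprocs)] for j in range(nprocs)
    ]

    recv_bufs = []
    for j in range(nprocs):
        rdispls = displacements(recv_counts[j])
        buf = [None] * sum(recv_counts[j])
        for i in range(nprocs):
            sdispls = displacements(send_counts[i])
            block = send_bufs[i][sdispls[j]:sdispls[j] + send_counts[i][j]]
            buf[rdispls[i]:rdispls[i] + len(block)] = block
        recv_bufs.append(buf)
    return recv_counts, recv_bufs


if __name__ == "__main__":
    # Hypothetical, deliberately irregular workload for three ranks.
    counts = [[1, 3, 0], [2, 0, 5], [4, 1, 1]]
    recv_counts, recv_bufs = simulate_alltoallv(counts)
    for rank, buf in enumerate(recv_bufs):
        print(rank, recv_counts[rank], len(buf))
```

Because every entry of the count matrix can differ, a tuning framework cannot describe a call by a single message size as it could for the uniform MPI_Alltoall; under this sketch's assumptions, the whole matrix (or some summary of it) becomes part of the input that has to be characterized.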

Resources

URL

Citation

Qi, K., Fan, K., Kumar, S., Performance Characterization and Tuning of Non-uniform All-to-all Data Exchanges (poster), The 12th Greater Chicago Area Systems Research Workshop (GCASR), Chicago, IL, May 8th, 2025. https://gcasr.org/2025/posters