EAGER: Collaborative Research: Articulate: Augmenting Data Visualization With Natural Language Interaction

July 25th, 2014

Categories: Software

About

UIC Computer Science faculty members Barbara Di Eugenio (PI) and Andy Johnson (co-PI), together with Adjunct CS Professor Leland Wilkinson (co-PI), have been awarded a new one-year NSF grant (August 2014 to July 2015) entitled “EAGER: Collaborative Research: Articulate: Augmenting Data Visualization With Natural Language Interaction.”

The overall project is a collaboration with Jason Leigh at the University of Hawaii, and the total funding is $300,000.

Abstract:

Nearly one third of the human brain is devoted to processing visual information. Vision is the dominant sense for the acquisition of information from our everyday world. It is therefore no surprise that visualization, even in its simplest forms, remains the most effective means for converting large volumes of raw data into insight, a process that can support scientific discovery. However, a key challenge hindering scientific users from adopting the latest visualization tools and techniques is the steep learning curve that must be overcome in order to use them. The tendency then is to resort to the simplest tools, such as bar charts and line graphs, even though they may lack the expressive power necessary to bring scientific data into focus.

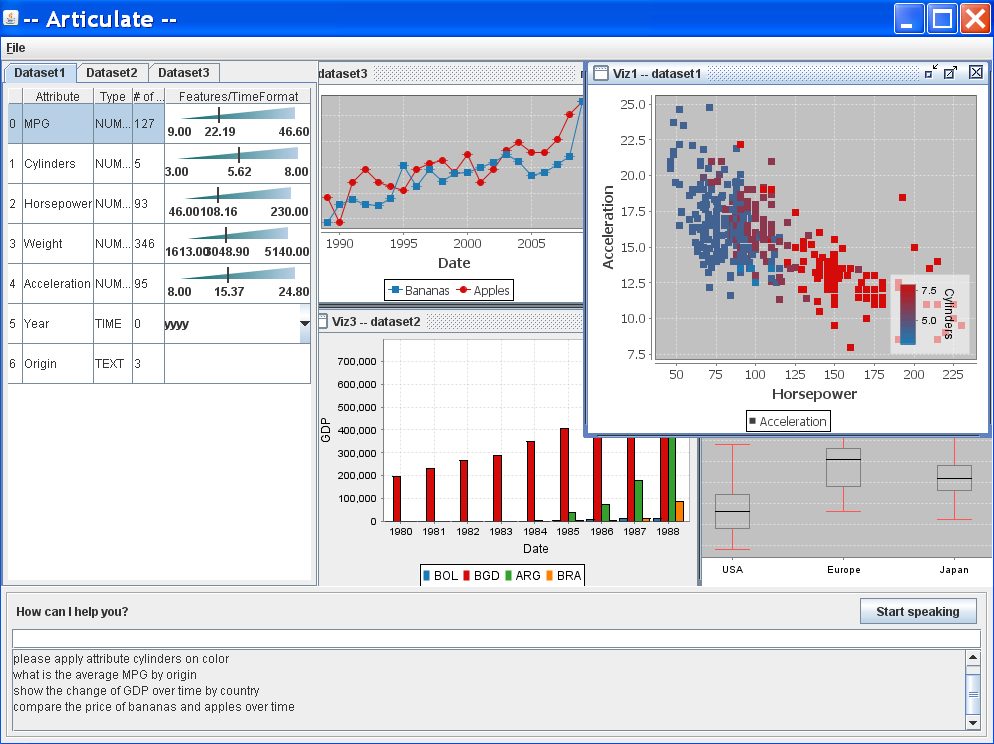

The notion that scientists would ideally like to simply speak with a computer to ask questions about their data, and have the computer automatically generate visualizations that answer their queries, has been well known since at least the NSF 2007 report “Enabling Science Discoveries through Visual Exploration.” Scientists are still unable to do so, and this is the motivation for the current project, which involves a collaboration among researchers at two institutions. The PIs’ ultimate goal is to implement a Virtual Visualization Expert to translate the language of science into the language of visualization. To demonstrate that the concept is indeed viable, the PIs previously developed and evaluated a small prototype. The results supported their argument that relieving users of the burden of learning a complex interface enables them to focus on articulating better scientific questions.

Given this initial success, the focus of this exploratory research is to establish the foundations of a more generalizable approach that can encompass techniques used in scientific visualization. To this end, the PIs will research the steps needed for mapping natural language requests, which may be accompanied by gestures, into meaningful visualizations and for enabling incremental creation and modification of visualizations. They will develop innovative models to understand the intent of the user and the objects the user is referring to, and they will explore how best to design user interfaces for creating and modifying visualizations using language and direct manipulation. The PIs’ initial study showed that all these capabilities are crucial to enabling users to make the best use of a dialogic interface for data visualization. Although project outcomes will be geared in the short term to serving the scientific community, the techniques should be applicable more broadly to consumers of information, such as citizen scientists, public policy decision makers, and students.