EVL of UIC Computer Science Department receives new $3 million grant from NSF

September 16th, 2014

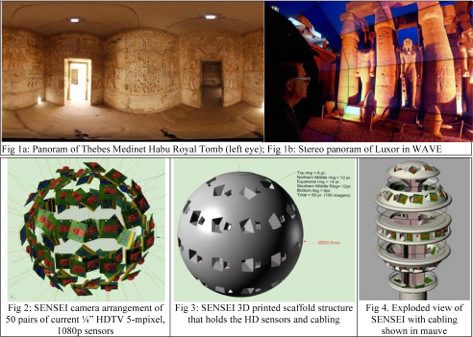

UIC received a $3 million award from the National Science Foundation, titled “Development of the Sensor Environment Imaging (SENSEI) Instrument,” for the period October 1, 2014 to September 30, 2017. With it, UIC will build the SENSEI (SENSor Environment Imaging) instrument, which will capture still and motion 3D full-sphere omnidirectional stereoscopic video and images of real-world scenes, to be viewed in collaboration-enabled, nationally networked 3D virtual-reality systems.

Congratulations to PI Maxine Brown, Director of the Electronic Visualization Lab (EVL), and to Co-PIs Robert V. Kenyon, Andrew E. Johnson and Tanya Berger-Wolf, who are all UIC Computer Science professors.

Four other institutions will receive subawards from UIC and contribute to the project: UC San Diego (subaward lead Truong Nguyen), University of Hawai‘i at Mānoa (subaward lead Jason Leigh), Scripps Institution of Oceanography (subaward lead Jules Jaffe) and Jackson State University (subaward lead Francois Modave).

Abstract:

Much of science is observational: scientists observe the environment to learn about behavior, trends, or changes, in order to make informed decisions, adjustments, and allowances. SENSEI images can be used to capture life-sized environments of scientific interest and extract objects’ sizes, shapes and distances. SENSEI will be designed to be replicable by the research community, with open source software and clear hardware designs, including complete 3D printing instructions for the custom sensor mounting scaffolds. SENSEI, given its 9-times-IMAX resolution and ~terapixel/minute flood of imagery, poses big data challenges as well. Specifically, SENSEI will be an integrated, distributed cyber-collaboration instrument with four “Big” attributes: Big Resolution - configurable, portable, sensor-based camera systems; Big Data - computational and storage systems; Big Displays - for 3D stereoscopic viewing; and Big Networks - for an end-to-end, tightly coupled, distributed collaboration system. The SENSEI instrument will provide hardware scaffolding for holding its sensor arrays, the data acquisition and computing platforms, telemetry and communications, as well as software.

SENSEI will produce 4-pi steradian (full-surround) stereo video with photometric, radiometric, and photogrammetric capability for researchers in astronomy, biology, cultural heritage, digital arts, earth science, emergency preparedness, engineering, geographic information systems, manufacturing, oceanography, planetary science, archaeology, architecture, and science education. It is also intended to be an instrument of computer science and electrical engineering discovery, with variable arrays of sensors amenable to custom configurations; it will provide the means for mounting, pointing, triggering and synchronizing high-resolution sensors, as well as the flexible computational capability needed to ramp up the necessary image processing by several orders of magnitude.