SENSEI: Sensor Environment Imaging (SENSEI) Instrument

October 1st, 2014 - September 30th, 2019

Categories: Applications, Devices, Multimedia, Networking, Software, User Groups, Video / Film, VR

About

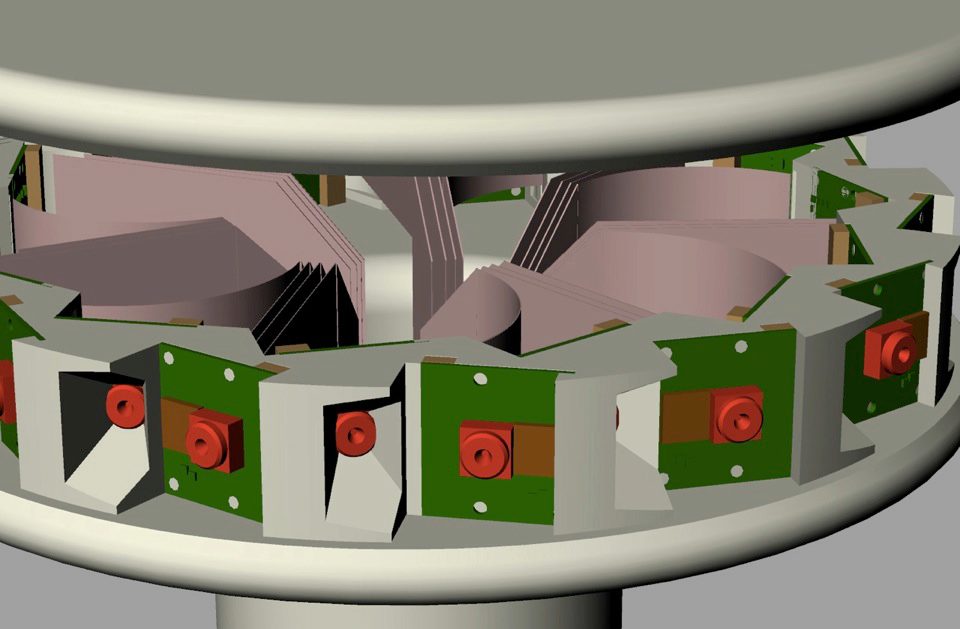

SENSEI will be a reconfigurable, ultra-high-resolution, spherical (4π steradian), photometric, radiometric, and photogrammetric real-time data-acquisition, sensor-based camera system capable of capturing 3D stereo and still images for viewing in collaboration-enabled, nationally networked, virtual-reality systems.

The ultimate goal of SENSEI will be to capture ultra-high-resolution still and motion 3D stereoscopic video and images of real-world scenes that can be viewed in 3D high-performance virtual-reality systems and can be used to extract objects’ sizes, shapes, and distances within a scene.

Given its ~terapixel/minute flood of imagery, SENSEI also presents big data challenges. Specifically, SENSEI will be an integrated, distributed cyber-collaboration instrument with four “big” attributes:

- “Big Resolution,” configurable, portable, sensor-based camera systems: Dynamic 3D stereoscopic motion/video and/or multi-spectral data camera systems will be developed. They will be flexibly configurable to capture geometries such as outside-in, inside-out, light field arrays, photogrammetry, macroscopy, and microscopy. SENSEI will have an optional weatherproof transport container for field use, and underwater cases developed by partners. SENSEI will create images that are ~9 times IMAX resolution. Open source software and wikis will encourage adoption and modification by other domain scientists, as with our OptIPortals and CAVEs from prior MRIs.

- “Big data” computational and storage systems: Graphics processing unit (GPU) computing with RDMA, fast storage systems, and software development are needed to perform the necessary image processing calibration, stitching, synchronization, partitioning, and video serving/streaming.

- “Big displays” for 3D stereoscopic viewing: Virtual-reality displays, such as the CAVE2, WAVE, and similar technologies, are ideal for end-user viewing and multi-user, multi-site collaboration. The next generation of 4K 3D OLED TVs and LCD TVs, singly or in small arrays, will also be supported.

- “Big networks” for an end-to-end, tightly coupled, distributed system: The distributed system - cameras, computational and storage systems, and display technologies - will use 10/40/100 Gbps optical networks at/between partner institutions to share and correlate data in useful time frames.
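
To put the ~terapixel/minute figure next to the 10/40/100 Gbps network tiers listed above, a back-of-envelope estimate can help. The sketch below is illustrative only: the 3 bytes per pixel (uncompressed 24-bit color) is an assumption and not a SENSEI specification, and the function names are hypothetical.

```python
# Back-of-envelope estimate of SENSEI's raw data rate.
# ASSUMPTION: 3 bytes per pixel (uncompressed 24-bit color); the actual
# sensor bit depth and any compression are not stated in the text.

PIXELS_PER_MINUTE = 1e12  # "~terapixel/minute" from the project description


def raw_rate_gbps(pixels_per_minute: float, bytes_per_pixel: float) -> float:
    """Return the uncompressed data rate in gigabits per second."""
    bits_per_second = pixels_per_minute * bytes_per_pixel * 8 / 60
    return bits_per_second / 1e9


def required_reduction(rate_gbps: float, link_gbps: float) -> float:
    """Return the factor by which data must shrink to fit one link in real time."""
    return rate_gbps / link_gbps


if __name__ == "__main__":
    rate = raw_rate_gbps(PIXELS_PER_MINUTE, bytes_per_pixel=3)
    print(f"Raw rate: {rate:.0f} Gbps")
    for link in (10, 40, 100):  # network tiers named in the text
        factor = required_reduction(rate, link)
        print(f"{link:>3} Gbps link: ~{factor:.0f}x reduction needed for real time")
```

Under that assumption the raw stream is on the order of hundreds of gigabits per second, which suggests why the GPU processing, fast storage, and partitioning listed above matter alongside the network itself.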

SENSEI can advance computer science and electrical engineering disciplines in the development of image capture systems, display technologies, image processing, visualization, and image creation, and advance scientific domain disciplines such as archaeology, architecture, astronomy, biology, cultural heritage, digital arts, earth science, emergency preparedness, engineering, geographic information systems, manufacturing, oceanography, planetary science, and science education.

SENSEI partner institutions are:

- University of Illinois at Chicago (UIC), Electronic Visualization Laboratory (EVL)

- Jackson State University (JSU), College of Science, Engineering and Technology

- Louisiana State University (LSU), Computer Science Department (Beginning in Year 2)

- Scripps Institution of Oceanography (SIO), Jaffe Laboratory for Underwater Imaging

- University of California, San Diego (UCSD), Calit2-Qualcomm Institute (Calit2-QI)

- University of Hawaii at Manoa (UHM), Lab for Advanced Visualization & Applications (LAVA)

UIC, UCSD, LSU and SIO are the primary technology developers of SENSEI. JSU and UHM, as well as the developer sites, have application teams who will be early adopters of SENSEI and provide feedback on the workflow, operation, and use.