PhD Dissertation Announcement: “Multimodal Situated Analytics (MuSA) for Analyzing Conversations in Extended Reality”

April 24th, 2024

Categories: Applications, MS / PhD Thesis, Software, Visualization, VR, Mixed Reality, Data Science

About

Candidate: Ashwini G. Naik

Committee Members:

Dr. Andrew Johnson (Advisor and Chair)

Dr. Robert Kenyon (Co-Advisor)

Dr. Debaleena Chattopadhyay

Dr. Nikita Soni

Dr. Steve Jones (External Member - Dept. of Communication)

Date and Time: Wednesday, April 24th, 2024, 10 AM - 12 PM CST

Location: ERF 2036

Abstract:

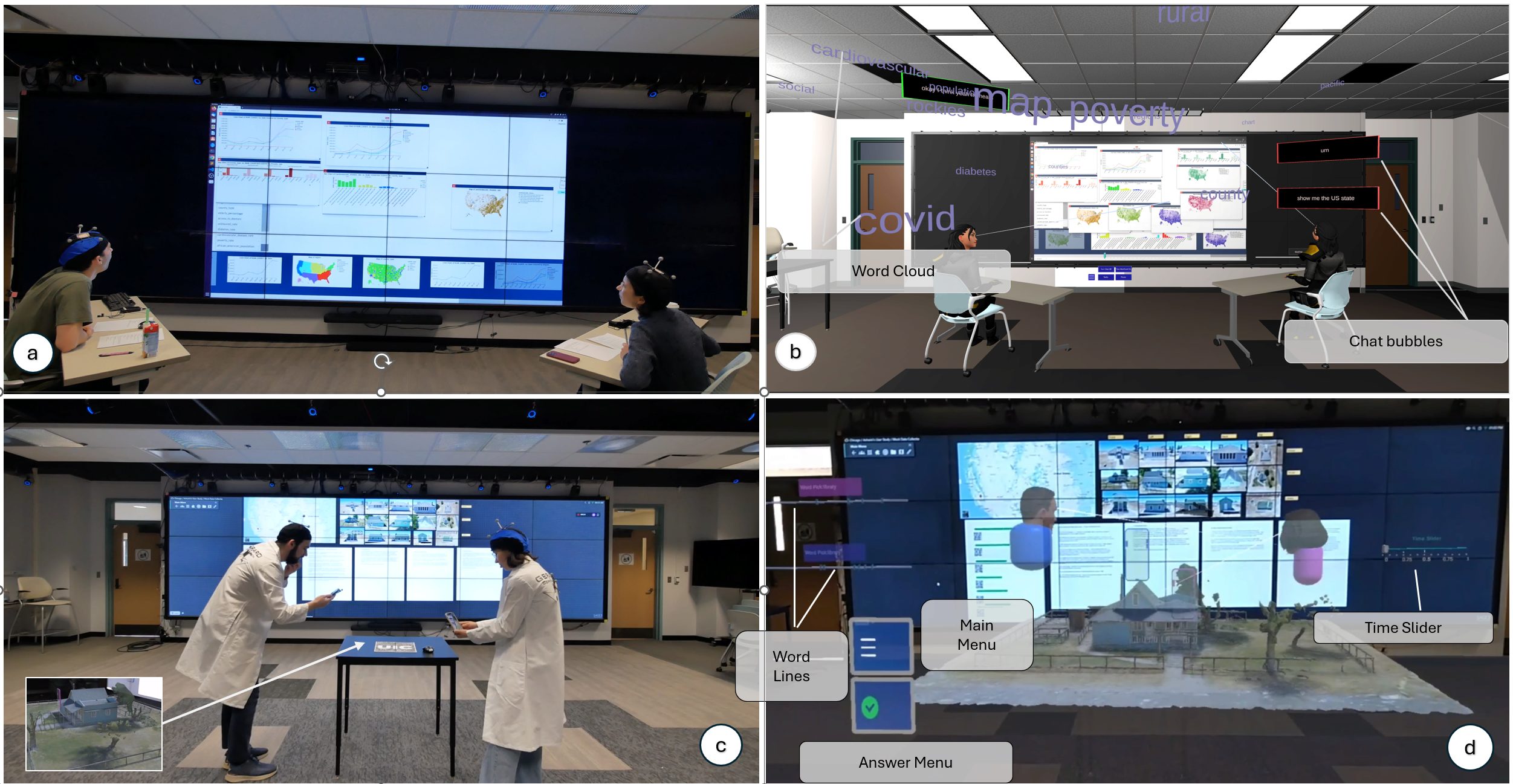

Meetings and conversations today are multimodal in nature, drawing on diverse technologies such as text, video, images, voice, AI agents, and immersive technologies. Replaying important multimodal conversations in their spatial and chronological contexts would benefit several audiences: researchers who study and analyze multimodal conversations, novices in any field who could learn from experts, and experts who missed crucial conversations. However, recreating such multimodal interactions requires integrating all of the modalities used in the conversation. Immersive environments provide a convenient setting to present data with its spatial and temporal referents through the use of situated analytics. We present MuSA (Multimodal Situated Analytics), an immersive environment prototype for recreating multimodal conversations for exploration and analysis. Our development pipeline consisted of several stages: data capture, data cleaning, data synchronization, prototype building, and deployment of the final product to end-user hardware. We conducted two comprehensive user studies to evaluate usability, user adoption, and space usage during the exploration of multimodal conversations with our application. In the first study, we explore conversations between seated participants (n=12), and in the second, we explore conversations between non-seated, moving participants (n=13). We share insights gained during the development phase, as well as empirical results and feedback collected through both user studies.