LASSI-EE: Leveraging LLMs to Automate Energy-Aware Refactoring of Parallel Scientific Codes (poster)

May 8th, 2025

Categories: Applications, Software, Supercomputing, Deep Learning, Machine Learning, Data Science, Artificial Intelligence, High Performance Computing

Authors

Dearing, M.T., Tao, Y., Wu, X., Lan, Z., Taylor, V.

About

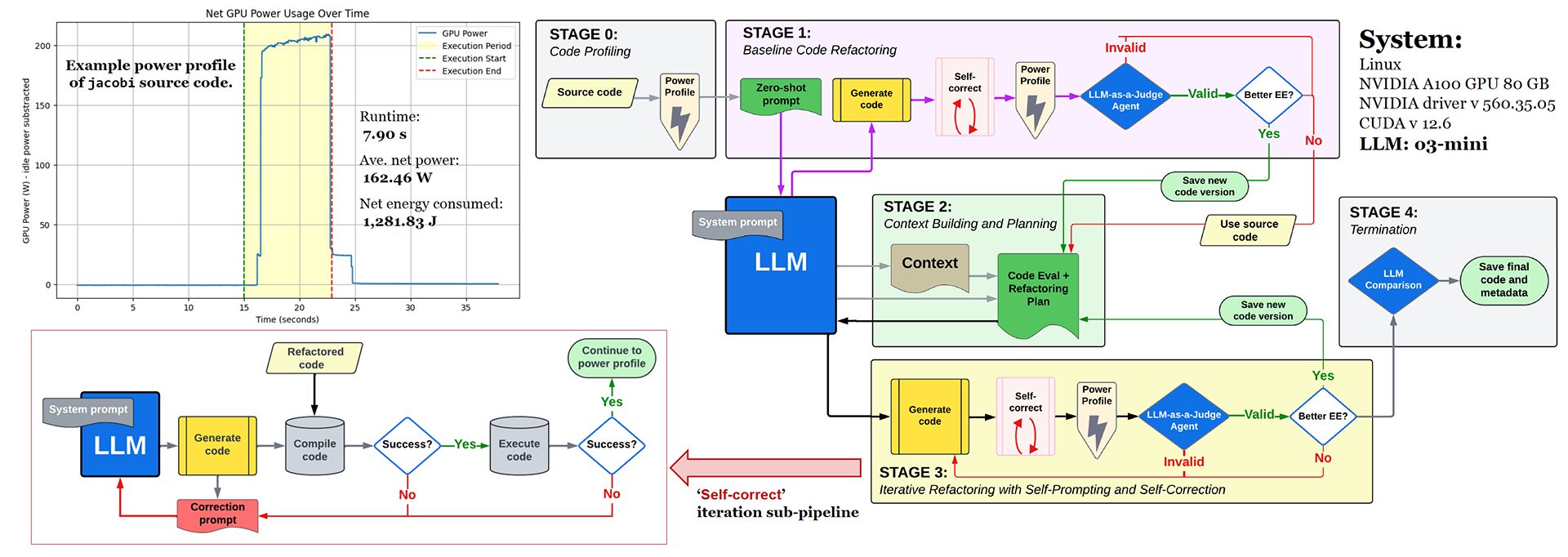

While large language models (LLMs) are increasingly used to generate parallel scientific code, most current efforts emphasize functional correctness and often overlook performance and energy considerations. In this work, we propose LASSI-EE, an automated LLM-based refactoring framework that takes a parallel code as input and generates an energy-efficient version of it for a target parallel system.

Through a multi-stage, iterative pipeline, LASSI-EE achieved an average energy reduction of 47% across 85% (17) of the 20 HeCBench benchmarks tested on NVIDIA A100 GPUs. Our findings demonstrate the broader potential of LLMs, not only for generating correct code but also for enabling energy-aware programming. We also discuss key insights and limitations of the framework to guide future improvements.
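To make the headline metric concrete, the following is a minimal sketch of how a summary of this form could be computed from per-benchmark energy measurements. It assumes one reading of the statement above: the average reduction is taken only over the benchmarks whose refactored code consumed less energy. The function name `energy_reduction_summary`, the benchmark names, and all energy values are hypothetical placeholders, not part of LASSI-EE and not results from the poster.

```python
from statistics import mean


def energy_reduction_summary(baseline_joules, refactored_joules):
    """Summarize energy savings across benchmarks (illustrative only).

    Both arguments map a benchmark name to its measured energy in joules.
    Returns (share_improved, mean_reduction), where mean_reduction is
    averaged only over benchmarks whose refactored code used less energy.
    """
    # Fractional reduction per benchmark: 0.25 means 25% less energy.
    reductions = {
        name: 1.0 - refactored_joules[name] / baseline_joules[name]
        for name in baseline_joules
    }
    improved = [r for r in reductions.values() if r > 0.0]
    share_improved = len(improved) / len(reductions)
    mean_reduction = mean(improved) if improved else 0.0
    return share_improved, mean_reduction


# Placeholder values for illustration only; these are NOT poster results.
baseline = {"bench_a": 100.0, "bench_b": 200.0, "bench_c": 50.0, "bench_d": 80.0}
refactored = {"bench_a": 50.0, "bench_b": 150.0, "bench_c": 55.0, "bench_d": 40.0}

share, avg = energy_reduction_summary(baseline, refactored)
print(f"{share:.0%} of benchmarks improved, mean reduction {avg:.0%}")
```

Reporting the share of improved benchmarks alongside the mean keeps the two numbers separate, so a large average over a small subset is not mistaken for a uniform gain.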

Resources

Citation

Dearing, M.T., Tao, Y., Wu, X., Lan, Z., Taylor, V., LASSI-EE: Leveraging LLMs to Automate Energy-Aware Refactoring of Parallel Scientific Codes (poster), The 12th Greater Chicago Area Systems Research Workshop (GCASR), Chicago, IL, May 8th, 2025. https://gcasr.org/2025/posters