EMMA: Efficient Multi-node Memory-aware AllReduce Algorithms (poster)

May 8th, 2025

Categories: Applications, Supercomputing, Data Science, Artificial Intelligence, High Performance Computing

Authors

Guerrini, V., Fan, K., Kumar, S.

About

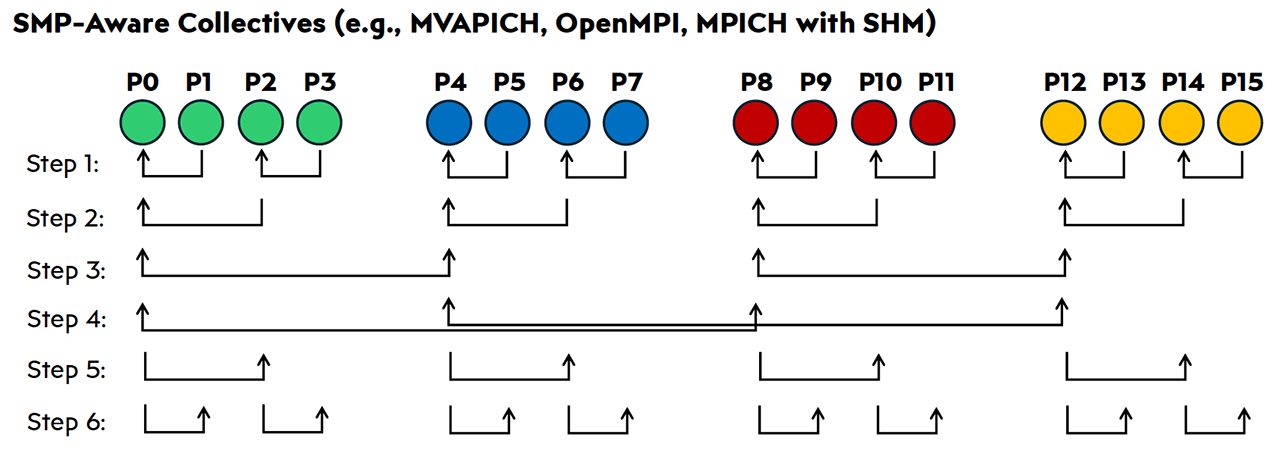

AllReduce is a critical collective in both HPC and large-scale AI workloads. However, scaling it to Exascale systems presents key challenges due to inter-node communication bottlenecks and underutilization of intra-node resources like shared memory and NVLink. This work analyzes state-of-the-art AllReduce algorithms to identify inefficiencies and opportunities for hybrid strategies that explicitly separate intra- and inter-node communication.
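To make the idea of separating intra- and inter-node communication concrete, here is a minimal single-process sketch of a generic two-level (leader-based) sum AllReduce. It is not the design presented in the poster. The function name `hierarchical_allreduce`, the `ranks_per_node` parameter, and the assumption that ranks are placed consecutively on nodes are all hypothetical choices made for illustration.

```python
from typing import List


def hierarchical_allreduce(data: List[List[float]], ranks_per_node: int) -> List[List[float]]:
    """Simulate a two-level sum AllReduce; `data` holds one vector per rank.

    Assumption: ranks are numbered so that each node owns a contiguous
    block of `ranks_per_node` ranks.
    """
    num_ranks = len(data)
    if ranks_per_node <= 0 or num_ranks % ranks_per_node != 0:
        raise ValueError("number of ranks must be a positive multiple of ranks_per_node")
    num_nodes = num_ranks // ranks_per_node

    # Phase 1 (intra-node): each node reduces its local vectors into one
    # partial sum, standing in for a shared-memory or NVLink reduction.
    node_sums = []
    for node in range(num_nodes):
        local = data[node * ranks_per_node:(node + 1) * ranks_per_node]
        node_sums.append([sum(column) for column in zip(*local)])

    # Phase 2 (inter-node): one leader per node combines the partial sums,
    # standing in for an AllReduce over the network among leaders only.
    total = [sum(column) for column in zip(*node_sums)]

    # Phase 3 (intra-node): every rank receives a copy of the final result.
    return [list(total) for _ in range(num_ranks)]
```

For example, `hierarchical_allreduce([[1, 2], [3, 4], [5, 6], [7, 8]], ranks_per_node=2)` models two nodes with two ranks each. Only Phase 2 would touch the network in a real implementation.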

We introduce a preliminary algorithmic design that leverages tunable intra-node communication patterns and discuss key performance criteria, including message count and data volume. Our early results provide insight into communication trade-offs and guide the development of adaptive AllReduce implementations optimized for Exascale systems.
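The two criteria named above, message count and data volume, can be illustrated with a simple counting model. The sketch below compares inter-node traffic for a flat ring AllReduce against a ring run only among per-node leaders. It uses the standard ring schedule of 2(p - 1) steps with chunks of n/p elements. The names `InterNodeCost`, `flat_ring_cost`, and `leader_ring_cost`, the consecutive rank placement, and the choice to ignore intra-node traffic are assumptions made for illustration, not the poster's model or results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InterNodeCost:
    messages: int  # messages that cross a node boundary
    volume: int    # elements that cross a node boundary, summed over all senders


def flat_ring_cost(num_nodes: int, ranks_per_node: int, n: int) -> InterNodeCost:
    """Ring AllReduce over all p ranks, with ranks placed consecutively on nodes."""
    p = num_nodes * ranks_per_node
    if n % p != 0:
        raise ValueError("n must be divisible by the total number of ranks")
    steps = 2 * (p - 1)  # reduce-scatter plus allgather
    # With consecutive placement, one ring link per node crosses the network.
    crossings = num_nodes if num_nodes > 1 else 0
    messages = steps * crossings
    return InterNodeCost(messages, messages * (n // p))


def leader_ring_cost(num_nodes: int, n: int) -> InterNodeCost:
    """Ring AllReduce among one leader per node, after an intra-node reduce."""
    if n % num_nodes != 0:
        raise ValueError("n must be divisible by the number of nodes")
    steps = 2 * (num_nodes - 1)
    messages = steps * num_nodes  # every leader sends once per step
    return InterNodeCost(messages, messages * (n // num_nodes))
```

Calling `flat_ring_cost(4, 8, 1024)` and `leader_ring_cost(4, 1024)` compares the two schemes for 4 nodes with 8 ranks each. Under this model the leader ring sends far fewer inter-node messages, while the inter-node volume changes much less, which is the kind of trade-off a tunable design would weigh.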

Citation

Guerrini, V., Fan, K., Kumar, S., EMMA: Efficient Multi-node Memory-aware AllReduce Algorithms (poster), The 12th Greater Chicago Area Systems Research Workshop (GCASR), Chicago, IL, May 8th, 2025. https://gcasr.org/2025/posters