Real-Time 3D Head Position Tracker System With Stereo Cameras Using a Face Recognition Neural Network

January 15th, 1996 - August 25th, 2004

About

Creating credible computer-generated virtual reality (VR) worlds requires continuously updating the images on every display so that each one is rendered with the correct perspective for the user. To do this, the computer must know the exact position and orientation of the user’s head.
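To make the dependence on head position concrete, here is a minimal sketch of how a tracked eye position could drive an off-axis (asymmetric-frustum) projection for a single flat screen. It assumes the screen lies in the plane z = 0, centered at the origin, with the viewer at positive z. The function name `off_axis_frustum` and its parameters are illustrative and not taken from the thesis. The returned bounds follow the parameter order of OpenGL's `glFrustum(left, right, bottom, top, near, far)`.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye, screen_width, screen_height, near, far):
    """Asymmetric frustum bounds for a screen centered at the origin in the
    z = 0 plane, viewed from eye = (ex, ey, ez) with ez > 0.

    Bounds are expressed at the near plane, as glFrustum expects; the view
    transform must additionally translate the scene by (-ex, -ey, -ez).
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (ez > 0)")
    # Similar triangles: scale the screen edges (relative to the eye) onto the near plane.
    scale = near / ez
    half_w, half_h = screen_width / 2.0, screen_height / 2.0
    return Frustum(
        left=(-half_w - ex) * scale,
        right=(half_w - ex) * scale,
        bottom=(-half_h - ey) * scale,
        top=(half_h - ey) * scale,
        near=near,
        far=far,
    )
```

With the eye centered, the frustum is symmetric; as the tracked head moves to the right, `left` grows in magnitude and `right` shrinks, skewing the view so the screen behaves like a window into the scene.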

Current head-tracking techniques include magnetic and acousto-inertial trackers, both of which require the user to wear clumsy head-mounted sensors with transmitters and/or wires.

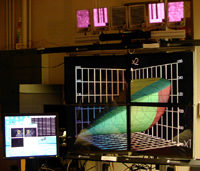

This thesis describes a two-camera, video-based, tetherless 3D head position tracker system designed specifically for both autostereoscopic displays and projection-based virtual reality systems. The user does not need to wear any sensors or markers.
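The abstract does not spell out how the two camera views are combined into a 3D position. One standard approach, shown here only as a sketch, is triangulation from a rectified stereo pair. It assumes two identical, parallel cameras with focal length `f` (in pixels), baseline `baseline`, and principal point `(cx, cy)`; a face found at column `x_left` in the left image and `x_right` in the right image then has disparity d = x_left - x_right and depth Z = f · baseline / d. The function and parameter names are hypothetical, and the thesis's actual camera model and calibration may differ.

```python
def triangulate_rectified(x_left, y_left, x_right, f, baseline, cx, cy):
    """Return (X, Y, Z) in the left camera's frame, in the units of `baseline`.

    Assumes a rectified pair: corresponding points share the same image row,
    and the right camera sits `baseline` units along +X from the left camera.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("non-positive disparity: point cannot be triangulated")
    Z = f * baseline / disparity          # depth is inversely proportional to disparity
    X = (x_left - cx) * Z / f             # back-project the left-image pixel
    Y = (y_left - cy) * Z / f
    return X, Y, Z
```

For example, with `f = 800`, a 0.1 m baseline, and the principal point at (320, 240), a face center at column 360 in the left image and 320 in the right image has a disparity of 40 pixels and lies about 2 m from the cameras. Because depth varies as 1/d, a one-pixel matching error costs more depth accuracy at long range, which is one reason consistent face localization in both channels matters.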

The head-position tracking technique is implemented with Artificial Neural Networks (ANN), which allow it to detect and recognize upright, tilted, frontal, and non-frontal faces in visually cluttered environments. Developing a video-based object detector with machine learning raises three main sub-problems. First, images of objects such as faces vary considerably with lighting, occlusion, pose, facial expression, and identity.

Second, despite all this variation, the system must still distinguish objects (faces) from non-objects (non-faces). Third, the system must distinguish the target face from any other faces in view so that it identifies and tracks the correct user.
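Face detection with neural networks is commonly done by scanning a small window across the image and asking a classifier whether each window contains a face. The sketch below assumes a single-hidden-layer perceptron with sigmoid units operating on flattened grayscale windows. The window size, stride, threshold, helper names (`mlp_score`, `scan`, `toy_net`), and the toy weights are all hypothetical placeholders, not the networks described in the thesis.

```python
import math
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]  # grayscale image, row-major, values in [0, 1]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def mlp_score(pixels: Sequence[float],
              w_hidden: Sequence[Sequence[float]],
              b_hidden: Sequence[float],
              w_out: Sequence[float],
              b_out: float) -> float:
    """Forward pass of a one-hidden-layer perceptron; returns a face score in (0, 1)."""
    hidden = [sigmoid(sum(w * p for w, p in zip(row, pixels)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    return sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)


def scan(image: Image, win: int, stride: int,
         score: Callable[[List[float]], float],
         threshold: float = 0.5) -> List[Tuple[int, int, float]]:
    """Slide a win x win window over the image; return (row, col, score) for hits."""
    hits = []
    rows, cols = len(image), len(image[0])
    for r in range(0, rows - win + 1, stride):
        for c in range(0, cols - win + 1, stride):
            # Flatten the window row by row, matching the network's input order.
            window = [image[r + i][c + j] for i in range(win) for j in range(win)]
            s = score(window)
            if s >= threshold:
                hits.append((r, c, s))
    return hits


def toy_net(window: List[float]) -> float:
    # Hypothetical weights: one hidden unit that responds to overall 2x2 window brightness.
    return mlp_score(window, [[4.0, 4.0, 4.0, 4.0]], [-8.0], [10.0], -6.0)


if __name__ == "__main__":
    img = [[0.0, 0.0, 0.0, 0.0],
           [0.0, 1.0, 1.0, 0.0],
           [0.0, 1.0, 1.0, 0.0],
           [0.0, 0.0, 0.0, 0.0]]
    print(scan(img, win=2, stride=1, score=toy_net))  # only the window at (1, 1) passes
```

A real detector would train the weights on labeled face and non-face windows and typically scan several image scales; the point here is only the structure: a fixed-size classifier applied at every window position.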

This thesis introduces several solutions to problems in the face detection and recognition domain, including:

- several neural networks (NN) per channel (left and right): one for recognition, one for detection, and one for tracking;
- real-time NN face and background training (a new user needs to spend only 2 minutes training before being able to use the system);
- infrared (IR) illumination, to further reduce the images' dependence on variations in room lighting, together with global (rather than local) image equalization;
- algorithms highly tuned for the vector (SIMD) instructions of the Intel Pentium IV processor; and
- a prediction module that achieves faster frame rates once a face has been recognized.
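Global equalization remaps every pixel through a single lookup table built from the whole frame's histogram, instead of computing a separate mapping for each local neighborhood, which keeps it cheap enough to run on every frame. Below is a minimal sketch of standard global histogram equalization for 8-bit grayscale pixels; the function name `equalize_global` is hypothetical, and the thesis may use a different variant.

```python
from typing import List


def equalize_global(pixels: List[int], levels: int = 256) -> List[int]:
    """Histogram-equalize a flat list of grayscale values in [0, levels) using
    one lookup table for the whole image (global, not local/adaptive)."""
    if not pixels:
        return []
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution function of pixel intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    total = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:  # constant image: nothing to stretch
        return list(pixels)
    # Map each intensity so the output CDF is approximately linear over [0, levels - 1].
    lut = [round((c - cdf_min) * (levels - 1) / (total - cdf_min)) for c in cdf]
    return [lut[p] for p in pixels]
```

For instance, `equalize_global([50, 50, 100, 150])` stretches the narrow input range to `[0, 0, 128, 255]`. Because the table depends only on the global histogram, one pass to build it and one pass to apply it suffice per frame.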
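The abstract does not describe the prediction module's internals. One simple possibility, sketched here purely as an assumption, is constant-velocity extrapolation: predict the next face center from the last two detections, then search only a small window around the prediction instead of the full frame. The names `predict_center` and `predict_search_window` and the `half_size` and `margin` parameters are hypothetical.

```python
from typing import Tuple

Point = Tuple[float, float]
Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive


def predict_center(prev: Point, curr: Point) -> Point:
    """Constant-velocity extrapolation: next = curr + (curr - prev)."""
    return (2 * curr[0] - prev[0], 2 * curr[1] - prev[1])


def predict_search_window(prev: Point, curr: Point, half_size: int,
                          margin: int, width: int, height: int) -> Box:
    """Search box around the predicted face center, padded by `margin` pixels
    to absorb prediction error and clipped to the frame bounds."""
    px, py = predict_center(prev, curr)
    r = half_size + margin
    left = max(0, int(px - r))
    top = max(0, int(py - r))
    right = min(width, int(px + r) + 1)
    bottom = min(height, int(py + r) + 1)
    return left, top, right, bottom
```

For a face that moved from (300, 200) to (310, 204) in a 640x480 frame, with `half_size=20` and `margin=10`, the next search box is `(290, 178, 351, 239)`: 61 x 61 pixels, or roughly 1.2% of the frame. Shrinking the search region this way is one plausible route to the faster frame rates the abstract attributes to prediction; a fallback to a full-frame search is needed whenever the face is lost.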

The goal is real-time tracking, which in our case means 30 frames per second (fps) at a video resolution of 640x480. The system has been evaluated on the ongoing autostereoscopic “Varrier” display project, where it achieves 30 fps at 320x240 and a 90% position-tracking rate. This dissertation also covers the earlier face-detection work from which the current 3D tracker system is derived.
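To put the target and the evaluated configuration side by side, the per-frame time budget and per-camera pixel throughput work out as follows. This is simple arithmetic on the figures above; actual processing cost depends on more than the pixel count.

$$
\begin{aligned}
t_{\text{frame}} &= \frac{1}{30\ \text{s}^{-1}} \approx 33.3\ \text{ms} \\
640 \times 480 \times 30 &= 9{,}216{,}000\ \text{pixels/s per camera} \\
320 \times 240 \times 30 &= 2{,}304{,}000\ \text{pixels/s per camera}
\end{aligned}
$$

The 640x480 goal therefore requires four times the per-camera pixel throughput of the evaluated 320x240 setup, and the two-camera rig doubles both figures (18,432,000 versus 4,608,000 pixels/s).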