LambdaTable

August 1st, 2004 - June 17th, 2009

Categories: Devices, Visualization

About

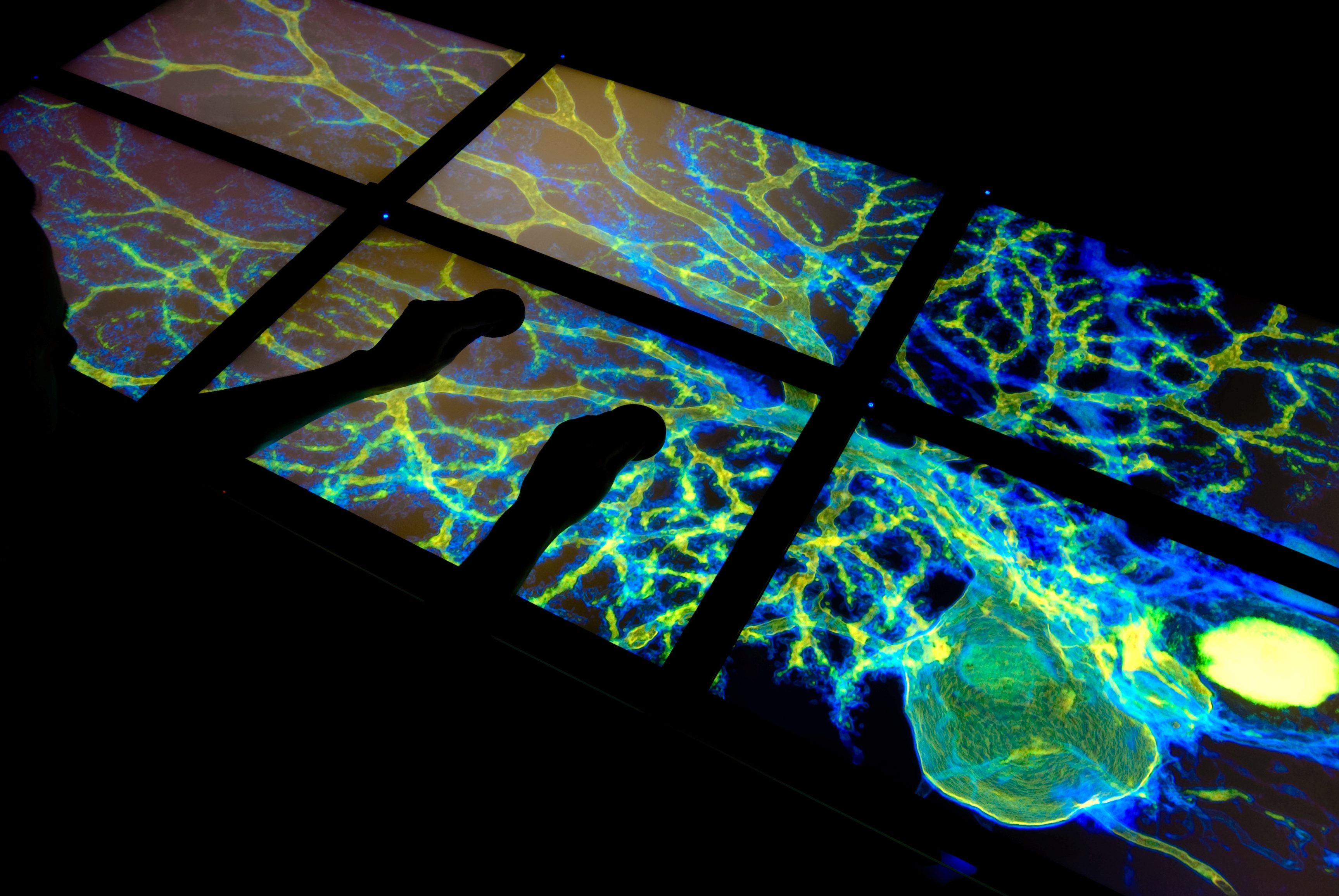

The LambdaTable is a tiled LCD tabletop display connected to high-bandwidth optical networks that supports interactive group-based visualization of ultra-high-resolution data.

The camera tracking system for the LambdaTable is a scalable multi-camera computer vision architecture designed to track input from many simultaneous users interacting with a variety of different interface devices.

Hardware and Camera Setup

The user interface employs cameras mounted overhead to track objects, each identified by a unique pattern of embedded infrared LEDs. IR-pass filters fitted to the cameras block visible light, making the LEDs easy to identify.

A methodology was devised to generate triangular patterns by varying the angles and lengths between vertices.

This produces more than thirty configurations that can be disambiguated regardless of rotation and resolved to a specific orientation. Each pattern consists of a base triad plus variants that encode user input states.

This makes it possible to track numerous devices simultaneously, to determine the position and orientation of each device, and to indicate user interaction such as button presses.
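The idea of a triangle that stays recognizable under rotation can be illustrated with a minimal sketch. It assumes, purely for illustration, that a pattern is identified by its sorted side lengths and that orientation is taken as the direction from the centroid to the vertex opposite the shortest side. The function names, the tolerance, and the example coordinates are hypothetical and are not taken from the LambdaTable software.

```python
import math
from itertools import combinations

def triangle_signature(points):
    """Sorted side lengths of a triangle; unchanged by rotation and translation."""
    return tuple(sorted(math.dist(a, b) for a, b in combinations(points, 2)))

def same_pattern(sig_a, sig_b, tol=0.5):
    """Compare two signatures side by side within a tolerance (in pixels)."""
    return all(abs(a - b) <= tol for a, b in zip(sig_a, sig_b))

def orientation_degrees(points):
    """Direction from the centroid to the vertex opposite the shortest side."""
    cx = sum(x for x, _ in points) / 3
    cy = sum(y for _, y in points) / 3

    def opposite_side(i):
        a, b = [p for j, p in enumerate(points) if j != i]
        return math.dist(a, b)

    apex = points[min(range(3), key=opposite_side)]
    return math.degrees(math.atan2(apex[1] - cy, apex[0] - cx)) % 360

def rotate(points, degrees):
    """Rotate points about the origin (used only to exercise the functions above)."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return [(x * c - y * s, x * s + y * c) for x, y in points]

base = [(0.0, 0.0), (40.0, 0.0), (0.0, 30.0)]   # scalene, so the apex is unambiguous
turned = rotate(base, 73)
matches = same_pattern(triangle_signature(base), triangle_signature(turned))
delta = (orientation_degrees(turned) - orientation_degrees(base)) % 360
```

Sorted side lengths alone cannot tell a triangle from its mirror image, so a scheme like this would need an additional handedness check if mirrored patterns were in use.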

Currently, four “mouse” and “puck” style interface devices have been built using the triangular LED arrangements. Point Grey Flea and Bumblebee cameras with a resolution of 640x480 and frame rates up to 60 frames per second have been employed in both single- and dual-camera configurations.

In the course of designing different configurations for the LambdaTable, a set of guidelines was developed for purchasing and configuring the cameras of the tracking system.

These guidelines evolved into a model that determines the number and arrangement of cameras required to achieve a desired precision for a table of any size. The model has been verified by experimental data.
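The page does not describe the model itself, so the following is only a rough sketch of the kind of calculation such a model might involve. It assumes that precision is expressed as millimetres of table surface per camera pixel, that each camera views a rectangular patch of the table head-on, and that overlap between cameras and lens distortion are ignored. The function name and parameters are hypothetical.

```python
import math

def cameras_needed(table_w_mm, table_h_mm, mm_per_pixel, resolution=(640, 480)):
    """Estimate a camera grid that covers the table at the requested precision.

    Returns (columns, rows, total). Assumes each camera covers a patch of
    resolution * mm_per_pixel millimetres and that patches tile without overlap.
    """
    patch_w = resolution[0] * mm_per_pixel
    patch_h = resolution[1] * mm_per_pixel
    cols = math.ceil(table_w_mm / patch_w)
    rows = math.ceil(table_h_mm / patch_h)
    return cols, rows, cols * rows

# A 2000 mm x 1000 mm table tracked at 1 mm per pixel with 640x480 cameras.
grid = cameras_needed(2000, 1000, 1.0)
```

Under these assumptions, halving the millimetres per pixel roughly quadruples the camera count, which is why the precision target drives the purchasing decision.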

Image Processing and Tracking Architecture

A real-time computer vision library was developed to collect and integrate the camera video streams and to deliver the position and orientation of tabletop devices.

The tracking library captures frames from the cameras and passes them through a sequence of image processing elements. The library leverages the Graphics Processing Unit (GPU) on modern graphics cards to accelerate certain image processing routines, such as smoothing and segmentation.
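To make the smoothing and segmentation stages concrete, here is a small CPU-only sketch in plain Python. It is not the library's GPU implementation: it assumes a 3x3 box blur for smoothing and a fixed brightness threshold followed by 4-connected flood fill for segmentation, and the function names and threshold value are hypothetical.

```python
def box_blur(img):
    """Smooth a grayscale image (list of rows) with a 3x3 box filter."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out

def segment(img, threshold):
    """Group pixels at or above the threshold into 4-connected regions."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if seen[y][x] or img[y][x] < threshold:
                continue
            stack, pixels = [(x, y)], []
            seen[y][x] = True
            while stack:
                px, py = stack.pop()
                pixels.append((px, py))
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if (0 <= nx < w and 0 <= ny < h
                            and not seen[ny][nx] and img[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            area = len(pixels)
            centroid = (sum(p[0] for p in pixels) / area,
                        sum(p[1] for p in pixels) / area)
            regions.append({"centroid": centroid, "area": area})
    return regions

# Synthetic 10x10 frame: two 2x2 "LED" blobs and one isolated noise pixel.
frame = [[0] * 10 for _ in range(10)]
for bx, by in ((1, 1), (6, 6)):
    for dy in range(2):
        for dx in range(2):
            frame[by + dy][bx + dx] = 255
frame[1][8] = 255  # single-pixel noise, suppressed by the blur
regions = segment(box_blur(frame), threshold=100)
```

The centroid and area stored for each region stand in for the feature vector mentioned in the text; a real pipeline would likely record more features, such as brightness or shape.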

Once the image is segmented, a vector of features is generated for each region and used to identify individual LEDs. A fully connected graph is built with a vertex for each LED region. The distance and angle between each pair of LEDs are represented in the graph edges.
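A fully connected graph of this kind can be sketched as a dictionary keyed by vertex pairs. The sketch assumes that each LED is reduced to its centroid in pixel coordinates and that the edge angle is the direction of the edge in the image; the original library may define the angle differently. The function name is hypothetical.

```python
import math
from itertools import combinations

def build_led_graph(centroids):
    """Return {(i, j): (distance, angle_degrees)} for every LED pair with i < j."""
    edges = {}
    for i, j in combinations(range(len(centroids)), 2):
        (x1, y1), (x2, y2) = centroids[i], centroids[j]
        distance = math.hypot(x2 - x1, y2 - y1)
        angle = math.degrees(math.atan2(y2 - y1, x2 - x1)) % 360
        edges[(i, j)] = (distance, angle)
    return edges

graph = build_led_graph([(0.0, 0.0), (3.0, 0.0), (0.0, 4.0)])
```

With n LEDs in view the graph has n(n-1)/2 edges, so the cost of building it grows quadratically with the number of LEDs.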

A search through the graph is conducted to discover subgraphs that match the known device patterns, revealing the identity, position and orientation for each device on the table.
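One simple way to search for matching subgraphs is to test every triple of LEDs against the known patterns. The sketch below does this by brute force, matching on sorted side lengths within a tolerance and claiming each LED for at most one device. The pattern table, device names, and tolerance are invented for illustration, and the actual search strategy used by the library is not described on this page. Orientation is left out to keep the sketch short.

```python
import math
from itertools import combinations

# Hypothetical device patterns: sorted side lengths in pixels.
KNOWN_PATTERNS = {
    "puck_a": (30.0, 40.0, 50.0),
    "mouse_b": (50.0, 120.0, 130.0),
}

def find_devices(leds, patterns=KNOWN_PATTERNS, tol=1.5):
    """Return [(name, centroid, led_indices)] for each triple that matches a pattern."""
    found, used = [], set()
    for triple in combinations(range(len(leds)), 3):
        if used & set(triple):
            continue  # an LED belongs to at most one device
        pts = [leds[i] for i in triple]
        sides = sorted(math.dist(a, b) for a, b in combinations(pts, 2))
        for name, ref in patterns.items():
            if all(abs(s - r) <= tol for s, r in zip(sides, ref)):
                centroid = (sum(p[0] for p in pts) / 3, sum(p[1] for p in pts) / 3)
                found.append((name, centroid, triple))
                used.update(triple)
                break
    return found

leds = [
    (100.0, 100.0), (140.0, 100.0), (100.0, 130.0),   # puck_a
    (250.0, 50.0),                                    # stray reflection
    (400.0, 300.0), (450.0, 300.0), (400.0, 420.0),   # mouse_b
]
devices = find_devices(leds)
```

Testing every triple is cubic in the number of LEDs, which is acceptable for a handful of devices; a larger installation would probably prune candidates by edge length first.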

The tracking data from each camera is then merged into a common coordinate system and passed to the table application as a stream of UDP messages.
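The merge and transmit steps might look like the following sketch. The wire format, field order, port number, and per-camera calibration values are all assumptions made for illustration; the page does not document the real message layout. The mapping from pixels to table coordinates is reduced to a scale and an offset per camera, whereas a real calibration would more likely use a homography.

```python
import socket
import struct

# Hypothetical wire format in network byte order:
# device id, x and y in table millimetres, orientation in degrees, button state.
MESSAGE = struct.Struct("!Ifff?")

def to_table(camera, px, py):
    """Map a pixel coordinate from one camera into shared table millimetres."""
    ox, oy = camera["origin_mm"]
    scale = camera["mm_per_pixel"]
    return ox + px * scale, oy + py * scale

def encode(device_id, x_mm, y_mm, angle_deg, pressed):
    """Pack one tracking update into a fixed-size datagram payload."""
    return MESSAGE.pack(device_id, x_mm, y_mm, angle_deg, pressed)

def decode(payload):
    """Unpack a payload produced by encode()."""
    return MESSAGE.unpack(payload)

def send_update(payload, host="127.0.0.1", port=9000):
    """Send one update as a single UDP datagram (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))

# Two side-by-side cameras, each covering 960 mm of table width at 1.5 mm per pixel.
cameras = [
    {"origin_mm": (0.0, 0.0), "mm_per_pixel": 1.5},
    {"origin_mm": (960.0, 0.0), "mm_per_pixel": 1.5},
]
x_mm, y_mm = to_table(cameras[1], 100, 200)
payload = encode(7, x_mm, y_mm, 90.0, True)
```

A table application would then bind a UDP socket to the chosen port and call `decode` on each datagram it receives.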

This permits any application written for a table display to integrate the tracking system as long as it has access to a network adapter.