Designing an Expressive Avatar of a Real Person

September 20th, 2010

Categories: Applications, Human Factors, MS / PhD Thesis, Software, VR

Authors

Lee, S., Carlson, G., Jones, S., Johnson, A., Leigh, J., Renambot, L.

About

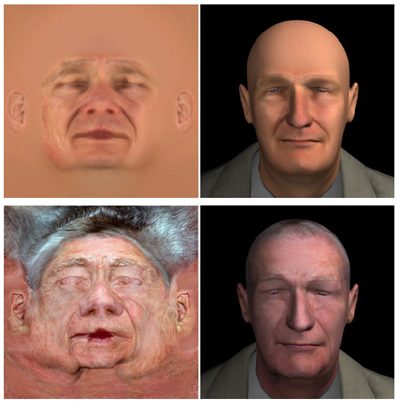

The human ability to express and recognize emotions plays an important role in face-to-face communication, and as technology advances it will become increasingly important for computer-generated avatars to be similarly expressive. In this paper, we present the detailed development process for the Lifelike Responsive Avatar Framework (LRAF) and a prototype application that models a specific individual, which we use to analyze the effectiveness of expressive avatars. In particular, our pilot study (n = 1,744) has two goals: to determine whether the specific avatar being developed can convey emotional states (Ekman's six classic emotions) via facial features, and whether a realistic avatar is an appropriate vehicle for conveying the emotional states that accompany spoken information. The results show that happiness and sadness are identified with a high degree of accuracy, while the other four emotional states show mixed results.

Citation

Lee, S., Carlson, G., Jones, S., Johnson, A., Leigh, J., Renambot, L., Designing an Expressive Avatar of a Real Person, Proceedings of the 10th International Conference on Intelligent Virtual Agents, IVA 2010, LNCS vol. 6356, Philadelphia, PA, USA, Springer, pp. 64-76, September 20th, 2010.