Video Avatars

January 1st, 1996 - December 31st, 1998

Categories: Software, Tele-Immersion

About

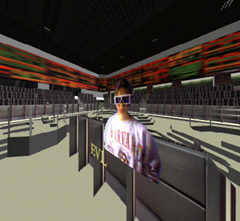

Tele-immersive virtual applications utilize avatars to represent users in remote environments, primarily to aid in communication during collaborative sessions, so participants know their counterpart’s location, orientation, and in the case of real-time video avatars, some suggestion of emotion through facial gestures.

Networked tracker information provides for accurate avatar positioning within the environment (translation and rotation), so users easily understand their relationship within the shared virtual space.

Various forms of avatars have been used within EVL - from “puppet” avatars (3D computer graphic models), to 3D static and real-time video avatars displayed in 2 or 2 1/2 dimensions.

In the case of 3D static video avatars, the participant’s complete body is captured as they rotate on a turntable; these images are then processed and prepared into a “movie”, from which frames are displayed to provide the avatars correct location and orientation in stereo within the 3D environment. The avatar appears as a motionless 3-D wax figure in the virtual space.

2D video avatars consist of real-time video texture mapped onto a flat polygon or with the background “cut-out” and displayed within the 3D space. Although the video avatar is just a texture on a flat polygon, removing the background makes the avatar appear convincingly immersed in the 3D environment.

In the preliminary implementation of the 2D video avatar system, two networked ImmersaDesks (IDesk) were used to allow for better lighting control required for image capture and background segmentation (chroma keying).

The real-time video is captured using a camera mounted on top of each IDesk screen and the SGI Onyx Sirius video hardware. Each user is positioned in front of a blue or green background, which is eliminated using traditional video chroma keying techniques.

The captured video is compressed, sent over the network, decompressed and textured onto a polygon positioned and oriented correctly within the virtual environment based on the remote user’s tracking information. The same procedure occurs both ways. Each user’s audio is also captured and sent over the network simultaneously.

In the case of 2 1/2D video avatars, the video image is projected onto a static head model using a projective texture matrix. This allows the view from the camera to be mapped back onto the head model. This technique eliminates the need for specialize lighting / backgrounds; thus simplifying some technical aspects of the system.

Initial research utilized a generic head model, which yielded some visual artifacts such as stretching and distortions to facial features. Current research uses a laser scanner to capture a 3D head model, onto which the captured video image is registered.

Results are extremely effective. Research to improve this technique is ongoing.