PARIS™ : Personal Augmented Reality Immersive System

September 1st, 1998 - October 31st, 2002

Categories: Devices

About

Twenty years ago, Ken Knowlton created a see-through display for Bell Labs using a half-silvered mirror mounted at an angle in front of a telephone operator.

The monitor driving the display was positioned above the desk facing down so that its image of a virtual keyboard could be superimposed on the operator’s hands working under the mirror. The keycaps on the operator’s physical keyboard could be dynamically relabeled to match the task of completing a call as it progressed.

Devices that align computer imagery with the user’s viewable environment, like Knowlton’s, are examples of augmented reality, or see-through VR.

More recently, researchers at the National University of Singapore’s Institute of Systems Science built a stereo device on a similar plan using a Silicon Graphics monitor, a well-executed configuration for working with small parts in high-resolution VR.

Neither of these systems provides tracking; both assume the user is seated in a fixed position.

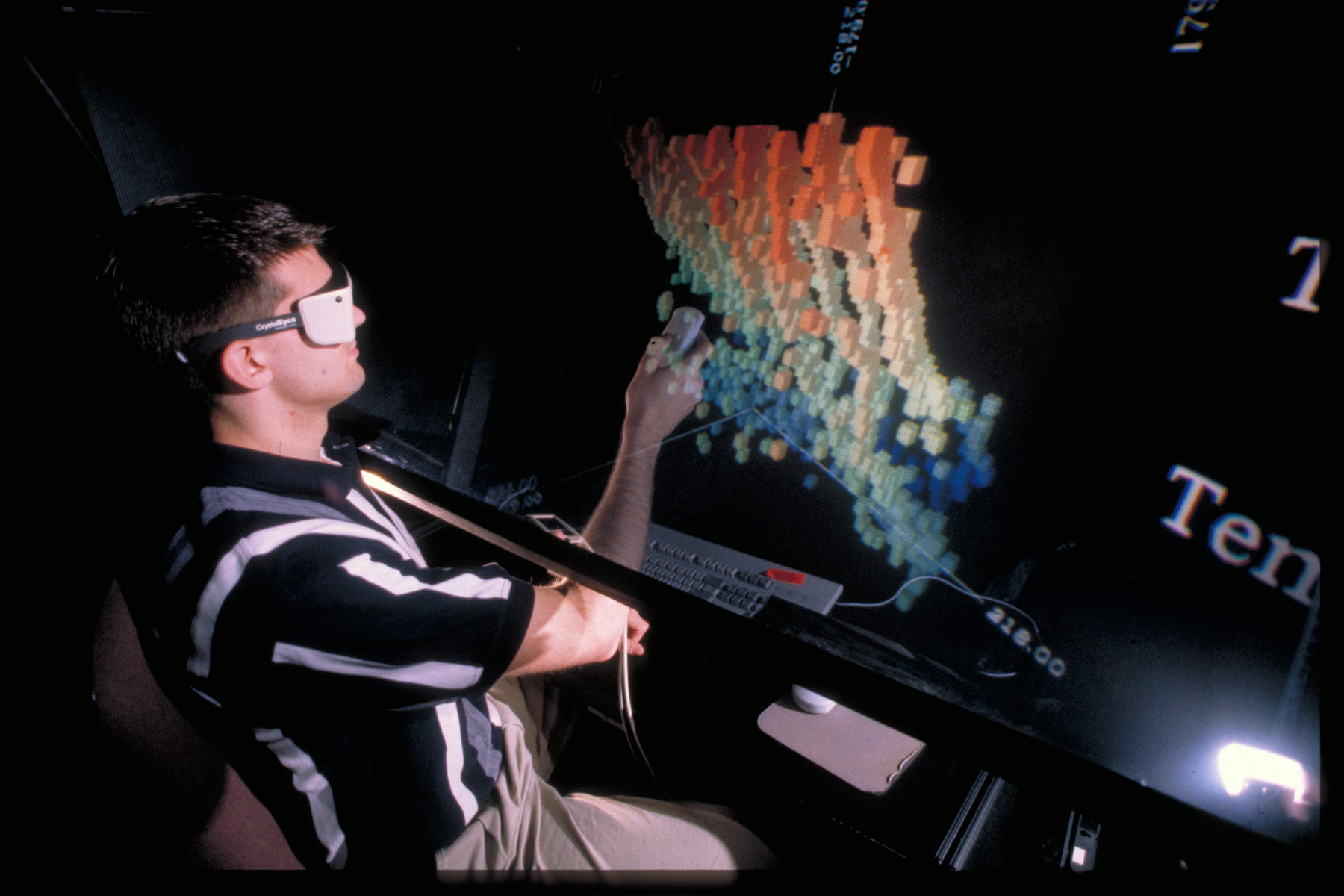

EVL is using projection technology to prototype PARIS™, a third-generation version of this concept, optimized to allow users to interact with the environment using their hands or a variety of input devices, such as haptic (touch) displays like the Phantom and force-feedback gloves.

The PARIS™ display has excellent contrast and variable lighting, which allow hands to be seen immersed in the imagery. The current PARIS™ production prototype employs the Christie Mirage 2000 DLP projector and a double mirror-fold to compactly and brightly illuminate the overhead black screen.

This configuration is well suited to the lighting conditions of a typical office environment. The device can be easily packed, moved and deployed.

New modalities (such as vision, speech and gesture) are being explored as human-computer interfaces for the PARIS™ system.

Gesture recognition can come from either tracking the user’s movements or processing them using video camera input. Gaze direction, or eye tracking, using camera input is also possible.

Audio support can be used for voice recognition and generation, and in conjunction with recording tele-immersive sessions.

Used together, these systems enable tetherless tracking and unencumbered hand movements for improved augmented reality interaction.

PARIS™ uses the EVL-developed collaboration and networking software CAVERNsoft and Quanta, which enable real-time interactions over very-high-speed networks. CAVERNsoft and Quanta run under Irix, Linux and Win9x/NT/2000.

PARIS™ Hardware Specifications:

- Screen: 80” diagonal rigid high contrast (black)

- Projector: Christie Mirage 2000

- Mirror: 60% reflective

- Vertical FOV: adjustable +/- 15°

- Horizontal FOV at centerline: 120° inclusive

- Tracking: (Ethernet) Ascension pcBIRD® or InterSense IS-900

- Stereo: Alternate (sequential) field type decoded with actively switched eyewear

- Refresh rate: 100Hz

- Dimensions: 5’9” x 2’8” x 6’5” (packed); 5’9” x 6’1” x 7’11” (deployed)
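
Some of the figures above are easier to compare after a little arithmetic. The sketch below is illustrative only and is not part of the PARIS™ software. It converts the listed imperial dimensions to metres and derives a per-eye refresh rate, assuming (as is usual for field-sequential stereo with actively switched eyewear, though not stated in the specification) that each eye sees every other field. The function names `feet_inches_to_m` and `per_eye_rate_hz` are hypothetical helpers introduced for this example.

```python
# Illustrative arithmetic on the published PARIS specifications.
# Helper names are hypothetical; only the input numbers come from the spec list.

INCH_TO_M = 0.0254  # exact definition of the inch in metres


def feet_inches_to_m(feet, inches):
    """Convert a feet-and-inches measurement to metres, rounded to millimetres."""
    return round((feet * 12 + inches) * INCH_TO_M, 3)


def per_eye_rate_hz(display_refresh_hz):
    """Per-eye rate for field-sequential stereo.

    Assumption: left and right images alternate field by field,
    so each eye is shown half of the displayed fields.
    """
    return display_refresh_hz / 2


# Screen: 80-inch diagonal
screen_diagonal_m = round(80 * INCH_TO_M, 3)

# Dimensions, in the order listed in the specification
packed_m = [feet_inches_to_m(5, 9), feet_inches_to_m(2, 8), feet_inches_to_m(6, 5)]
deployed_m = [feet_inches_to_m(5, 9), feet_inches_to_m(6, 1), feet_inches_to_m(7, 11)]

# Refresh rate: 100 Hz, shared between two eyes
eye_rate = per_eye_rate_hz(100)

if __name__ == "__main__":
    print("Screen diagonal (m):", screen_diagonal_m)
    print("Packed (m):", packed_m)
    print("Deployed (m):", deployed_m)
    print("Per-eye refresh (Hz):", eye_rate)
```

Under that assumption, the 100Hz refresh rate corresponds to 50 fields per second for each eye.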

PARIS™ system development and cranial implant research are made possible by major funding to the University of Illinois at Chicago from the National Science Foundation (NSF) Research Infrastructure award EIA-9802090 and Major Research Instrumentation award EIA-9871058, and the Department of Energy (DoE) Accelerated Strategic Computing Initiative (ASCI) program, award B519836. CAVERNsoft and Quanta are funded by NSF awards EIA-9802090, ANI-9730202, ANI-0129527 and the NSF Partnerships for Advanced Computational Infrastructure (PACI) cooperative agreement (ACI-9619019) to the National Computational Science Alliance.