Planetary-scale Terrain Composition

August 1st, 2007 - October 23rd, 2008

Categories: Applications, MS / PhD Thesis, Museums, Software, Visualization

About

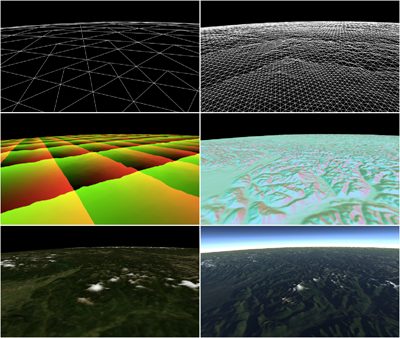

Planetary-scale Terrain Composition is a GPU-centric real-time approach to generating and rendering planetary bodies composed of arbitrary quantities and types of height-map data, textured and illuminated using arbitrary quantities and types of surface-map data. At the core of this approach lies the assertion that modern graphics hardware need not draw a distinction between colors and vectors. Massively parallel vector stream processors allow color computation to be performed with 32-bit floating-point precision, merging the processing of color with that of geometry. With the distinction between color and vector blurred, a variety of highly efficient image-processing operations become applicable to both terrain geometry and terrain surface maps.
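To illustrate the claim that color and vector data need not be treated differently, the following minimal Python sketch (an illustration, not the project's code; the name `bilinear_sample` is hypothetical) implements one filtering operation, bilinear sampling, over texels that are plain tuples of floats. The same function resamples a one-channel height map and a three-channel color map, much as a single fragment program could process either kind of floating-point texture on the GPU.

```python
from typing import List, Tuple

# A texel is just a tuple of floats: (height,) for a height map,
# (r, g, b) for a color map. Nothing below distinguishes the two.
Texel = Tuple[float, ...]
Image = List[List[Texel]]


def lerp(a: Texel, b: Texel, t: float) -> Texel:
    """Component-wise linear interpolation between two texels."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def bilinear_sample(img: Image, u: float, v: float) -> Texel:
    """Sample img at normalized coordinates (u, v) in [0, 1] with bilinear filtering."""
    h, w = len(img), len(img[0])
    x, y = u * (w - 1), v * (h - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)  # clamp at the far edges
    fx, fy = x - x0, y - y0
    top = lerp(img[y0][x0], img[y0][x1], fx)
    bottom = lerp(img[y1][x0], img[y1][x1], fx)
    return lerp(top, bottom, fy)


# The same operation applied to geometry (heights) and to color.
height = [[(0.0,), (10.0,)], [(20.0,), (30.0,)]]
color = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]]
print(bilinear_sample(height, 0.5, 0.5))  # (15.0,)
print(bilinear_sample(color, 0.5, 0.5))   # (0.5, 0.5, 0.5)
```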

This work demonstrates a mechanism for the real-time manipulation and display of very large-scale terrain height and surface data. Beyond simply rendering terrain, this mechanism affords opportunities to combine data in powerful ways, bringing together disparate planetary-scale data sets smoothly and efficiently and adapting to their differences to produce a single, uniform composite visualization.
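Smooth composition of overlapping data sets can be sketched on the CPU as follows. This is an illustrative Python sketch under stated assumptions, not the method used by this work: each hypothetical `Layer` covers a rectangle of normalized (u, v) space at its own resolution, its weight ramps from 0 to 1 across a feathered border using a smoothstep, and layers are combined in priority order with the "over" operator, so a higher-resolution inset replaces the base data in its interior while blending into it at its edges. Because samples are plain floats, the same code applies to heights or to one channel of a surface map.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Layer:
    """Hypothetical data layer covering a rectangle of normalized (u, v) space."""
    data: List[List[float]]  # samples on a regular grid, any resolution
    u0: float
    v0: float
    u1: float
    v1: float
    feather: float = 0.05    # width of the soft edge; 0 means a hard edge

    def sample(self, u: float, v: float) -> float:
        """Bilinear sample at global (u, v); (u, v) must lie inside the rectangle."""
        s = (u - self.u0) / (self.u1 - self.u0)
        t = (v - self.v0) / (self.v1 - self.v0)
        h, w = len(self.data), len(self.data[0])
        x, y = s * (w - 1), t * (h - 1)
        x0, y0 = int(x), int(y)
        x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
        fx, fy = x - x0, y - y0
        top = self.data[y0][x0] * (1 - fx) + self.data[y0][x1] * fx
        bot = self.data[y1][x0] * (1 - fx) + self.data[y1][x1] * fx
        return top * (1 - fy) + bot * fy

    def weight(self, u: float, v: float) -> float:
        """0 outside the rectangle, rising smoothly to 1 over `feather` from its edge."""
        d = min(u - self.u0, self.u1 - u, v - self.v0, self.v1 - v)
        if d < 0.0:
            return 0.0
        if self.feather <= 0.0:
            return 1.0
        t = min(d / self.feather, 1.0)
        return t * t * (3.0 - 2.0 * t)  # smoothstep


def composite(layers: List[Layer], u: float, v: float) -> Optional[float]:
    """Combine layers lowest-priority first with 'over'; None if none covers (u, v)."""
    result: Optional[float] = None
    for layer in layers:
        w = layer.weight(u, v)
        if w <= 0.0:
            continue
        s = layer.sample(u, v)
        result = s if result is None else result * (1.0 - w) + s * w
    return result


base = Layer([[0.0, 0.0], [0.0, 0.0]], 0.0, 0.0, 1.0, 1.0, feather=0.0)
inset = Layer([[10.0] * 4 for _ in range(4)], 0.25, 0.25, 0.75, 0.75, feather=0.1)
print(composite([base, inset], 0.5, 0.5))  # 10.0 (inset interior)
print(composite([base, inset], 0.1, 0.1))  # 0.0 (base only)
```

Priority-ordered "over" blending is one choice among several; a weighted average would instead mix overlapping layers equally, which tends to dilute high-resolution detail.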

Many interrelated planetary height-map and surface-image data sets exist, and more data are collected each day. Broad communities of scientists require tools to compose these data interactively and explore them through real-time visualization. Although related, these data sets are often unregistered with one another, differing in projection, resolution, format, and type.

This GPU-centric approach to the real-time composition and display of unregistered but related planetary-scale data employs a GPGPU process to tessellate spherical height fields. It uses a render-to-vertex-buffer technique to operate on polygonal surface meshes in image space, allowing geometry processes to be expressed as image processing. With height and surface map processing unified in this fashion, a number of powerful composition operations may be applied to both alike. Examples include adaptation to the non-uniform sampling introduced by map projection, seamless blending of data of disparate resolution or transformation regardless of boundaries, and smooth interpolation between levels of detail in both geometry and imagery. Issues of scalability and precision are also addressed, providing out-of-core access to gigapixel data sources and correct rendering at sub-meter scales.
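The render-to-vertex-buffer idea, in which geometry is an image whose texels are vertex positions, can be sketched on the CPU. In this minimal Python sketch (an illustration under our own assumptions, not the project's code), a hypothetical `position_image` function converts a latitude/longitude height tile into a grid of 3D positions on a sphere. On the GPU, a fragment program would write the same per-texel quantity to a floating-point render target, and the resulting buffer would then be read back as vertices. Because the grid topology never changes, one index buffer from the hypothetical `triangle_indices` can be shared by every tile. Heights and radius are assumed to be in the same units, for example meters.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def position_image(heights: List[List[float]],
                   lon0: float, lon1: float, lat0: float, lat1: float,
                   radius: float) -> List[List[Vec3]]:
    """Map a height tile (rows = latitude, columns = longitude, degrees) to an
    'image' whose texels are planet-centered XYZ vertex positions."""
    rows, cols = len(heights), len(heights[0])
    if rows < 2 or cols < 2:
        raise ValueError("a tile needs at least 2x2 samples")
    out: List[List[Vec3]] = []
    for j in range(rows):
        lat = math.radians(lat0 + (lat1 - lat0) * j / (rows - 1))
        row: List[Vec3] = []
        for i in range(cols):
            lon = math.radians(lon0 + (lon1 - lon0) * i / (cols - 1))
            r = radius + heights[j][i]  # displace along the sphere's normal
            row.append((r * math.cos(lat) * math.cos(lon),
                        r * math.cos(lat) * math.sin(lon),
                        r * math.sin(lat)))
        out.append(row)
    return out


def triangle_indices(rows: int, cols: int) -> List[Tuple[int, int, int]]:
    """Fixed index buffer for a rows x cols grid: two triangles per cell."""
    tris: List[Tuple[int, int, int]] = []
    for j in range(rows - 1):
        for i in range(cols - 1):
            a = j * cols + i  # this row, this column
            b = a + 1         # this row, next column
            c = a + cols      # next row, this column
            d = c + 1         # next row, next column
            tris.append((a, c, b))
            tris.append((b, c, d))
    return tris


tile = [[0.0, 0.0], [0.0, 0.0]]
print(position_image(tile, 0.0, 90.0, 0.0, 90.0, 1.0)[0][0])  # (1.0, 0.0, 0.0)
print(triangle_indices(2, 2))  # [(0, 2, 1), (1, 2, 3)]
```

One reason precision needs explicit attention at planetary scale: 32-bit floats near Earth's mean radius (about 6,371,000 m) are spaced 0.5 m apart, so planet-centered coordinates stored in single precision cannot by themselves resolve sub-meter detail. Python floats are 64-bit, so this sketch does not exhibit that limitation.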